Author: Umang Dayal

Physical AI succeeds not only because of larger models, but also because of richer, synchronized multisensor...

Build Smarter AI with Multisensor Fusion

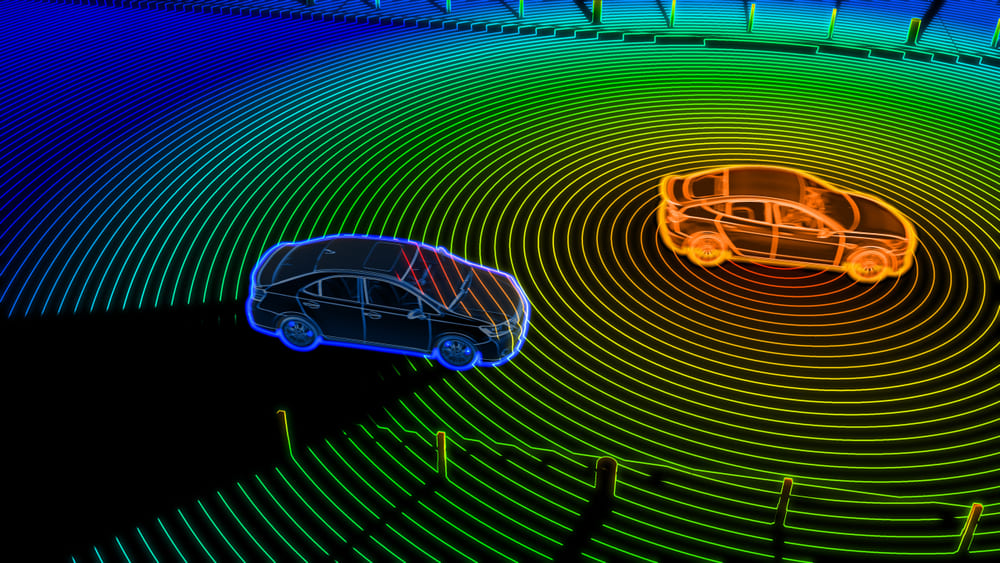

Digital Divide Data delivers high-quality multisensor fusion services that combine camera, LiDAR, radar, and other sensor data into unified training datasets. By synchronizing and annotating multimodal inputs, we help computer vision systems achieve robust perception, improved accuracy, and real-world reliability.

Multisensor Fusion Services That Power Reliable Autonomous Systems

Digital Divide Data (DDD) is a global leader in computer vision data services, enabling AI systems to understand the world through integrated sensor data. Our multisensor fusion services combine human expertise, advanced quality frameworks, and secure infrastructure to deliver production-ready datasets for complex AI applications.

Multisensor Fusion Workflow End-to-End

Fully managed multisensor fusion from raw sensors to unified intelligence

Define perception goals, sensor stack, annotation requirements, and quality benchmarks.

Multisensor data is ingested, synchronized, and spatially calibrated in controlled environments.

Objects and events are annotated consistently across all sensor modalities.

Multi-layer QA ensures spatial, temporal, and semantic consistency across datasets.

Challenging conditions and rare scenarios are reviewed to improve system reliability.

Unified datasets are delivered in client-ready formats with iterative refinement as models evolve.
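As a rough illustration of the synchronization step in this workflow, the sketch below pairs each camera frame with the nearest LiDAR sweep by timestamp and flags frames that have no sweep within a tolerance. It is a minimal example under stated assumptions, not a description of DDD's tooling: the function name `match_nearest`, the 50 ms default tolerance, and the sample timestamps are all hypothetical.

```python
from bisect import bisect_left


def match_nearest(camera_ts, lidar_ts, tolerance_s=0.05):
    """Pair each camera timestamp with the nearest LiDAR timestamp.

    Both lists hold seconds, and lidar_ts must be sorted ascending.
    Returns (camera_t, lidar_t) pairs; lidar_t is None when no sweep
    falls within tolerance_s, so the frame can be flagged for review.
    The 50 ms default is an illustrative assumption, not a standard.
    """
    pairs = []
    for t in camera_ts:
        i = bisect_left(lidar_ts, t)
        # Only the sweep just before and just after t can be nearest.
        candidates = lidar_ts[max(i - 1, 0):i + 1]
        best = min(candidates, key=lambda s: abs(s - t), default=None)
        if best is not None and abs(best - t) <= tolerance_s:
            pairs.append((t, best))
        else:
            pairs.append((t, None))
    return pairs


# Hypothetical timestamps: the last camera frame has no nearby sweep.
pairs = match_nearest([0.01, 0.12, 0.50], [0.0, 0.1, 0.2])
```

Frames paired with `None` are the ones a review step would need to inspect or drop before cross-sensor annotation begins.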

Multisensor Fusion Use Cases We Support

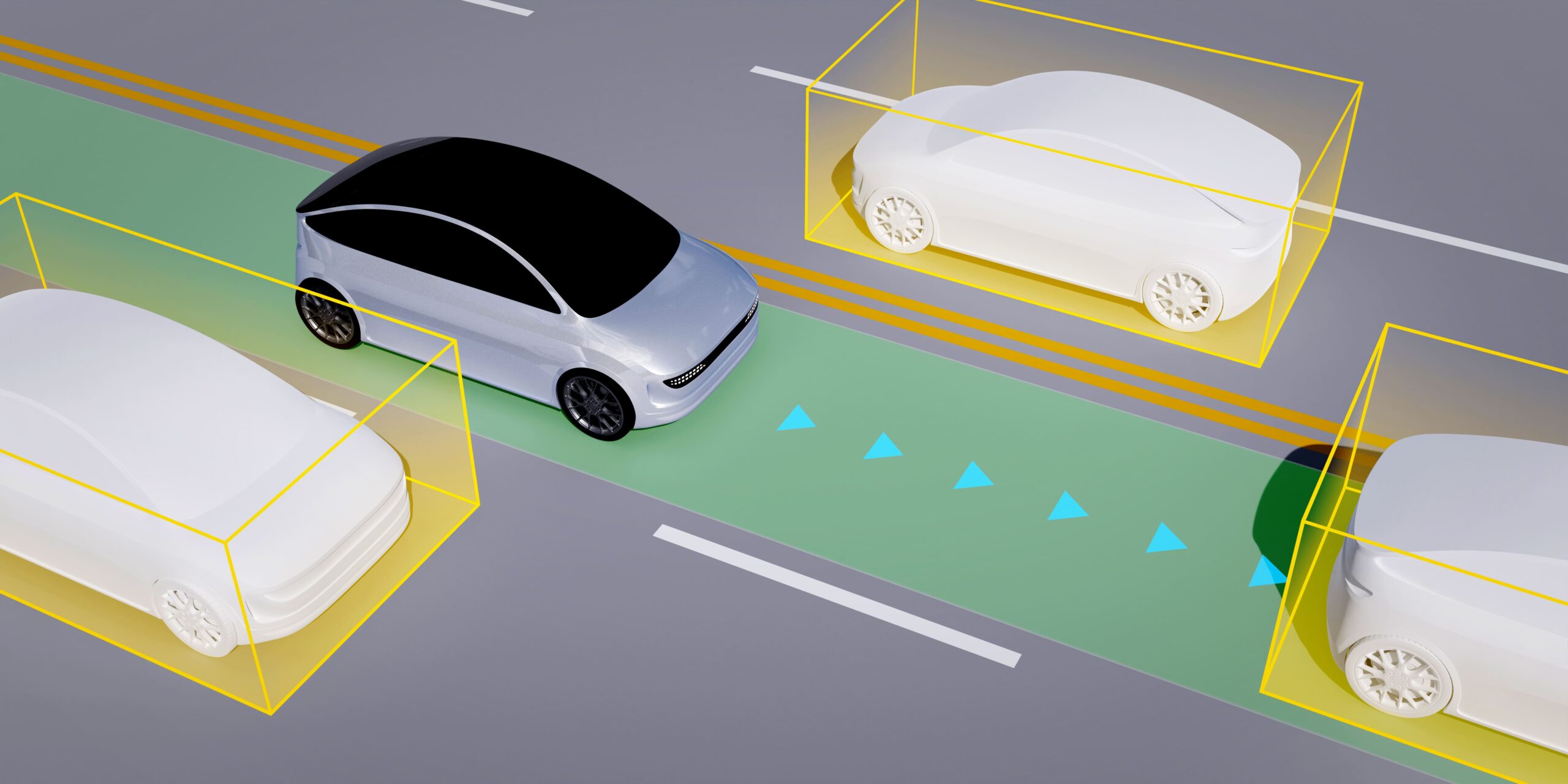

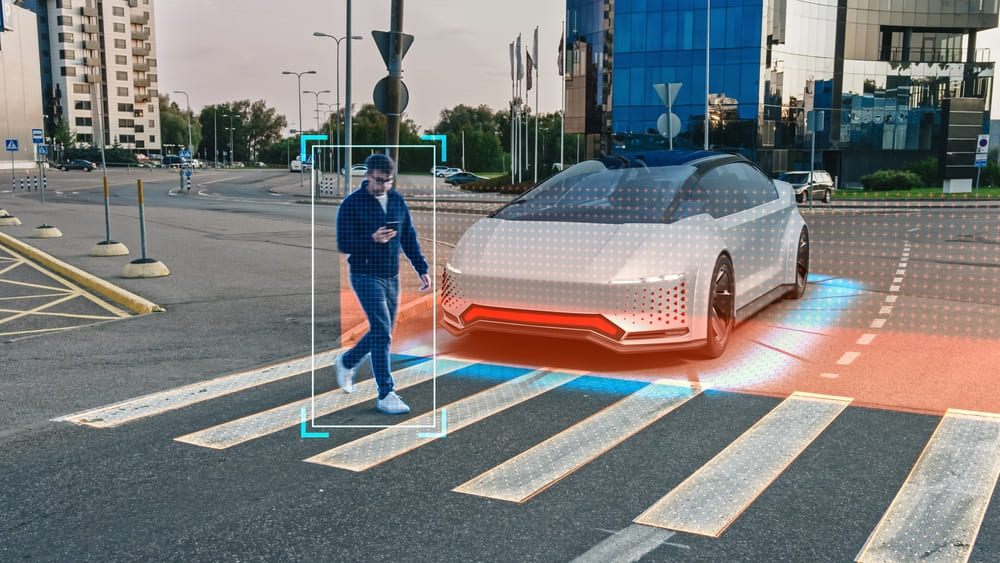

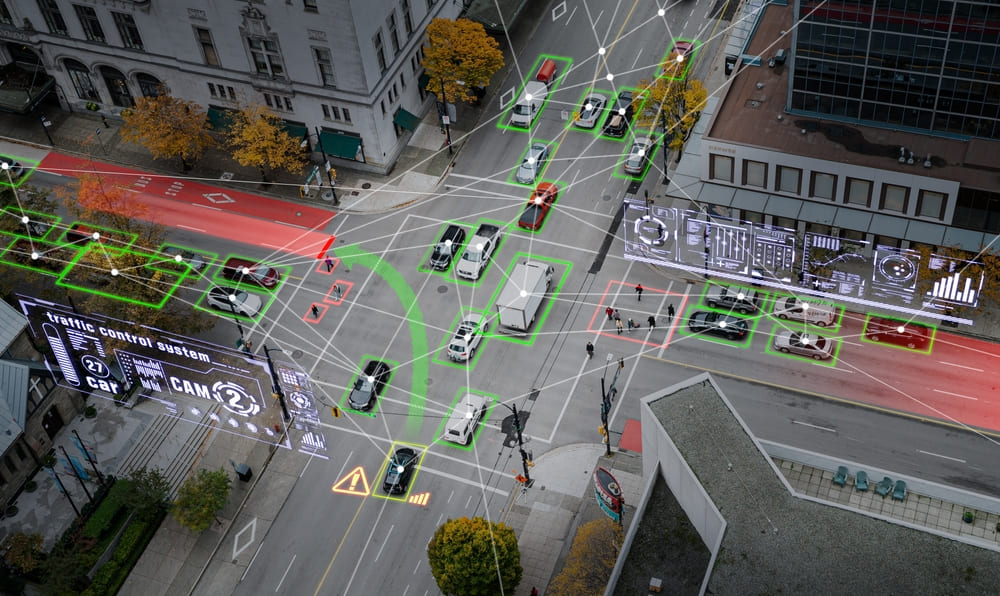

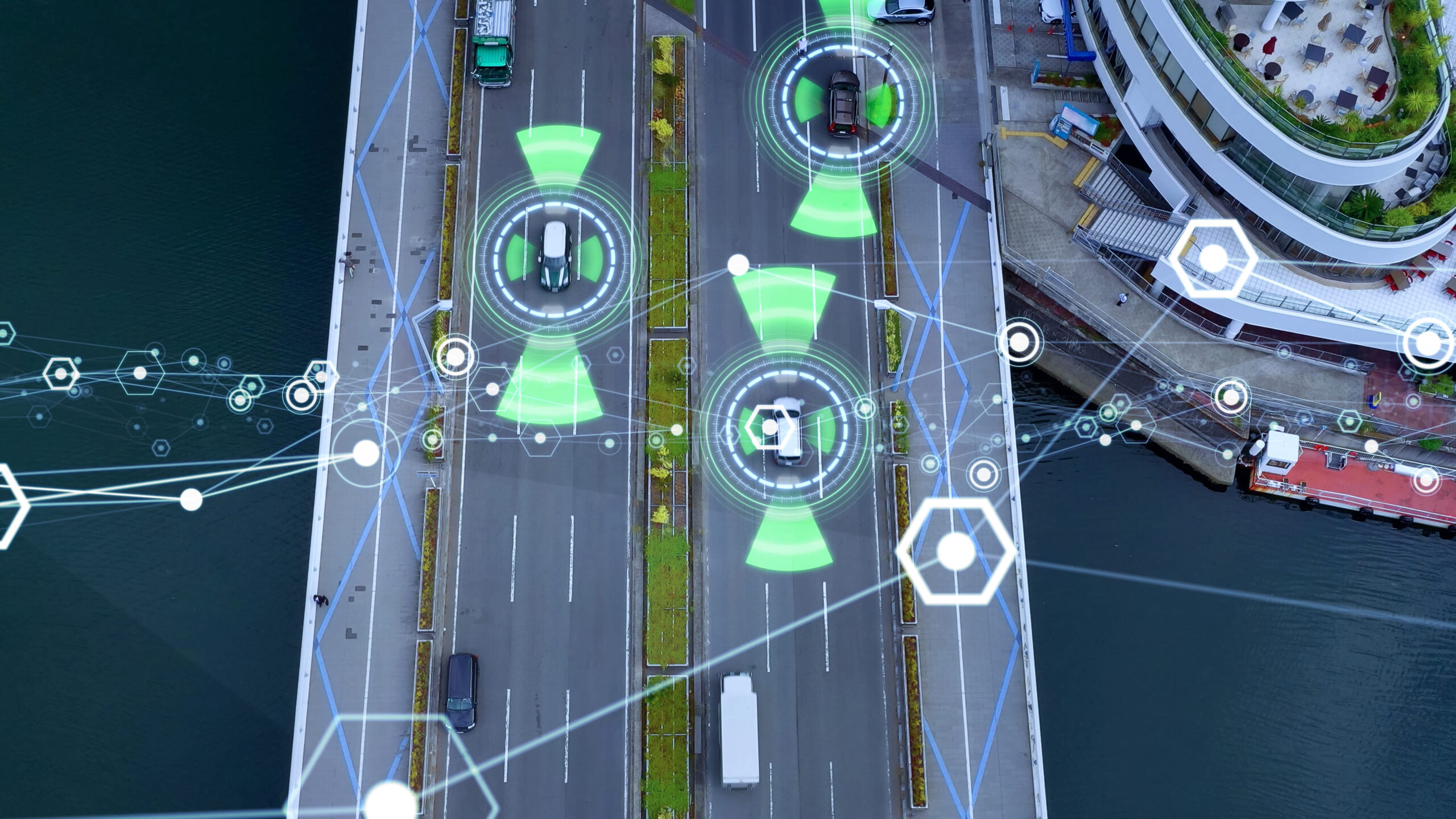

Enable autonomous vehicles to detect and track objects reliably by fusing camera, LiDAR, and radar data.

Build resilient perception systems by combining multiple sensors to maintain accuracy even when one sensor degrades or fails.

Improve AI performance in low-light, fog, rain, and occluded environments through multisensor data fusion.

Support ADAS features with fused sensor awareness for collision avoidance, lane keeping, and hazard detection.

Enable robots to navigate complex, unstructured spaces using unified sensor perception.

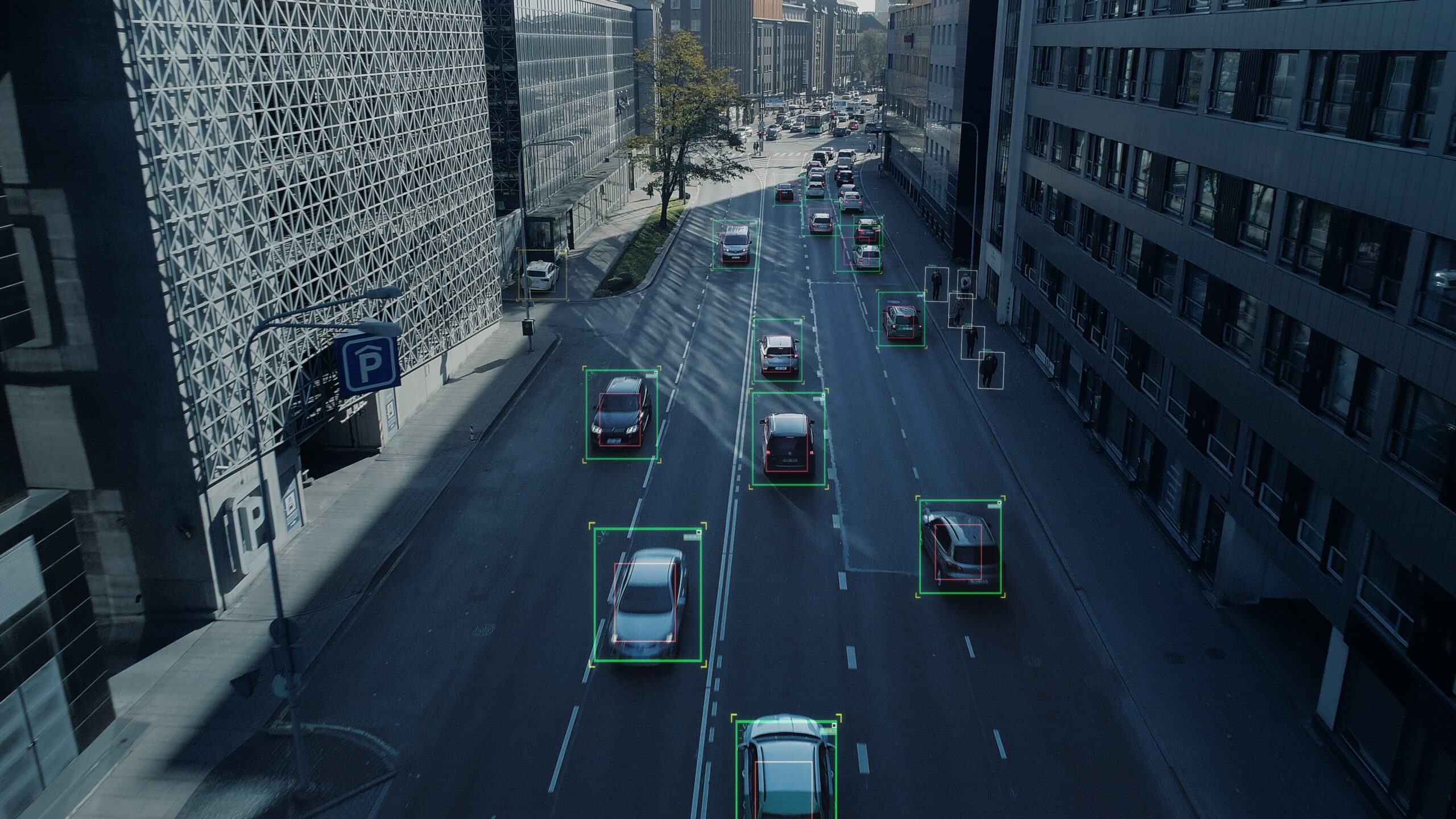

Enhance urban intelligence by analyzing traffic flow, congestion, and movement patterns using multisensor data.

Support situational awareness and threat detection through fused visual and spatial sensor inputs.

Enable automation systems with precise spatial understanding for monitoring, navigation, and operational efficiency.

Industries We Support

Autonomous Driving

Training perception systems with accurate depth and spatial understanding for safe navigation.

Government

Supporting surveillance, infrastructure monitoring, and defense intelligence initiatives.

Geospatial Intelligence

Annotating LiDAR data for mapping, urban planning, and environmental monitoring.

Retail & E-Commerce

Supporting spatial analytics and automation in warehouses and fulfillment centers.

What Our Clients Say

DDD’s multisensor fusion annotation significantly improved our detection accuracy in challenging conditions.

Their ability to align camera, LiDAR, and radar data was critical for our navigation stack.

DDD helped us build robust sensor-fusion datasets that performed consistently in real-world scenarios.

Security, precision, and consistency made DDD a trusted multisensor data partner.

Why Choose DDD?

Specialized annotation teams ensure precise cross-sensor alignment and consistent contextual labeling across modalities.

DDD’s Commitment to Security & Compliance

Your multisensor data is protected at every stage through rigorous global standards and secure operational infrastructure.

SOC 2 Type 2

ISO 27001

Holistic information security management with continuous audits

GDPR & HIPAA Compliance

Responsible handling of personal and sensitive data

TISAX Alignment

Read Our Latest Blogs

Challenges of Synchronizing and Labeling Multi-Sensor Data

This blog explores the critical challenges that organizations face in synchronizing and labeling multi-sensor data, and why solving them...

Multi-Sensor Data Fusion in Autonomous Vehicles — Challenges and Solutions

In this blog, we will discuss some of the challenges in fusing data from different sensors. At the same...

Human-Powered Multisensor Fusion for Real-World AI

Frequently Asked Questions

Multisensor fusion combines data from multiple sensors, such as cameras, LiDAR, radar, GPS, and IMUs, to create a unified and more reliable perception of the environment for AI systems.

Fusing multiple sensors improves accuracy, robustness, and reliability by compensating for the limitations of individual sensors, especially in complex or adverse conditions.

Unlike single-sensor annotation, multisensor fusion requires precise spatial and temporal alignment across datasets to ensure objects and events are consistently labeled across all sensor inputs.

We provide cross-sensor object annotation, spatial alignment, temporal synchronization, event labeling, and validation across fused datasets.

We use specialized tools, calibration workflows, timestamp validation, and multi-layer quality assurance to ensure precise spatial and temporal alignment.
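To make the idea of spatial alignment in these answers more concrete, here is a small sketch of how a single LiDAR point can be projected into a camera image once calibration is known. It assumes a simple pinhole camera without lens distortion; the function name `project_point` and all calibration values are hypothetical and only illustrate the general technique, not any specific DDD workflow.

```python
def project_point(point_lidar, rotation, translation, fx, fy, cx, cy):
    """Project one LiDAR point (x, y, z) into pixel coordinates.

    rotation (3x3 nested list) and translation (length 3) are the
    assumed extrinsics mapping LiDAR coordinates into the camera
    frame, where +z points forward. fx, fy, cx, cy are the assumed
    pinhole intrinsics. Lens distortion is ignored in this sketch.
    Returns (u, v), or None when the point lies behind the camera.
    """
    # Rigid transform into the camera frame: p_cam = R * p_lidar + t
    x, y, z = (
        sum(rotation[r][c] * point_lidar[c] for c in range(3)) + translation[r]
        for r in range(3)
    )
    if z <= 0:
        return None
    # Pinhole projection onto the image plane.
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical calibration: sensors share one frame (identity extrinsics).
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
pixel = project_point((1.0, 2.0, 4.0), identity, [0, 0, 0],
                      fx=100.0, fy=100.0, cx=50.0, cy=40.0)
```

If a projected point lands far from the object it belongs to in the image, that is a sign the calibration or the timestamps need rechecking before labels are shared across sensors.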