DDD Solutions Engineering Team

25 Aug, 2025

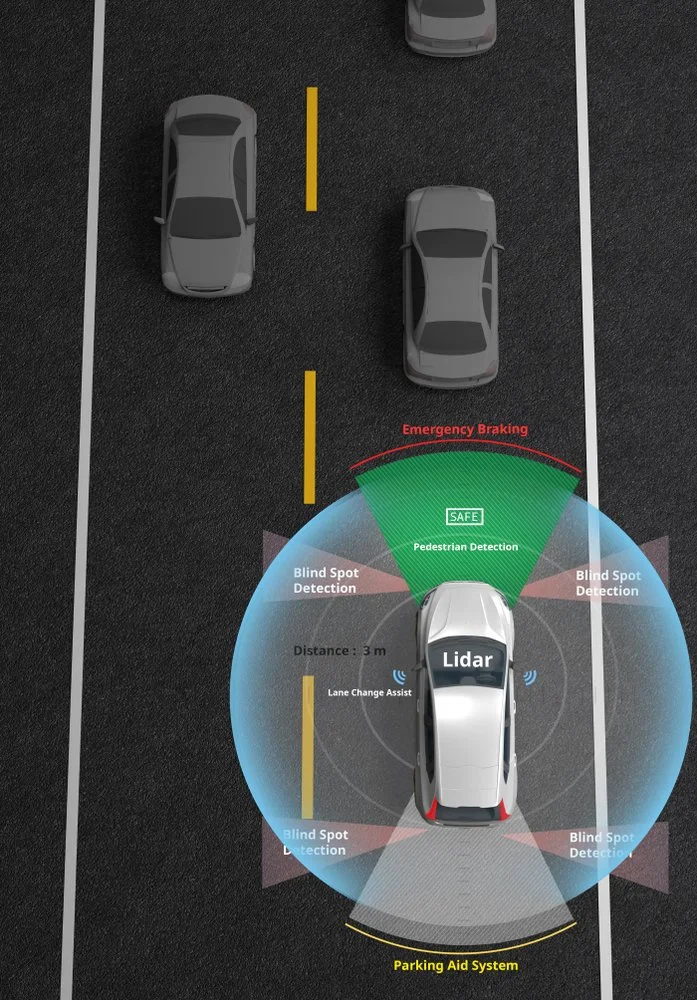

By combining data from cameras, LiDAR, radar, GPS, and inertial sensors, multi-sensor systems provide a more complete and reliable picture of the world than any single sensor can achieve. They are central to autonomous vehicles, humanoid robots, defense technology, and smart infrastructure, where safety and accuracy depend on capturing complex, real-world environments from multiple perspectives.

The power of sensor fusion lies in its ability to build redundancy and resilience into perception. If a camera struggles in low light, LiDAR can provide depth information. If LiDAR is degraded by rain, fog, or snow, radar can still deliver robust detection. Together, these technologies make decision-making systems more trustworthy and less prone to single points of failure.

However, the benefits of multi-sensor fusion are only realized if the data from different sensors can be synchronized and labeled correctly. Aligning multiple data streams in both time and space, and then ensuring that annotations remain consistent across modalities, has become one of the most difficult and resource-intensive challenges in deploying real-world AI systems.

This blog explores the critical challenges that organizations face in synchronizing and labeling multi-sensor data, and why solving them is essential for the future of autonomous and intelligent systems.

Why Synchronization in Multi-Sensor Data Matters

At the heart of multi-sensor perception lies the challenge of aligning data streams that operate at different speeds. Cameras often capture 30 frames per second, LiDAR systems may generate scans at 10 hertz, while inertial sensors produce hundreds of measurements each second. If these data streams are not carefully aligned, the system may attempt to interpret events that never occurred in the same moment, leading to a distorted view of reality.
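As a minimal sketch of one common way to pair streams that run at different rates, the snippet below matches each LiDAR scan to the camera frame with the nearest timestamp and rejects pairs that are too far apart. The function name `match_nearest`, the 20 ms tolerance, and the assumption that both streams are already stamped against a shared clock are illustrative choices, not details of any particular system.

```python
from bisect import bisect_left

def match_nearest(reference_ts, other_ts, max_gap_s):
    """For each reference timestamp, return the index of the closest
    timestamp in other_ts, or None if the gap exceeds max_gap_s.
    Both lists must be sorted ascending and expressed in seconds."""
    matches = []
    for t in reference_ts:
        i = bisect_left(other_ts, t)
        # The nearest neighbour is on one side of the insertion point.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(other_ts)]
        best = min(candidates, key=lambda j: abs(other_ts[j] - t), default=None)
        if best is not None and abs(other_ts[best] - t) <= max_gap_s:
            matches.append(best)
        else:
            matches.append(None)
    return matches

# Rates from the text: LiDAR at 10 Hz, camera at 30 frames per second.
lidar_ts = [k / 10 for k in range(5)]
camera_ts = [k / 30 for k in range(15)]
pairs = match_nearest(lidar_ts, camera_ts, max_gap_s=0.02)
print(pairs)  # every third camera frame lines up with a LiDAR scan
```

Nearest-neighbour matching is the simplest option. Systems that need tighter alignment often interpolate between samples or trigger sensors from a shared hardware clock instead.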

Each sensor has its own internal clock, and even small timing differences accumulate into significant errors over time. Transmission delays from hardware, networking, or processing pipelines add further uncertainty. A system that assumes perfect synchronization risks misjudging the position of an object by several meters simply because the data was captured at slightly different moments.
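To make the scale of the problem concrete, here is a small back-of-the-envelope sketch. The speed, offset, and drift figures are assumed values chosen for illustration, not measurements from a real platform.

```python
def position_error_m(relative_speed_mps, time_offset_s):
    """Apparent displacement of an object when two sensors observe it
    time_offset_s apart while it moves at relative_speed_mps."""
    return relative_speed_mps * time_offset_s

def clock_drift_s(drift_ppm, elapsed_s):
    """Accumulated offset of a free-running clock that drifts by
    drift_ppm parts per million."""
    return drift_ppm * 1e-6 * elapsed_s

# Assumed: 30 m/s relative speed (about 108 km/h) and a 100 ms offset.
error = position_error_m(30.0, 0.1)    # about 3 m
# Assumed: a 20 ppm oscillator left unsynchronized for one hour.
drift = clock_drift_s(20.0, 3600.0)    # about 72 ms
print(f"position error: {error:.1f} m, drift after 1 h: {drift * 1000:.0f} ms")
```

Under these assumptions, an unsynchronized clock drifts by tens of milliseconds within an hour, and at highway speeds that is enough to displace an object by meters.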

These misalignments have real-world consequences. A pedestrian detected by a camera but not yet seen by LiDAR may cause an autonomous vehicle to hesitate or make an unsafe maneuver. A drone navigating in windy conditions may miscalculate its trajectory if inertial and GPS signals are out of sync. In safety-critical systems, even millisecond errors can cascade into poor perception, faulty tracking, or incorrect predictions.

Synchronization is therefore not just a technical detail, but a foundation for trust. Without reliable alignment, sensor fusion cannot function as intended, and the entire perception pipeline becomes vulnerable to inaccuracies.

Spatial Alignment and Calibration in Multi-Sensor Data

Synchronizing sensors in time is only one part of the challenge. Equally important is ensuring that data from different devices aligns correctly in space. Each sensor operates in its own coordinate system, and without careful calibration, their outputs cannot be meaningfully combined.

Two kinds of calibration are essential. Intrinsic calibration deals with the internal properties of a sensor, such as correcting lens distortion in a camera or compensating for systematic measurement errors in a LiDAR. Extrinsic calibration focuses on the spatial relationship between sensors, defining how a camera’s view relates to the three-dimensional space captured by LiDAR or radar. Both must be accurate for multi-sensor fusion to function reliably.
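The two calibrations come together when a LiDAR point is projected into a camera image. The sketch below uses a plain pinhole model: the extrinsics (a rotation and translation) move the point into the camera frame, and the intrinsics (focal lengths and principal point) map it to pixels. Lens distortion is ignored, and the function name `project_point`, the identity extrinsics, and the intrinsic values are placeholders for illustration only.

```python
def project_point(point_lidar, rotation, translation, fx, fy, cx, cy):
    """Project a 3D LiDAR point to pixel coordinates.

    rotation (3x3 nested list) and translation (length 3) are the
    extrinsics that take LiDAR coordinates into the camera frame.
    fx, fy, cx, cy are the pinhole intrinsics in pixels.
    Returns (u, v), or None if the point lies behind the camera."""
    x, y, z = (
        sum(rotation[r][c] * point_lidar[c] for c in range(3)) + translation[r]
        for r in range(3)
    )
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)

# Placeholder calibration: both sensors share one frame (z points forward).
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pixel = project_point((1.0, 0.5, 10.0), IDENTITY, (0.0, 0.0, 0.0),
                      fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
print(pixel)  # (740.0, 410.0)
```

An error in either the rotation or the focal length shifts every projected point, which is why both calibrations have to be accurate before fusion is meaningful.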

The complexity grows when multiple modalities are involved. A camera provides a two-dimensional projection of the world, while LiDAR produces a sparse set of three-dimensional points. Radar adds another dimension by measuring velocity and distance with lower resolution. Mapping these diverse representations into a unified spatial frame is computationally demanding and highly sensitive to calibration errors.

In real-world deployments, calibration does not remain fixed. Vibrations from driving, temperature fluctuations, or even minor impacts can shift sensors slightly out of alignment. These small deviations may not be noticeable at first but can lead to substantial errors over time. Maintaining accurate calibration requires not only precise setup during installation but also periodic recalibration or the use of automated self-calibration techniques in the field.
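One simple way to notice calibration drift, sketched here under assumptions, is to track reprojection error: the pixel distance between where calibrated LiDAR points land in the image and where the same features are actually observed. The 2-pixel threshold and the function names are hypothetical, and how the matched pairs are obtained (for example from a calibration target) is left out.

```python
import math

def mean_reprojection_error(projected_px, observed_px):
    """Average pixel distance between projected and observed features."""
    errors = [math.dist(p, o) for p, o in zip(projected_px, observed_px)]
    return sum(errors) / len(errors)

def needs_recalibration(projected_px, observed_px, threshold_px=2.0):
    """Flag the sensor pair when the mean error exceeds the threshold."""
    return mean_reprojection_error(projected_px, observed_px) > threshold_px

projected = [(100.0, 100.0), (200.0, 200.0)]
observed = [(103.0, 104.0), (200.0, 200.0)]
print(mean_reprojection_error(projected, observed))  # 2.5
print(needs_recalibration(projected, observed))      # True
```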

Spatial alignment and calibration are therefore continuous challenges. Without them, synchronized data streams still fail to align, undermining the very foundation of multi-sensor perception.

Data Volume and Infrastructure Burden

Beyond synchronization and calibration, one of the most pressing challenges in multi-sensor systems is the sheer scale of data they generate. A single high-resolution camera can produce gigabytes of video in just a few minutes. Add multiple cameras, LiDAR scans containing hundreds of thousands of points, radar sweeps, GPS streams, and IMU data, and the result is terabytes of information being produced every day by a single platform.
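A rough estimate shows how quickly the numbers add up. Every figure below (six uncompressed 1080p cameras at 30 frames per second, one LiDAR producing 200,000 points per scan at 10 Hz, 16 bytes per point) is an assumed configuration for illustration, not a description of a specific platform.

```python
def camera_bytes_per_s(width, height, bytes_per_pixel, fps):
    """Raw, uncompressed data rate of one camera."""
    return width * height * bytes_per_pixel * fps

def lidar_bytes_per_s(points_per_scan, bytes_per_point, scans_per_s):
    """Raw data rate of one LiDAR."""
    return points_per_scan * bytes_per_point * scans_per_s

camera_rate = camera_bytes_per_s(1920, 1080, 3, 30)   # one RGB camera
lidar_rate = lidar_bytes_per_s(200_000, 16, 10)       # one LiDAR
platform_rate = 6 * camera_rate + lidar_rate          # assumed sensor suite
terabytes_per_hour = platform_rate * 3600 / 1e12
print(f"{terabytes_per_hour:.2f} TB per hour, uncompressed")
```

With these assumptions a single platform produces roughly 4 TB per hour before compression, which is why storage and bandwidth become constraints long before a fleet is deployed.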

This volume creates immediate infrastructure strain. Streaming large amounts of data in real time requires high-bandwidth networks, which may not always be available in the field. Storage quickly becomes a bottleneck as fleets or robotic systems scale up, forcing organizations to invest in specialized hardware and compression strategies to keep data manageable. Even after data is collected, replaying and analyzing synchronized streams can overwhelm conventional computing resources.

While handling the output of a single prototype system may be feasible, expanding to dozens or hundreds of units multiplies both the data volume and the engineering effort required to process it. Fleets of autonomous vehicles or large-scale robotic deployments demand infrastructure capable of handling synchronized multi-sensor data at an industrial scale.

Without a robust infrastructure for managing this data, synchronization and labeling efforts can stall before they begin. Effective solutions require not only technical methods for aligning and annotating data, but also scalable systems for moving, storing, and processing the information in the first place.

Labeling Across Modalities for Multi-Sensor Data

Once data streams are synchronized and calibrated, the next challenge is creating consistent labels across different sensor modalities. This task is far more complex than labeling a single dataset from one sensor type. A bounding box drawn around a vehicle in a two-dimensional camera image must accurately correspond to the same vehicle represented in a LiDAR point cloud or detected by radar. Any misalignment results in inconsistencies that weaken the training data and undermine model performance.
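A simple consistency check, sketched below, compares the 2D box an annotator drew in the camera image with the 2D footprint of the same object's 3D box after its corners are projected into that image. The projected corner coordinates, the 0.5 agreement threshold, and the helper names are made up for illustration; in practice the corners would come from the calibrated projection.

```python
def box_from_pixels(pixels):
    """Axis-aligned box (x_min, y_min, x_max, y_max) around pixel points."""
    us = [u for u, _ in pixels]
    vs = [v for _, v in pixels]
    return (min(us), min(vs), max(us), max(vs))

def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

camera_box = (100.0, 100.0, 200.0, 200.0)
# Hypothetical pixel positions of the eight projected 3D box corners.
projected_corners = [
    (110.0, 110.0), (210.0, 110.0), (110.0, 210.0), (210.0, 210.0),
    (120.0, 120.0), (200.0, 120.0), (120.0, 200.0), (200.0, 200.0),
]
agreement = iou(camera_box, box_from_pixels(projected_corners))
is_consistent = agreement >= 0.5  # assumed review threshold
print(f"IoU = {agreement:.2f}, consistent = {is_consistent}")
```

Pairs that fall below the threshold can be routed to a reviewer rather than silently entering the training set.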

The inherent differences between modalities add to the difficulty. Cameras capture dense, detailed images of a scene, while LiDAR provides a sparse but geometrically precise set of points. Radar contributes distance and velocity information, but with far less spatial resolution. Translating annotations across these diverse data types requires specialized tools and workflows to ensure that one object is labeled correctly everywhere it appears.

Human annotators face a significant cognitive load in this process. Interpreting and labeling fused data demands constant switching between modalities, perspectives, and representations. Unlike labeling a single image, multi-sensor annotation requires reasoning about depth, perspective, and cross-modality consistency simultaneously. Over time, this complexity can lead to fatigue, higher error rates, and inconsistencies across the dataset.

Accurate cross-modal labeling is essential for developing reliable perception systems. Without it, even perfectly synchronized and calibrated data cannot fulfill its potential, as the downstream models will struggle to learn meaningful representations of the real world.

Noise, Dropouts, and Edge Cases

Even when sensors are carefully synchronized and calibrated, their outputs are never perfectly clean. Each modality carries its own vulnerabilities. Cameras are affected by changes in lighting, glare, and shadows. LiDAR struggles with highly reflective or absorptive surfaces, producing gaps or spurious points. Radar can be confused by multipath reflections or interference in complex environments. These imperfections introduce uncertainty that complicates both synchronization and labeling.

Temporary sensor failures, or dropouts, create additional challenges. In real-world deployments, a camera may briefly lose exposure control, a LiDAR might skip a frame, or a radar might fail to return usable signals. When one sensor drops out, aligning and labeling across modalities can no longer be done consistently, and downstream models must compensate for incomplete inputs. Reconstructing reliable data streams under these conditions is difficult and often requires fallback strategies.
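Detecting a dropout is often the first step toward handling it. The sketch below flags gaps in a stream of timestamps that are noticeably longer than the expected sampling period. The function name `find_dropouts` and the 1.5x tolerance are assumptions chosen for illustration.

```python
def find_dropouts(timestamps, expected_period_s, tolerance=1.5):
    """Return (start, end) pairs where consecutive timestamps are further
    apart than expected_period_s * tolerance."""
    gaps = []
    for prev, curr in zip(timestamps, timestamps[1:]):
        if curr - prev > expected_period_s * tolerance:
            gaps.append((prev, curr))
    return gaps

# A 10 Hz LiDAR stream with the scan at t = 0.3 s missing.
lidar_ts = [0.0, 0.1, 0.2, 0.4, 0.5]
print(find_dropouts(lidar_ts, expected_period_s=0.1))  # [(0.2, 0.4)]
```

Flagged intervals can then be excluded from fusion, filled by interpolation, or marked so that annotators know one modality is missing.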

Edge cases amplify these issues. Rare scenarios such as unusual weather conditions, fast-moving objects, or crowded environments test the limits of both the sensors and the synchronization pipelines. These cases often expose weaknesses that remain hidden in controlled testing, yet they are precisely the scenarios that autonomous and robotic systems must handle reliably.

Addressing noise, dropouts, and edge cases is therefore not optional but central to building trust in multi-sensor systems. Without robust strategies to manage imperfections, synchronized and labeled data will fail to represent the realities of deployment environments.

Generating Reliable Ground Truth

Reliable ground truth is the benchmark against which perception systems are trained and evaluated. In the context of multi-sensor data, producing this ground truth is particularly demanding because it requires consistency across time, space, and modalities. Unlike single-sensor datasets, where annotations can be applied directly to a single stream, multi-sensor setups demand multi-stage pipelines that ensure alignment between different forms of representation.

Creating such pipelines involves carefully cross-checking annotations across modalities. A pedestrian labeled in a camera image must be accurately linked to the corresponding points in LiDAR and any detections from radar. These checks are not simply clerical but essential to prevent systematic labeling errors from cascading through entire datasets. Each stage adds cost, complexity, and the need for rigorous quality assurance.
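One building block of such a cross-check is linking a 2D camera box to the LiDAR points that fall inside it once they have been projected into the image. The sketch below assumes the projection has already been done and that points behind the camera are represented as `None`. The names and coordinates are hypothetical.

```python
def indices_in_box(projected_px, box):
    """Indices of projected LiDAR points that land inside a 2D box
    given as (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    return [
        i for i, px in enumerate(projected_px)
        if px is not None
        and x_min <= px[0] <= x_max
        and y_min <= px[1] <= y_max
    ]

pedestrian_box = (300, 150, 360, 330)
projected = [(310, 200), (500, 220), None, (355, 329), (361, 200)]
linked = indices_in_box(projected, pedestrian_box)
print(linked)  # [0, 3]
```

This selection also picks up background points that happen to project inside the box, so a real pipeline would typically add a depth or clustering step before accepting the link.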

Dynamic scenes make this process even more complex. Fast-moving objects, occlusions, and overlapping trajectories can cause labels to become inconsistent across frames and modalities. Ensuring temporal continuity while maintaining spatial precision requires sophisticated workflows that combine automated assistance with human oversight.

Uncertainty is another factor that cannot be ignored. Some scenarios do not allow for precise labeling, such as partially visible objects or sensor measurements degraded by noise. Forcing deterministic labels in such cases risks introducing artificial precision that misleads the model. Representing uncertainty, whether through probabilistic annotations or confidence scores, provides a more realistic foundation for training and evaluation.
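As one possible way to carry uncertainty through a labeling pipeline, the sketch below attaches a confidence score and a visibility estimate to each annotation and turns the confidence into a training weight. The schema, field names, and weighting rule are illustrative assumptions rather than an established standard.

```python
from dataclasses import dataclass

@dataclass
class UncertainLabel:
    object_id: str
    category: str
    confidence: float              # 0.0 (pure guess) to 1.0 (certain)
    visible_fraction: float = 1.0  # share of the object that is not occluded

    def __post_init__(self):
        for name in ("confidence", "visible_fraction"):
            value = getattr(self, name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be in [0, 1], got {value}")

def sample_weight(label, min_confidence=0.2):
    """Down-weight uncertain labels and drop those below min_confidence."""
    if label.confidence < min_confidence:
        return 0.0
    return label.confidence

clear = UncertainLabel("ped-1", "pedestrian", confidence=0.9)
occluded = UncertainLabel("ped-2", "pedestrian", confidence=0.1, visible_fraction=0.3)
print(sample_weight(clear), sample_weight(occluded))  # 0.9 0.0
```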

Reliable ground truth is therefore not just a product of annotation but a process of validation, consistency checking, and uncertainty management. Without this level of rigor, synchronized and calibrated multi-sensor data cannot be fully trusted to support safe and scalable AI systems.

Tooling and Standardization Challenges of Multi-Sensor Data

Even with synchronization, calibration, and careful labeling in place, the practical work of managing multi-sensor data is often slowed by limitations in tooling and a lack of standardization. Most annotation and processing tools were designed for single modalities, such as 2D image labeling or 3D point cloud analysis, and are not well-suited to handling both simultaneously. This forces teams to work with fragmented toolchains, exporting data from one platform and re-importing it into another, which increases complexity and the risk of errors.

The absence of widely accepted standards compounds this issue. Different organizations and industries frequently adopt proprietary data formats, labeling schemas, and metadata conventions. As a result, datasets cannot be easily shared or reused across projects, and tooling built for one environment often cannot be applied in another without significant adaptation. This lack of interoperability slows research, inflates costs, and reduces opportunities for collaboration.

Operational scaling brings another layer of difficulty. Managing multi-sensor synchronization and labeling across a small pilot project is one challenge, but doing so across hundreds of vehicles, drones, or industrial robots requires infrastructure that is both robust and flexible. Automated validation pipelines, scalable data storage, and consistent quality control processes must be in place to handle the growth, yet many existing toolsets are not designed to support such scale.

Without better tools and stronger standards, the gap between research prototypes and deployable systems will remain wide. Closing this gap is essential to make multi-sensor synchronization and labeling both efficient and repeatable in real-world applications.

Emerging Solutions for Multi-Sensor Data

Despite the challenges, promising solutions are beginning to reshape how organizations approach multi-sensor synchronization and labeling.

Using automation and self-supervised methods

Algorithms can now align data streams by detecting common features across modalities, reducing reliance on manual calibration and lowering the risk of drift in long-term deployments. These approaches are particularly valuable for large-scale systems where manual recalibration is impractical.
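As a much simpler stand-in for the feature-based methods described above, the sketch below estimates the time offset between two sensors by sliding one signal against the other and keeping the lag with the highest correlation. It assumes both sensors observe the same motion signal (for example a turn rate), sampled at the same rate. The function name and sample data are hypothetical.

```python
def best_lag(signal_a, signal_b, max_lag):
    """Return the lag (in samples) for which signal_b[i + lag] best matches
    signal_a[i], searching lags from -max_lag to +max_lag.
    max_lag must be smaller than the length of either signal."""
    def score(lag):
        pairs = [
            (signal_a[i], signal_b[i + lag])
            for i in range(len(signal_a))
            if 0 <= i + lag < len(signal_b)
        ]
        # Mean product over the overlapping samples.
        return sum(a * b for a, b in pairs) / len(pairs)
    return max(range(-max_lag, max_lag + 1), key=score)

# The same motion pulse, seen two samples later by the second sensor.
imu_rate = [0, 0, 1, 3, 1, 0, 0, 0]
camera_rate = [0, 0, 0, 0, 1, 3, 1, 0]
print(best_lag(imu_rate, camera_rate, max_lag=3))  # 2
```

Multiplying the lag by the sampling period gives the time offset to apply. Production systems usually refine this with sub-sample interpolation and mean-removed, normalized correlation.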

Integrated annotation environments

Instead of forcing annotators to switch between 2D image tools and 3D point cloud platforms, object-centric systems allow a single label to propagate across modalities automatically. This not only improves consistency but also reduces cognitive load, making large annotation projects more efficient and less error-prone.
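The core idea of an object-centric label can be sketched as a single record that owns the object's identity and category, with each sensor contributing only its own geometry. The class, field names, and sensor names below are hypothetical and do not reflect the schema of any particular annotation tool.

```python
from dataclasses import dataclass, field

@dataclass
class ObjectLabel:
    object_id: str
    category: str
    geometry: dict = field(default_factory=dict)  # sensor name -> shape

    def attach(self, sensor, shape):
        """Record how this object appears in one sensor's data."""
        self.geometry[sensor] = shape

    def view(self, sensor):
        """Per-sensor annotation, always built from the shared fields."""
        return {
            "object_id": self.object_id,
            "category": self.category,
            "shape": self.geometry[sensor],
        }

car = ObjectLabel("obj-17", "car")
car.attach("camera_front", {"box_2d": (100, 100, 200, 200)})
car.attach("lidar_top", {"center_xyz": (12.0, -1.5, 0.8), "size_lwh": (4.5, 1.8, 1.5)})

car.category = "truck"  # one correction, made once
print(car.view("camera_front")["category"], car.view("lidar_top")["category"])
```

Because every per-sensor view is derived from the same record, a category correction cannot leave the camera and LiDAR annotations disagreeing. Deriving one sensor's geometry from another still depends on calibration and is not shown here.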

Synthetic and simulation-based data

Digital twins enable testing of synchronization and labeling workflows under controlled conditions, where variables such as sensor noise, lighting, and weather can be manipulated without risk. While synthetic data cannot fully replace real-world examples, it plays an important role in filling gaps and stress-testing systems before deployment.
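A very small version of this idea is to inject controlled timing noise into clean timestamps and observe how a synchronization pipeline copes. The sketch below adds zero-mean Gaussian jitter with a fixed seed so that runs are repeatable. The 5 ms standard deviation and the function names are assumed for illustration.

```python
import random

def add_jitter(timestamps, sigma_s, seed=0):
    """Return a copy of timestamps with zero-mean Gaussian noise added.
    A fixed seed keeps the perturbation reproducible between runs."""
    rng = random.Random(seed)
    return [t + rng.gauss(0.0, sigma_s) for t in timestamps]

def worst_offset_s(clean, jittered):
    """Largest absolute timing error introduced by the jitter."""
    return max(abs(c - j) for c, j in zip(clean, jittered))

clean_ts = [k / 10 for k in range(100)]                  # ideal 10 Hz stream
noisy_ts = add_jitter(clean_ts, sigma_s=0.005, seed=42)  # assumed 5 ms jitter
print(f"worst timing error: {worst_offset_s(clean_ts, noisy_ts) * 1000:.1f} ms")
```

Feeding the perturbed timestamps through the same matching and validation steps used on real data shows how much timing noise the pipeline tolerates before pairs start to be rejected.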

Standardization efforts

Finally, there is momentum toward standardization. Industry and research communities are working to define common data formats, labeling conventions, and interoperability protocols. Such efforts are essential to break down silos, enable collaboration, and accelerate progress across sectors.

Looking forward, these innovations point to a future where synchronization and labeling become less of a bottleneck and more of a streamlined, repeatable process. As methods mature, multi-sensor AI systems will gain the reliability and scalability needed to support autonomy, robotics, and other mission-critical applications at scale.

How We Can Help

Digital Divide Data (DDD) supports organizations in overcoming the practical hurdles of synchronizing and labeling multi-sensor data. Our expertise lies in managing the complexity of multi-modal annotation at scale, ensuring that datasets are both consistent and production-ready.

Our teams are trained to handle cross-modality challenges, linking objects seamlessly across camera images, LiDAR point clouds, and radar data. By combining skilled human annotators with workflow automation and quality control systems, DDD reduces errors and accelerates turnaround times. This approach allows clients to focus on advancing their models rather than struggling with fragmented or inconsistent datasets.

Conclusion

Synchronizing and labeling multi-sensor data is one of the most critical challenges in building trustworthy perception systems. The technical hurdles span temporal alignment, spatial calibration, data volume management, cross-modal labeling, and resilience against noise and dropouts. Each layer introduces complexity, yet each is essential for ensuring that downstream models receive accurate, consistent, and reliable information.

Success in this space requires balancing technical innovation with operational discipline. Advances in automation, integrated annotation platforms, and synthetic data are helping to reduce manual effort and error rates. At the same time, organizations must adopt rigorous pipelines, scalable infrastructure, and clear quality standards to handle the realities of deployment at scale.

As these solutions mature, the industry is steadily moving away from treating synchronization and labeling as fragile bottlenecks. Instead, they are becoming core enablers of multi-sensor AI systems that can be trusted to operate in safety-critical domains such as autonomous vehicles, robotics, and defense. With robust foundations in place, multi-sensor perception will shift from a research challenge to a reliable backbone for intelligent systems in the real world.

Partner with Digital Divide Data to build the reliable data foundation your autonomous, robotic, and defense applications need.

FAQs

How do organizations typically validate synchronization quality in multi-sensor systems?

Validation often involves using calibration targets, reference environments, or benchmarking against high-precision ground truth systems. Some organizations also employ automated scripts that check for time or spatial inconsistencies across modalities.

What role does edge computing play in managing multi-sensor data?

Edge computing enables preprocessing and synchronization closer to where data is collected. This reduces bandwidth requirements, lowers latency, and ensures that only refined or partially fused data is transmitted to central systems for further analysis.

Are there cost considerations unique to multi-sensor labeling projects?

Yes. Multi-sensor labeling is more resource-intensive than single-modality annotation due to the added complexity of ensuring cross-modal consistency. Costs are influenced by the number of modalities, annotation complexity, and the need for specialized tooling.

Can machine learning models assist in reducing human effort for cross-modal labeling?

They can. Automated pre-labeling and self-supervised methods can generate initial annotations that are then refined by human annotators. This hybrid approach reduces time and improves efficiency, although quality control remains essential.

What industries outside of autonomous driving benefit most from multi-sensor synchronization and labeling?

Defense systems, industrial robotics, logistics, smart infrastructure, and even healthcare imaging applications benefit from synchronized and consistently labeled multi-sensor data, as they all rely on robust perception under varied conditions.

How often should multi-sensor systems be recalibrated in real-world deployments?

The frequency depends on the environment and use case. Mobile platforms exposed to vibration or temperature changes may require frequent recalibration, while static installations can operate with less frequent adjustments. Automated recalibration methods are increasingly being used to reduce downtime.