DDD Solutions Engineering Team

July 15, 2025

Autonomous systems rely on their ability to perceive and interpret the world around them accurately and resiliently. To achieve this, modern perception stacks increasingly depend on data from multiple sensor modalities, particularly LiDAR, RADAR, and cameras. Each of these sensors brings unique strengths: LiDAR offers precise 3D spatial data, RADAR excels in detecting objects under poor lighting or adverse weather, and cameras provide rich visual detail and semantic context. However, the true potential of these sensors is unlocked only when their inputs are combined effectively through synchronized, high-quality data annotation.

Multi-modal annotation requires more than simply labeling data from different sensors. It requires precise spatial and temporal alignment, calibration across coordinate systems, handling discrepancies in resolution and frequency, and developing workflows that can consistently handle large-scale data. The problem becomes even more difficult in dynamic environments, where occlusions, motion blur, or environmental noise can lead to inconsistencies across sensor readings.

This blog explores multi-modal data annotation for autonomy, focusing on the synchronization of LiDAR, RADAR, and camera inputs. It provides a deep dive into the challenges of aligning sensor streams, the latest strategies for achieving temporal and spatial calibration, and the practical techniques for fusing and labeling data at scale. It also highlights real-world applications, fusion frameworks, and annotation best practices that are shaping the future of autonomous systems across industries such as automotive, robotics, aerial mapping, and surveillance.

Why Multi-Modal Sensor Fusion is Important

Modern autonomous systems operate in diverse and often unpredictable environments, from urban streets with heavy traffic to warehouses with dynamic obstacles and limited lighting. Relying on a single type of sensor is rarely sufficient to capture all the necessary environmental cues. Each sensor type has inherent limitations: cameras struggle in low-light conditions, LiDAR can be affected by fog or rain, and RADAR, while robust in bad weather, lacks fine-grained spatial detail. Sensor fusion addresses these gaps by combining the complementary strengths of multiple modalities, enabling more reliable and context-aware perception.

LiDAR provides dense 3D point clouds that are highly accurate for mapping and localization, particularly useful in estimating depth and object geometry. RADAR contributes reliable measurements of velocity and range, performing well in adverse weather where other sensors may fail. Cameras add rich semantic understanding of the scene, capturing textures, colors, and object classes that are critical for tasks like traffic sign recognition and lane detection. By fusing data from these sensors, systems can form a more comprehensive and redundant view of the environment.

This fusion is particularly valuable for safety-critical applications. In autonomous vehicles, for example, sensor redundancy is essential for handling edge cases: unusual or rare situations in which a single sensor may misinterpret the scene. A RADAR might detect a metal object hidden in shadow, which a camera might miss due to poor lighting. A LiDAR might capture the exact 3D contour of an object that RADAR detects only as a motion vector. Combining these views improves object classification accuracy, reduces false positives, and allows for better predictive modeling of moving objects.

Beyond transportation, sensor fusion also plays a key role in domains such as robotics, smart infrastructure, aerial mapping, and defense. Indoor robots navigating warehouse floors benefit from synchronized RADAR and LiDAR inputs to avoid collisions. Drones flying in mixed lighting conditions can rely on RADAR for obstacle detection while using cameras for visual mapping. Surveillance systems can use fusion to detect and classify objects accurately, even in rain or darkness.

This makes synchronized data annotation not just a technical necessity but a foundational requirement. Poorly aligned or inconsistently labeled data can degrade model performance, create safety risks, and increase the cost of re-training. In the next section, we examine why this annotation process is so challenging and what makes it a key bottleneck in building robust, sensor-fused systems.

Challenges in Multi-Sensor Data Annotation

Creating reliable multi-modal datasets requires more than just capturing data from LiDAR, RADAR, and cameras. The true challenge lies in synchronizing and annotating this data in a way that maintains spatial and temporal coherence across modalities. These challenges span hardware limitations, data representation discrepancies, calibration inaccuracies, and practical workflow constraints that scale with data volume.

Temporal Misalignment: Different sensors operate at different frequencies and latencies. LiDAR may capture data at 10 Hz, RADAR at 20 Hz, and cameras at 30 or even 60 Hz. Synchronizing these streams in time is critical, especially in dynamic environments with moving objects. A timing offset of even a few milliseconds can misalign annotations across modalities, introducing label errors that carry through into the performance of models trained on them.
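
To make the effect of timing offsets concrete, the following minimal sketch pairs each LiDAR sweep with the nearest camera frame and estimates how far an object travels during the leftover offset. The function names, the 5 ms camera latency, and the 15 m/s object speed are illustrative assumptions, not values from any particular sensor rig.

```python
from bisect import bisect_left

def nearest_match(ref_times, other_times, tolerance_s):
    """Pair each reference timestamp with the nearest one in other_times.

    other_times must be sorted and non-empty. Each result is
    (ref_t, other_t, offset_s), or None when the nearest timestamp is
    further away than tolerance_s.
    """
    matches = []
    for t in ref_times:
        i = bisect_left(other_times, t)
        # Only the neighbours on either side of the insertion point can be nearest.
        candidates = other_times[max(i - 1, 0): i + 1]
        best = min(candidates, key=lambda c: abs(c - t))
        offset = best - t
        matches.append((t, best, offset) if abs(offset) <= tolerance_s else None)
    return matches

def displacement_m(speed_mps, offset_s):
    """Distance an object moves during a residual timing offset."""
    return speed_mps * abs(offset_s)

# Assumed rates: LiDAR at 10 Hz, camera at 30 Hz with a 5 ms latency.
lidar_times = [k * 0.1 for k in range(5)]
camera_times = [0.005 + k / 30 for k in range(15)]

matches = nearest_match(lidar_times, camera_times, tolerance_s=0.02)
for ref_t, cam_t, offset in matches:
    shift = displacement_m(15.0, offset)
    print(f"lidar {ref_t:.3f}s -> camera {cam_t:.3f}s, object shift {shift:.3f} m")
```

Under these assumptions, a 5 ms offset already moves a 15 m/s object by 7.5 cm between the two views, which is enough to visibly shift a tight bounding box.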

Spatial Calibration: Each sensor occupies a different physical position on the vehicle or robot, with its own frame of reference. Accurately transforming data between these coordinate systems (camera images, LiDAR point clouds, and RADAR reflections) requires meticulous intrinsic and extrinsic calibration. Even small calibration errors can cause significant inconsistencies, such as bounding boxes that appear correct in one modality but are misaligned in another. These discrepancies undermine the integrity of fused annotations and reduce the effectiveness of perception models trained on them.
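
As a rough illustration of how a small extrinsic error grows, the sketch below estimates the image shift and the lateral 3D error caused by a yaw misalignment between LiDAR and camera. It assumes an ideal pinhole camera and a point near the optical axis. The 0.5 degree error, 1000 px focal length, and 50 m range are hypothetical values chosen for the example.

```python
import math

def yaw_error_effect(yaw_error_deg, focal_px, range_m):
    """Approximate effect of a yaw calibration error for a point near the optical axis.

    Returns (pixel_shift, lateral_error_m): how far the projected point moves
    in the image, and how far off it lands sideways at the given range.
    """
    theta = math.radians(yaw_error_deg)
    pixel_shift = focal_px * math.tan(theta)
    lateral_error_m = range_m * math.tan(theta)
    return pixel_shift, lateral_error_m

pixel_shift, lateral_error_m = yaw_error_effect(0.5, focal_px=1000.0, range_m=50.0)
print(f"{pixel_shift:.1f} px shift, {lateral_error_m:.2f} m lateral error")
# Roughly 8.7 px in the image and 0.44 m sideways at 50 m.
```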

Heterogeneity of Sensor Data: Cameras output 2D image grids with RGB values, LiDAR provides sparse or dense 3D point clouds, and RADAR offers a different type of 3D or 4D data that is often noisier and lower in resolution but includes velocity information. Designing annotation pipelines that can handle this variety of data formats and fuse them meaningfully is non-trivial. Moreover, each modality perceives the environment differently: transparent or reflective surfaces may be captured by cameras but not by LiDAR, and small or non-metallic objects may be missed by RADAR altogether.
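
One way to keep these differing formats manageable is to wrap each modality in its own small container and group them into a single synchronized sample. The sketch below is only an illustrative data model. All class and field names are hypothetical and do not correspond to any specific dataset format.

```python
from dataclasses import dataclass

@dataclass
class CameraFrame:
    timestamp_s: float
    width: int
    height: int

@dataclass
class LidarSweep:
    timestamp_s: float
    points_xyz: list  # [(x, y, z), ...] in the LiDAR frame

@dataclass
class RadarScan:
    timestamp_s: float
    detections: list  # [(x, y, z, radial_velocity_mps), ...]

@dataclass
class FusedSample:
    """One annotation unit: the three sensor readings closest in time."""
    camera: CameraFrame
    lidar: LidarSweep
    radar: RadarScan

    def max_time_spread_s(self):
        """Largest timestamp gap between any two modalities in this sample."""
        times = [self.camera.timestamp_s, self.lidar.timestamp_s, self.radar.timestamp_s]
        return max(times) - min(times)

sample = FusedSample(
    camera=CameraFrame(timestamp_s=10.005, width=1920, height=1080),
    lidar=LidarSweep(timestamp_s=10.000, points_xyz=[(12.0, 0.5, -0.2)]),
    radar=RadarScan(timestamp_s=10.020, detections=[(12.1, 0.4, 0.0, -3.2)]),
)
print(f"time spread: {sample.max_time_spread_s() * 1000:.0f} ms")
```

Carrying the per-modality timestamps along with the data lets later stages reject samples whose spread is too large to annotate reliably.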

Scale of Annotation: Autonomous systems collect vast amounts of data across thousands of hours of driving or operation. Annotating this data manually is prohibitively expensive and time-consuming, especially when high-resolution 3D data is involved. Creating accurate annotations across all modalities requires specialized tools and domain expertise, often involving a combination of human effort, automation, and validation loops.

Quality Control and Consistency: Annotators must maintain uniform labeling across modalities and frames, which is challenging when occlusions or environmental conditions degrade visibility. For example, an object visible in RADAR and LiDAR might be partially occluded in the camera view, leading to inconsistent labels if the annotator is not equipped with a fused perspective. Without robust QA workflows and annotation standards, dataset noise can slip into training pipelines, affecting model reliability in edge cases.

Data Annotation and Fusion Techniques for Multi-modal Data

Effective multi-modal data annotation is inseparable from how well sensor inputs are fused. Synchronization is not just about matching timestamps; it’s about aligning data with different sampling rates, coordinate systems, noise profiles, and detection characteristics. Over the past few years, several techniques and frameworks have emerged to handle the complexity of fusing LiDAR, RADAR, and camera inputs at both the data and model levels.

Time Synchronization: Hardware-based synchronization using shared clocks or protocols like PTP (Precision Time Protocol) is ideal, especially for systems where sensors are integrated into a single rig. In cases where that’s not feasible, software-based alignment using timestamp interpolation can be used, often supported by GPS/IMU signals for temporal correction. Some recent datasets, like OmniHD-Scenes and NTU4DRadLM, include such synchronization mechanisms by default, making them a strong foundation for fusion-ready annotations.
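
Software-based alignment by timestamp interpolation can be sketched as follows: given a log of poses from a GPS/IMU unit, estimate the pose at the exact moment another sensor fired. This is a simplified 2D example using linear interpolation. The function name and pose layout `(x, y, yaw)` are assumptions made for illustration.

```python
import math
from bisect import bisect_right

def interpolate_pose(pose_times, poses, query_t):
    """Linearly interpolate an (x, y, yaw_rad) pose at query_t.

    pose_times must be sorted, strictly increasing, and hold at least two entries.
    """
    if not pose_times[0] <= query_t <= pose_times[-1]:
        raise ValueError("query time outside the pose log; refusing to extrapolate")
    hi = min(bisect_right(pose_times, query_t), len(pose_times) - 1)
    lo = hi - 1
    alpha = (query_t - pose_times[lo]) / (pose_times[hi] - pose_times[lo])
    x0, y0, yaw0 = poses[lo]
    x1, y1, yaw1 = poses[hi]
    # Interpolate yaw along the shortest arc so that +pi / -pi wrap-around is handled.
    dyaw = (yaw1 - yaw0 + math.pi) % (2 * math.pi) - math.pi
    return (x0 + alpha * (x1 - x0), y0 + alpha * (y1 - y0), yaw0 + alpha * dyaw)

# Poses logged at 10 Hz; a camera frame arrives 25 ms after the first pose.
pose_times = [0.0, 0.1]
poses = [(0.0, 0.0, 0.0), (1.0, 0.2, 0.1)]
pose_at_frame = interpolate_pose(pose_times, poses, 0.025)
print(pose_at_frame)
```

Linear interpolation is only a reasonable approximation when the pose log is much denser than the motion dynamics. Refusing to extrapolate keeps stale poses from silently leaking into annotations.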

Spatial Alignment: Aligning sensors spatially requires precise intrinsic calibration (lens distortion, focal length, etc.) and extrinsic calibration (relative position and orientation between sensors). Calibration targets like checkerboards, AprilTags, and reflective markers are widely used in traditional workflows. However, newer approaches like SLAM-based self-calibration or indoor positioning systems (IPS) are gaining traction. The IPS-based method of Rubel et al. (2023), published in IRC 2023, demonstrated how positional data can be used to automate the projection of 3D points onto camera planes, dramatically reducing manual intervention while maintaining accuracy.
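
The projection of 3D points onto a camera plane combines both calibrations: the extrinsics move a point from the LiDAR frame into the camera frame, and the intrinsics map it to a pixel. The sketch below is a generic pinhole projection without lens distortion, not the method from the cited paper. The axis conventions, rotation matrix, and intrinsic values are assumptions for the example.

```python
def project_points(points_lidar, R, t, fx, fy, cx, cy):
    """Project LiDAR points into pixel coordinates with a pinhole model.

    R (3x3 nested list) and t (length 3) map LiDAR coordinates into the
    camera frame. Points at or behind the image plane yield None.
    """
    pixels = []
    for p in points_lidar:
        # Extrinsics: rotate and translate into the camera frame.
        x, y, z = (sum(R[r][c] * p[c] for c in range(3)) + t[r] for r in range(3))
        if z <= 0.0:
            pixels.append(None)
            continue
        # Intrinsics: perspective divide, then scale and shift to pixels.
        pixels.append((fx * x / z + cx, fy * y / z + cy))
    return pixels

# Assumed conventions: LiDAR is x-forward, y-left, z-up;
# camera is x-right, y-down, z-forward; both share an origin here.
R_cam_from_lidar = [[0, -1, 0],
                    [0, 0, -1],
                    [1, 0, 0]]
t_cam_from_lidar = [0.0, 0.0, 0.0]

points = [(10.0, 1.0, 0.5), (-5.0, 0.0, 0.0)]
pixels = project_points(points, R_cam_from_lidar, t_cam_from_lidar,
                        fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
print(pixels)  # [(540.0, 310.0), None]
```

The second point lies behind the camera and is dropped rather than projected, which avoids mirrored "ghost" points in overlay views.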

Once synchronization is achieved, fusion strategies come into play. These are generally classified into three levels: early fusion, mid-level fusion, and late fusion. In early fusion, data from different sensors is combined at the raw or pre-processed input level. For example, projecting LiDAR point clouds onto image planes allows joint annotation in a common 2D space, though this requires precise calibration. Mid-level fusion works on feature representations: feature maps are generated separately for each sensor, then aligned and merged, which keeps the approach flexible while preserving modality-specific strengths. Late fusion, on the other hand, happens after detection or segmentation, where predictions from each modality are combined to arrive at a consensus result. This modular design is seen in systems like DeepFusion, which allows independent tuning and failure isolation across modalities.
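
As a toy illustration of late fusion, the sketch below merges detections from two modalities that have already been transformed into a shared bird's-eye-view frame. Detections are matched greedily by center distance, and matched confidences are combined with a noisy-OR rule, which assumes the two detectors err independently. The function name, the 1.5 m gate, and the fusion rule are illustrative assumptions, not the scheme of any named system.

```python
import math

def late_fuse(dets_a, dets_b, max_dist_m=1.5):
    """Fuse two lists of (x, y, score) detections in a shared BEV frame."""
    fused, used_b = [], set()
    for xa, ya, sa in dets_a:
        # Find the closest unused detection from the other modality within the gate.
        best_j, best_d = None, max_dist_m
        for j, (xb, yb, _) in enumerate(dets_b):
            d = math.hypot(xa - xb, ya - yb)
            if j not in used_b and d <= best_d:
                best_j, best_d = j, d
        if best_j is None:
            fused.append((xa, ya, sa))  # seen by one modality only
        else:
            xb, yb, sb = dets_b[best_j]
            used_b.add(best_j)
            # Noisy-OR: agreement between modalities raises confidence.
            fused.append(((xa + xb) / 2, (ya + yb) / 2, 1 - (1 - sa) * (1 - sb)))
    # Keep detections that only the second modality produced.
    fused += [d for j, d in enumerate(dets_b) if j not in used_b]
    return fused

lidar_dets = [(10.0, 2.0, 0.8), (30.0, -4.0, 0.6)]
radar_dets = [(10.4, 2.2, 0.5), (55.0, 0.0, 0.7)]
fused = late_fuse(lidar_dets, radar_dets)
for x, y, score in fused:
    print(f"({x:.1f}, {y:.1f}) score={score:.2f}")
```

Because each modality produces its detections independently, either detector can be retuned or can fail without changing the fusion step itself.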

Annotation pipelines increasingly integrate fusion-aware workflows, enabling annotators to see synchronized sensor views side by side or as overlaid projections. This ensures label consistency and accelerates quality control, especially in ambiguous or partially occluded scenes. As the ecosystem matures, we can expect to see more fusion-aware annotation tools, dataset formats, and APIs designed to make multi-modal perception easier to build and scale.

Real-World Applications of Multi-Modal Data Annotation

As multi-modal sensor fusion matures, its applications are expanding across industries where safety, accuracy, and environmental adaptability are non-negotiable.

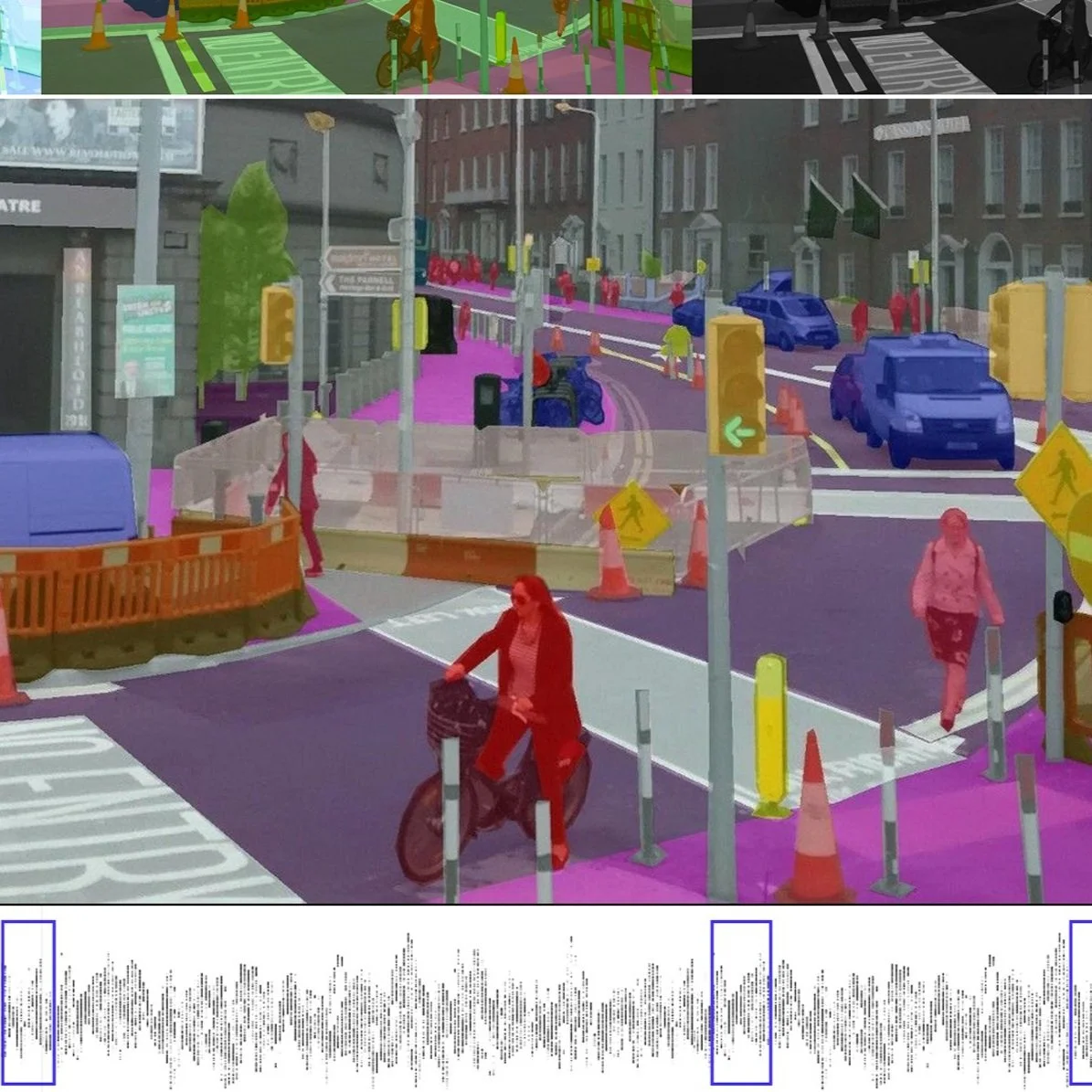

Autonomous Vehicles: Multi-sensor annotation enables precise 3D object detection, lane-level semantic segmentation, and robust behavior prediction. Leading datasets have demonstrated the importance of combining LiDAR’s spatial resolution with camera-based semantics and RADAR’s motion sensitivity. Cooperative perception is becoming especially prominent in connected vehicle ecosystems, where synchronized data from multiple vehicles or roadside units allows for enhanced situational awareness.

In such scenarios, accurate multi-modal annotation is crucial to training models that can understand not just what is visible from one vehicle’s perspective, but from the entire connected network’s viewpoint.

Indoor Robotics: Multi-modal fusion is also central to indoor robotics, especially in warehouse automation, where autonomous forklifts and inspection robots must navigate tight spaces filled with shelves, reflective surfaces, and moving personnel. These environments often lack consistent lighting, making RADAR and LiDAR essential complements to vision systems. Annotated sensor data is used to train SLAM (Simultaneous Localization and Mapping) and obstacle avoidance algorithms that operate in real time.

Aerial Systems: For drones used in inspection, surveying, and delivery, combining camera feeds with LiDAR and RADAR inputs significantly improves obstacle detection and terrain mapping. These systems frequently operate in GPS-denied or visually ambiguous settings, such as fog, dust, or low light, where reliance on a single sensor leads to failure. Multi-modal annotations help train detection models that can anticipate and adapt to such environmental challenges.

Surveillance and Smart Infrastructure Platforms: In environments like airports, industrial zones, or national borders, it’s not enough to simply detect objects; systems must identify, classify, and track them reliably under a wide range of conditions. Fused sensor systems using RADAR for motion detection, LiDAR for shape estimation, and cameras for classification are proving to be more resilient than vision-only systems. Accurate annotation across modalities is essential here to build datasets that reflect the diversity and unpredictability of these high-security environments.

Best Practices for Multi-Modal Data Annotation

Building high-quality, multi-modal datasets that effectively synchronize LiDAR, RADAR, and camera inputs requires a deliberate approach. From data collection to annotation, every stage must be designed with fusion and consistency in mind. Over the past few years, organizations working at the forefront of autonomous systems have refined a number of best practices that significantly improve the efficiency and quality of multi-sensor annotation pipelines.

Invest in sensor synchronization infrastructure

Systems that use hardware-level synchronization, such as shared clocks or PPS (pulse-per-second) signals from GPS units, dramatically reduce the need for post-processing alignment. If such hardware is unavailable, software-level timestamp interpolation should be guided by auxiliary sensors like IMUs or positional data to minimize drift and latency mismatches. Pre-synchronized datasets demonstrate how much easier annotation becomes when synchronization is already built into the data.

Prioritize accurate and regularly validated calibration procedures

Calibration is not a one-time setup; it must be repeated frequently, especially in mobile platforms where physical alignment between sensors can degrade over time due to vibrations or impacts. Using calibration targets is still standard, but emerging methods that leverage SLAM or IPS-based calibration are proving to be faster and more robust. These automated methods not only save time but also reduce dependency on highly trained personnel for every calibration event.
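
A lightweight way to validate calibration between full recalibrations is to track reprojection error: project known 3D reference points (for example, target corners) into the image with the current calibration and compare them to where they are actually observed. The sketch below assumes the projected and observed pixel pairs are already available. The 2-pixel threshold is a hypothetical value that would need tuning per rig.

```python
import math

def mean_reprojection_error(projected_px, observed_px):
    """Average pixel distance between projected and observed reference points."""
    errors = [math.dist(p, o) for p, o in zip(projected_px, observed_px)]
    return sum(errors) / len(errors)

def needs_recalibration(projected_px, observed_px, threshold_px=2.0):
    """Flag the rig when the mean reprojection error exceeds the threshold."""
    return mean_reprojection_error(projected_px, observed_px) > threshold_px

projected = [(100.0, 100.0), (200.0, 150.0)]
observed = [(103.0, 104.0), (200.0, 150.0)]
print(mean_reprojection_error(projected, observed))  # 2.5
print(needs_recalibration(projected, observed))      # True
```

Logging this value per drive or per shift makes gradual drift from vibration visible before it shows up as misaligned labels.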

Embrace fusion-aware tools that present data in a unified view

Annotators should be able to view 2D and 3D representations side by side or in overlaid projections to ensure label consistency. When possible, annotations should be generated in a unified coordinate system rather than labeling each modality separately. This helps eliminate ambiguity and speeds up validation.

Integrate a semi-automated labeling approach

Semi-automated approaches include model-assisted pre-labeling, SLAM-based object tracking for temporal consistency, and projection tools that allow 3D labels to be viewed or edited in camera space. Automation doesn’t replace manual review, but it reduces the cost per frame and makes large-scale dataset creation more feasible. Combining this with human-in-the-loop QA processes ensures that quality remains high while annotation throughput improves.
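
One simple way to combine model-assisted pre-labeling with human review is to route pre-labels by model confidence: confident labels receive a quick spot check, while uncertain ones go to full manual review. The sketch below is a hypothetical triage step. The queue names, the label dictionary layout, and the 0.7 threshold are assumptions for illustration.

```python
def triage_prelabels(prelabels, review_threshold=0.7):
    """Split model pre-labels into review queues based on confidence.

    Every label still reaches a human; only the depth of review differs.
    """
    queues = {"spot_check": [], "full_review": []}
    for label in prelabels:
        queue = "spot_check" if label["score"] >= review_threshold else "full_review"
        queues[queue].append(label)
    return queues

prelabels = [
    {"id": "car_1", "score": 0.93},
    {"id": "ped_7", "score": 0.41},
    {"id": "cyc_2", "score": 0.70},
]
queues = triage_prelabels(prelabels)
print([label["id"] for label in queues["spot_check"]])   # ['car_1', 'cyc_2']
print([label["id"] for label in queues["full_review"]])  # ['ped_7']
```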

Implement cross-modality QA mechanisms

Errors that occur in one sensor view often cascade into others, so quality control should include consistency checks across modalities. These can be implemented through projection-based overlays, intersection-over-union (IoU) comparisons of bounding boxes across views, or automated checks for calibration drift. Without these controls, even well-labeled datasets can contain silent failures that compromise model performance.
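
An IoU-based consistency check can be sketched as follows: for each object, compare the 2D box drawn in the camera view with the 2D box obtained by projecting its 3D label into the same image, and flag objects where the two disagree. The box format `(x1, y1, x2, y2)`, the object IDs, and the 0.5 threshold are illustrative assumptions.

```python
def iou(box_a, box_b):
    """Intersection-over-union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0

def flag_inconsistent(pairs, min_iou=0.5):
    """Return IDs whose camera box and projected 3D box overlap too little."""
    return [obj_id for obj_id, cam_box, proj_box in pairs
            if iou(cam_box, proj_box) < min_iou]

pairs = [
    # (object id, box labeled in the image, box projected from the 3D label)
    ("car_1", (100.0, 100.0, 200.0, 200.0), (110.0, 105.0, 205.0, 198.0)),
    ("ped_7", (300.0, 120.0, 340.0, 220.0), (360.0, 125.0, 400.0, 222.0)),
]
flagged = flag_inconsistent(pairs)
print(flagged)  # ['ped_7']
```

A flagged object does not say which modality is wrong. It only tells reviewers where to look, and a sudden rise in flags across many objects is a useful hint that calibration has drifted.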

Conclusion

As the demand for high-performance autonomous systems grows, the importance of synchronized, multi-modal data annotation becomes increasingly clear. The fusion of LiDAR, RADAR, and camera data allows perception models to interpret their environments with greater depth, resilience, and semantic understanding than any single modality can offer. However, realizing the benefits of this fusion requires meticulous attention to synchronization, calibration, data consistency, and annotation workflow design.

The future of perception will be defined not just by model architecture or training techniques, but by the quality and integrity of the data these systems learn from. For teams working in autonomous driving, humanoids, surveillance, or aerial mapping, multi-modal data annotation is no longer an experimental technique; it’s a necessity. As tools and standards mature, those who invest early in fusion-ready datasets and workflows will be better positioned to build systems that perform reliably, even in the most challenging real-world scenarios.

Leverage DDD’s deep domain experience, fusion-aware annotation pipelines, and cutting-edge toolsets to accelerate your AI development lifecycle. From dataset design to sensor calibration support and semi-automated labeling, we partner with you to ensure your models are trained on reliable, production-grade data.

References:

Baumann, N., Baumgartner, M., Ghignone, E., Kühne, J., Fischer, T., Yang, Y.‑H., Pollefeys, M., & Magno, M. (2024). CR3DT: Camera‑RADAR fusion for 3D detection and tracking. arXiv preprint. https://doi.org/10.48550/arXiv.2403.15313

Rubel, R., Dudash, A., Goli, M., O’Hara, J., & Wunderlich, K. (2023). Automated multimodal data annotation via calibration with indoor positioning system. arXiv preprint. https://doi.org/10.48550/arXiv.2312.03608

Frequently Asked Questions (FAQs)

1. Can synthetic data be used for multi-modal training and annotation?

Yes, synthetic datasets are becoming increasingly useful for pre-training models, especially for rare edge cases. Simulators can generate annotated LiDAR, RADAR, and camera data.

2. How is privacy handled in multi-sensor data collection, especially in public environments?

Cameras can capture identifiable information, unlike LiDAR or RADAR. To address privacy concerns, collected image data is often anonymized by blurring faces and license plates before annotation or release. Additionally, data collection in public areas may require permits and explicit privacy policies, particularly in the EU under the GDPR.

3. Is it possible to label RADAR data directly, or must it be fused first?

RADAR data can be labeled directly, especially when used in its image-like formats (e.g., range-Doppler maps). However, due to its sparse and noisy nature, annotations are often guided by fusion with LiDAR or camera data to increase interpretability. Some tools now allow direct annotation in radar frames, but it’s still less mature than LiDAR/camera workflows.

4. How do annotation errors in one modality affect model performance in fusion systems?

An error in one modality can propagate and confuse feature alignment or consensus mechanisms, especially in mid- and late-fusion architectures. For example, a misaligned bounding box in LiDAR space can degrade the effectiveness of a BEV fusion layer, even if the camera annotation is correct.