DDD Solutions Engineering Team

11 Aug, 2025

While breakthroughs in deep learning architectures and simulation environments often capture the spotlight, the practical intelligence of autonomous vehicles (AVs) stems from more foundational elements: the quality of the data they are trained on and the scenarios they are tested in.

High-quality data labeling and thorough real-world testing are not just supporting functions; they are essential building blocks that determine whether an AV can make safe, informed decisions in dynamic environments.

This blog outlines how data labeling and real-world testing complement each other in the AV development lifecycle.

The Role of Data Labeling in Autonomous Vehicle Development

Why Data Labeling Matters

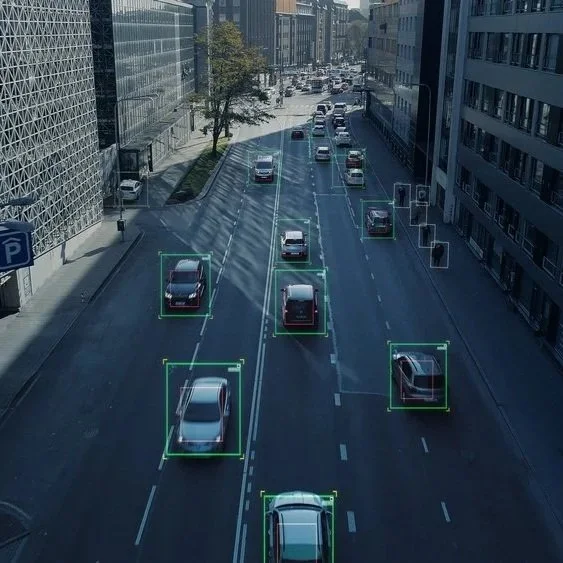

At the core of every autonomous vehicle is a perception system trained to interpret its surroundings through sensor data. For that system to make accurate decisions, such as identifying pedestrians, navigating intersections, or merging in traffic, it must be trained on massive volumes of precisely labeled data. These annotations are far more than a technical formality; they form the ground truth that neural networks learn from. Without them, the vehicle’s ability to distinguish a cyclist from a signpost, or a curb from a shadow, becomes unreliable.

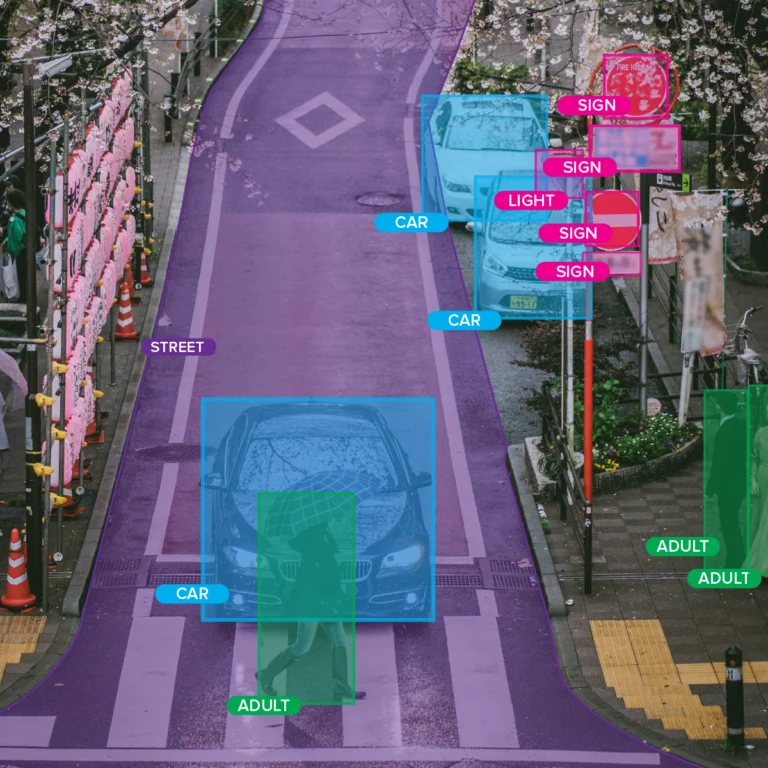

Data labeling in the AV domain typically involves multimodal inputs: high-resolution images, LiDAR point clouds, radar streams, and even audio signals in some edge cases. Each modality requires a different labeling strategy, but all share a common goal: to reflect reality with high fidelity and semantic richness. This labeled data powers key perception tasks such as object detection, semantic segmentation, lane detection, and Simultaneous Localization and Mapping (SLAM). The accuracy of these models in real-world deployments directly correlates with the quality and diversity of the labels they are trained on.

Types of Labeling

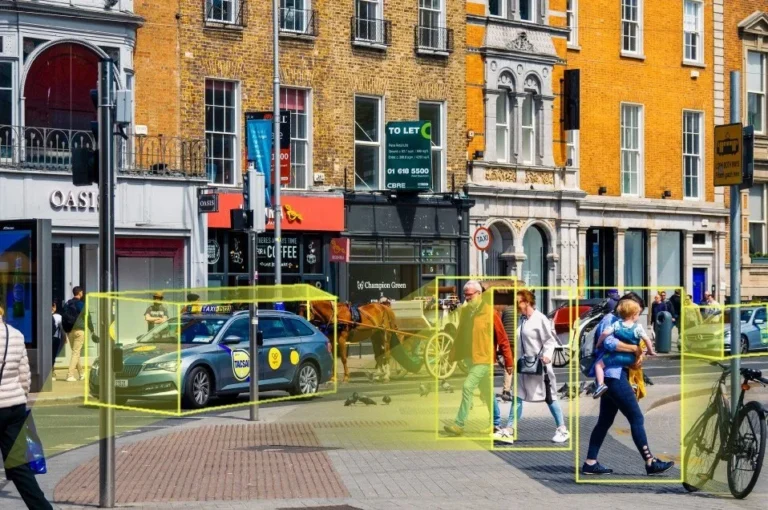

Different machine learning tasks require different annotation formats. For object detection, 2D bounding boxes are commonly used to enclose vehicles, pedestrians, traffic signs, and other roadway actors. For richer spatial understanding, 3D cuboids capture an object's position, dimensions, and orientation, and tracking them across frames lets the vehicle estimate velocity. Semantic segmentation assigns a class label to every pixel in an image or point in a LiDAR scan, while instance segmentation also separates individual objects of the same class; both are crucial for understanding drivable space, road markings, and occlusions.

Point cloud annotation is particularly critical for AVs, as it adds a third spatial dimension to perception. These annotations help train models that operate on LiDAR data, allowing the vehicle to perceive its environment in 3D and adapt to complex traffic geometries. Lane and path markings are another category, often manually annotated due to their variability across regions and road types. Each annotation type plays a distinct role in making perception systems more accurate, robust, and adaptable to real-world variability.
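The annotation types described above can be pictured as simple data records. The following is a minimal sketch, not a standard schema: the class names `Box2D` and `Cuboid3D`, their fields, and the example values are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Box2D:
    """Axis-aligned 2D bounding box in image pixel coordinates (hypothetical schema)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Clamp at zero so a malformed box never reports negative area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


@dataclass
class Cuboid3D:
    """3D cuboid: center, dimensions in meters, and heading (hypothetical schema)."""
    label: str
    cx: float
    cy: float
    cz: float
    length: float
    width: float
    height: float
    yaw_rad: float

    def volume(self) -> float:
        return self.length * self.width * self.height


# Illustrative values only.
pedestrian = Box2D("pedestrian", x_min=100.0, y_min=50.0, x_max=140.0, y_max=150.0)
car = Cuboid3D("car", cx=12.0, cy=-1.5, cz=0.8,
               length=4.5, width=1.8, height=1.5, yaw_rad=0.1)

# Semantic segmentation of a point cloud: one class label per LiDAR point.
point_labels = ["road", "road", "car", "sidewalk"]
```

Real annotation formats usually carry more metadata (sensor ID, timestamp, track ID, occlusion flags), but the core geometry is typically close to this.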

Real-World Testing for Autonomous Vehicles

What Real-World Testing Entails

No matter how well-trained an autonomous vehicle is in simulation or with labeled datasets, it must ultimately perform safely and reliably in the real world. Real-world testing provides the operational grounding that simulations and synthetic datasets cannot fully replicate. It involves deploying AVs on public roads or closed test tracks, collecting sensor logs during actual driving, and exposing the vehicle to unpredictable conditions, human behavior, and edge-case scenarios that occur organically.

During these deployments, the vehicle captures massive volumes of multimodal data: camera footage, LiDAR sweeps, radar signals, GPS and IMU readings, as well as system logs and actuator commands. These recordings are not just used for performance benchmarking; they form the raw inputs for future data labeling, scenario mining, and model refinement. Human interventions, driver overrides, and unexpected behaviors encountered on the road help identify system weaknesses and reveal where additional training or re-annotation is required.

Real-world testing also involves behavioral observations. AV systems must learn how to interpret ambiguous situations like pedestrians hesitating at crosswalks, cyclists merging unexpectedly, or aggressive drivers deviating from norms. Infrastructure factors such as poor signage and lane closures, along with weather conditions, further test the robustness of perception and control. Unlike controlled simulation environments, real-world testing surfaces the nuances and exceptions that no pre-scripted scenario can fully anticipate.

Goals and Metrics

The primary goal of real-world testing is to validate the AV system’s ability to operate safely and reliably under a wide range of conditions. This includes compliance with industry safety standards such as ISO 26262 for functional safety and emerging frameworks from the United Nations Economic Commission for Europe (UNECE). Engineers use real-world tests to measure system robustness across varying lighting conditions, weather events, road surfaces, and traffic densities.

Key metrics tracked during real-world testing include disengagement frequency (driver takeovers), intervention triggers, perception accuracy, and system latency. More sophisticated evaluations assess performance in specific risk domains, such as obstacle avoidance in urban intersections or lane-keeping under degraded visibility. Failures and anomalies are logged, triaged, and often transformed into re-test scenarios in simulation or labeled datasets to close the learning loop.
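As an illustration of how a metric such as disengagement frequency might be computed from drive logs, here is a minimal sketch. The `DriveLog` record and the per-1,000-km normalization are assumptions for the example; programs differ in how they define and normalize this metric.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DriveLog:
    """Summary of one test drive (hypothetical record)."""
    distance_km: float
    disengagements: int


def disengagements_per_1000_km(logs: List[DriveLog]) -> float:
    """Aggregate disengagement rate across drives, normalized per 1,000 km."""
    total_km = sum(log.distance_km for log in logs)
    if total_km <= 0:
        raise ValueError("total distance must be positive")
    total_events = sum(log.disengagements for log in logs)
    return 1000.0 * total_events / total_km


logs = [DriveLog(distance_km=250.0, disengagements=1),
        DriveLog(distance_km=750.0, disengagements=2)]
rate = disengagements_per_1000_km(logs)  # 3 events over 1,000 km -> 3.0
```

Aggregating over total distance, rather than averaging per-drive rates, keeps short drives from dominating the figure.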

Functional validation also includes testing of fallback strategies: what the vehicle does when a subsystem fails, when the road becomes undrivable, or when the AV cannot confidently interpret its surroundings. These behaviors must not only be safe but also align with regulatory expectations and public trust.

Labeling and Testing Feedback Cycle for AV

The Training-Testing Feedback Loop

The development of autonomous vehicles is not a linear process; it operates as a feedback loop. Real-world testing generates data that reveals how the vehicle performs under actual conditions, including failure points, unexpected behaviors, and edge-case encounters. These instances often highlight gaps in the training data or expose situations that were underrepresented or poorly annotated. That feedback is then routed back into the data labeling pipeline, where new annotations are created, and models are retrained to better handle those scenarios.

This cyclical workflow is central to improving model robustness and generalization. For example, if a vehicle struggles to detect pedestrians partially occluded by parked vehicles, engineers can isolate that failure, extract relevant sequences from the real-world logs, and annotate them with fine-grained labels. Once retrained on this enriched dataset, the model is redeployed for further testing. If performance improves, the cycle continues. If not, it signals deeper model or sensor limitations. Over time, this iterative loop tightens the alignment between what the AV system sees and how it acts.

Modern AV pipelines automate portions of this loop. Tools ingest driving logs, flag anomalies, and even pre-label data based on model predictions. This semi-automated system accelerates the identification of edge cases and reduces the time between observing a failure and addressing it in training. The result is not just a more intelligent vehicle, but one that is continuously learning from its own deployment history.
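One way to picture the pre-labeling step is a confidence-based triage: predictions the model is confident about become draft labels, and the rest go to human annotators. The sketch below assumes a hypothetical `Prediction` record and an arbitrary threshold of 0.8; neither comes from a specific tool.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    """One model prediction on a logged frame (hypothetical record)."""
    frame_id: str
    label: str
    confidence: float


def triage_predictions(
    preds: List[Prediction], threshold: float = 0.8
) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into draft pre-labels and items needing human review."""
    pre_labels = [p for p in preds if p.confidence >= threshold]
    needs_review = [p for p in preds if p.confidence < threshold]
    return pre_labels, needs_review


preds = [
    Prediction("frame_001", "pedestrian", 0.95),
    Prediction("frame_002", "cyclist", 0.42),
    Prediction("frame_003", "car", 0.88),
]
pre_labels, needs_review = triage_predictions(preds)
```

In practice the high-confidence pre-labels would still be spot-checked, since a model can be confidently wrong in exactly the scenarios the loop is meant to fix.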

Recommendations for Data Labeling in Autonomous Driving

Building intelligence in autonomous vehicles is not simply a matter of applying the latest deep learning techniques; it requires designing processes that tightly couple data quality, real-world validation, and continuous improvement.

Invest in Hybrid Labeling Pipelines with Quality Assurance Feedback

Manual annotation remains essential for complex and ambiguous scenes, but it cannot scale alone. Practitioners should implement hybrid pipelines that combine human-in-the-loop labeling with automated model-assisted annotation.

Equally important is the incorporation of feedback loops in the annotation workflow. Labels should not be treated as static ground truth; they should evolve based on downstream model performance. Establishing QA mechanisms that flag and correct inconsistent or low-confidence annotations will directly improve model outcomes and reduce the risk of silent failures during deployment.
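A simple QA mechanism of this kind compares two annotators' boxes for the same object and flags pairs that overlap poorly. The sketch below uses intersection over union (IoU) as the agreement measure; the function names and the 0.5 threshold are assumptions chosen for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def flag_for_review(
    pairs: Dict[str, Tuple[Box, Box]], min_iou: float = 0.5
) -> List[str]:
    """Return IDs of objects whose two annotations agree less than min_iou."""
    return sorted(obj_id for obj_id, (a, b) in pairs.items() if iou(a, b) < min_iou)


pairs = {
    "obj_1": ((0.0, 0.0, 10.0, 10.0), (0.0, 0.0, 10.0, 10.0)),  # identical boxes
    "obj_2": ((0.0, 0.0, 10.0, 10.0), (5.0, 0.0, 15.0, 10.0)),  # half-overlapping
}
flagged = flag_for_review(pairs)
```

The same comparison can be run between a human label and a model prediction to surface candidates for re-annotation.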

Prioritize Edge-Case Collection from Real-World Tests

Real-world driving data contains a wealth of rare but high-impact scenarios that simulations alone cannot generate. Instead of focusing solely on high-volume logging, AV teams should develop tools that automatically identify and extract unusual or unsafe situations. These edge cases are among the most valuable training assets, often revealing systemic weaknesses in perception or control.

Practitioners should also categorize edge cases systematically, by behavior type, location, and environmental condition, to ensure targeted model refinement and validation.
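Systematic categorization can be as simple as grouping extracted clips by a fixed set of tags. The following sketch assumes a hypothetical `EdgeCase` record with the three dimensions named above; the tag values are invented examples.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class EdgeCase:
    """One extracted edge-case clip with its tags (hypothetical record)."""
    clip_id: str
    behavior: str
    location: str
    condition: str


def group_edge_cases(cases: List[EdgeCase]) -> Dict[Tuple[str, str, str], List[str]]:
    """Group clip IDs by (behavior, location, condition)."""
    groups: Dict[Tuple[str, str, str], List[str]] = defaultdict(list)
    for case in cases:
        groups[(case.behavior, case.location, case.condition)].append(case.clip_id)
    return dict(groups)


cases = [
    EdgeCase("clip_01", "jaywalking", "urban_intersection", "rain"),
    EdgeCase("clip_02", "jaywalking", "urban_intersection", "rain"),
    EdgeCase("clip_03", "cut_in", "highway", "clear"),
]
groups = group_edge_cases(cases)
```

Counting clips per group then shows which combinations are underrepresented and where targeted collection or labeling effort should go.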

Use Domain Adaptation Techniques to Bridge Simulation and Reality

While simulation environments offer control and scalability, they often fail to capture the visual and behavioral diversity of the real world. Bridging this gap requires applying domain adaptation techniques such as style transfer, distribution alignment, or mixed-modality training. These methods allow models trained in simulation to generalize more effectively to real-world deployments.

Teams should also consider mixing synthetic and real data within training batches, especially for rare classes or sensor occlusions. The key is to ensure that models not only learn from clean and idealized conditions but also from the messy, ambiguous, and imperfect inputs found on real roads.
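Mixing synthetic and real samples within a batch can be sketched as drawing a fixed share of each batch from the synthetic pool. The function name `mixed_batch` and the 25% synthetic share are assumptions for illustration; the right ratio depends on the task and has to be found empirically.

```python
import random
from typing import List


def mixed_batch(
    real: List[str],
    synthetic: List[str],
    batch_size: int,
    synthetic_fraction: float,
    rng: random.Random,
) -> List[str]:
    """Draw one batch with a fixed share of synthetic samples."""
    if not 0.0 <= synthetic_fraction <= 1.0:
        raise ValueError("synthetic_fraction must be between 0 and 1")
    # Round down, so the synthetic share never exceeds the requested fraction.
    n_synthetic = int(batch_size * synthetic_fraction)
    n_real = batch_size - n_synthetic
    # random.Random.sample draws without replacement, so each pool must
    # hold at least as many items as are requested from it.
    batch = rng.sample(real, n_real) + rng.sample(synthetic, n_synthetic)
    rng.shuffle(batch)
    return batch


rng = random.Random(0)  # fixed seed so the sketch is reproducible
real_pool = [f"real_{i}" for i in range(20)]
synthetic_pool = [f"syn_{i}" for i in range(20)]
batch = mixed_batch(real_pool, synthetic_pool, batch_size=8,
                    synthetic_fraction=0.25, rng=rng)
```

Here the items are just sample identifiers; in a training pipeline the same idea would typically live in a custom batch sampler.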

Track Metrics Across the Data–Model–Validation Lifecycle

Developing an AV system is a lifecycle process, not a series of discrete tasks. Practitioners must track performance across the full development chain, from data acquisition and labeling to model training and real-world deployment. Metrics should include annotation accuracy, label diversity, edge-case recall, simulation coverage, deployment disengagements, and regulatory compliance.

Establishing these metrics enables informed decision-making and accountability. It also supports more efficient iteration, as teams can pinpoint whether performance regressions are due to data issues, model limitations, or environmental mismatches. Ultimately, mature metric tracking is what separates experimental AV programs from production-ready platforms.

How DDD can help

Digital Divide Data (DDD) supports autonomous vehicle developers by delivering high-quality, scalable data labeling services essential for training and validating perception systems. With deep expertise in annotating complex sensor data, including 2D/3D imagery, LiDAR point clouds, and semantic scenes, DDD enables AV teams to improve model accuracy and accelerate feedback cycles between real-world testing and retraining. Its hybrid labeling approach, combining expert human annotators with model-assisted workflows and rigorous QA, ensures consistency and precision even in edge-case scenarios.

By integrating seamlessly into testing-informed annotation pipelines and operating with global SMEs, DDD helps AV innovators build safer, smarter systems with high-integrity data at the core.

Conclusion

While advanced algorithms and simulation environments receive much of the attention, they can only function effectively when grounded in accurate, diverse, and well-structured data. Labeled inputs teach the vehicle what to see, and real-world exposure teaches it how to respond. Autonomy is not simply a function of model complexity, but of how well the system can learn from both curated data and lived experience. In the race toward autonomy, data and road miles aren’t just fuel; they’re the map and compass. Mastering both is what will distinguish truly intelligent vehicles from those that are merely functional.

Partner with Digital Divide Data to power your autonomous vehicle systems with precise, scalable, and ethically sourced data labeling solutions.


Frequently Asked Questions (FAQs)

1. How is data privacy handled in AV data collection and labeling?

Autonomous vehicles capture vast amounts of sensor data, which can include identifiable information such as faces, license plates, or locations. To comply with privacy regulations like GDPR in Europe and CCPA in California, AV companies typically anonymize data before storing or labeling it. Techniques include blurring faces or plates, removing GPS metadata, and encrypting raw data during transmission. Labeling vendors are also required to follow strict access controls and audit policies to ensure data security.

2. What is the role of simulation in complementing real-world testing?

Simulations play a critical role in AV development by enabling the testing of thousands of scenarios quickly and safely. They are particularly useful for rare or dangerous events, like a child running into the road or a vehicle making an illegal turn, that may never occur during physical testing. While real-world testing validates real behavior, simulation helps stress-test systems across edge cases, sensor failures, and adversarial conditions without putting people or property at risk.

3. How do AV companies determine when a model is “good enough” for deployment?

There is no single threshold for model readiness. Companies use a combination of quantitative metrics (e.g., precision/recall, intervention rates, disengagement frequency) and qualitative reviews (e.g., behavior in edge cases, robustness under sensor occlusion). Before deployment, models are typically validated against a suite of simulation scenarios, benchmark datasets, and real-world replay testing.

4. Can crowdsourcing be used for AV data labeling?

While crowdsourcing is widely used in general computer vision tasks, its role in AV labeling is limited due to the complexity and safety-critical nature of the domain. Annotators must understand 3D space, temporal dynamics, and detailed labeling schemas that require expert training. However, some platforms use curated and trained crowdsourcing teams to handle simpler tasks or validate automated labels under strict QA protocols.