DDD Solutions Engineering Team

June 24, 2025

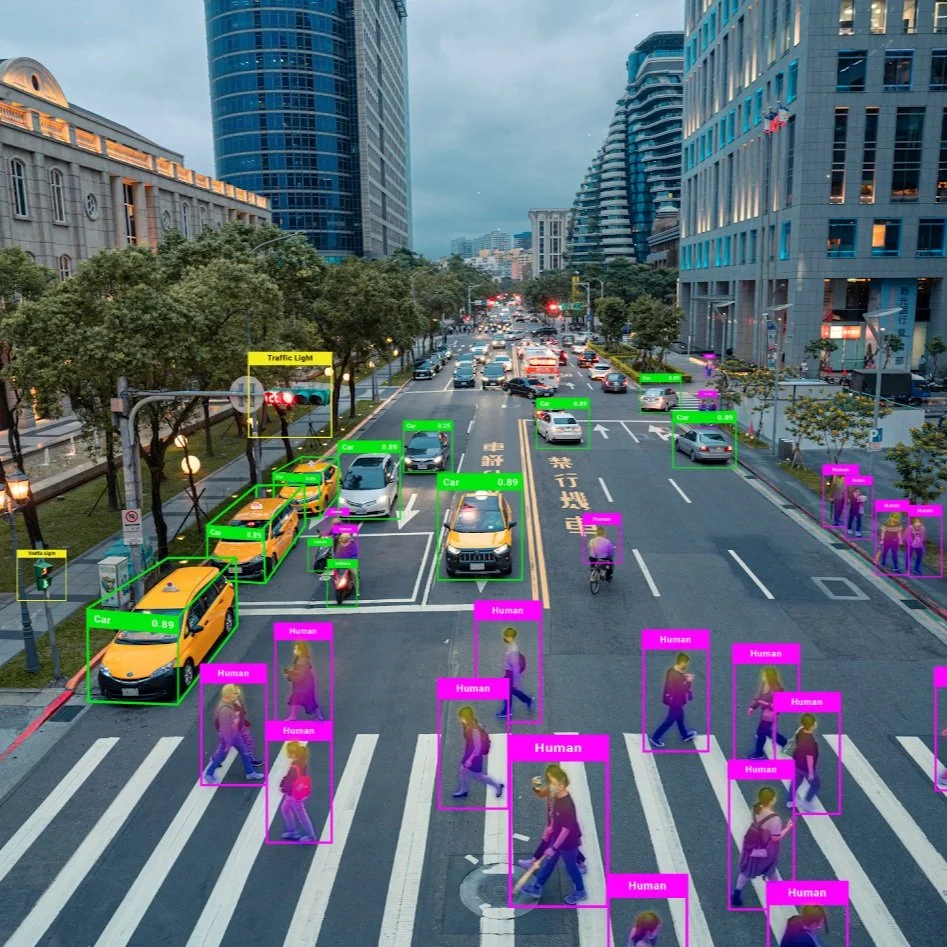

Behind the sleek hardware and intelligent systems powering autonomous vehicles lies a complex web of perception technologies that enable machines to see, understand, and react to the world around them. Among these, two key techniques stand out: semantic segmentation and instance segmentation.

They allow an autonomous vehicle to know where the road ends, where a pedestrian begins, and how to respond in real time to a cluttered, unpredictable urban environment. From differentiating between two closely parked cars to detecting the edge of a curb under poor lighting, these segmentation methods are foundational to machine perception.

This blog explores the role of semantic and instance segmentation in autonomous vehicles, examining how each technique contributes to vehicle perception, the unique challenges they face in urban settings, and how integrating both can lead to safer and more intelligent navigation systems.

What Are Semantic and Instance Segmentation for Autonomous Vehicles?

In autonomous driving, perception systems must translate raw visual data into a structured, actionable understanding. One of the most important components in this process is segmentation, which divides an image into distinct regions based on the objects or surfaces represented. This segmentation allows a vehicle to differentiate between the road, other vehicles, pedestrians, signage, and surrounding infrastructure, all of which are essential for safe navigation.

Semantic Segmentation

Semantic segmentation provides a broad understanding of the driving environment by assigning a category to each pixel in the image. All pixels that represent the same type of object, such as a building, a pedestrian, or the road, are grouped under a shared class label. This classification helps the vehicle recognize navigable surfaces, roadside boundaries, and static structures. In effect, semantic segmentation offers a map-like view of the surroundings, which is invaluable for high-level planning and general context awareness.
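As a minimal sketch of the per-pixel labeling described above, the snippet below turns per-pixel class scores into a label map by picking the highest-scoring class for each pixel. The class list, the `semantic_labels` helper, and the toy scores are illustrative assumptions; a real system would obtain the scores from a trained network.

```python
# Hypothetical class list for illustration; real datasets define their own.
CLASSES = ["road", "sidewalk", "pedestrian"]

def semantic_labels(scores):
    """Turn per-pixel class scores (H x W x C) into a label map (H x W).

    Each pixel receives the index of its highest-scoring class.
    """
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]

# Toy 2x3 "image": each pixel holds one score per class.
scores = [
    [[0.9, 0.05, 0.05], [0.2, 0.1, 0.7], [0.1, 0.2, 0.7]],
    [[0.8, 0.1, 0.1], [0.7, 0.2, 0.1], [0.1, 0.8, 0.1]],
]
label_map = semantic_labels(scores)
for row in label_map:
    print([CLASSES[i] for i in row])
```

Note that the two adjacent "pedestrian" pixels in the top row carry the same label, so the map alone does not say whether they belong to one person or two.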

Despite its value, semantic segmentation cannot distinguish between separate objects of the same type. For example, while it can identify the presence of pedestrians in a scene, it cannot tell how many there are or where one individual ends and another begins. This limitation becomes critical in dense urban scenarios where vehicles must react differently to each nearby object. Without the ability to treat these objects as separate entities, the system cannot accurately track movement, predict behavior, or prioritize safety decisions in real time.

Advantages of Semantic Segmentation

Semantic segmentation offers several key benefits in the development and deployment of autonomous driving systems. Its primary strength lies in the ability to provide a comprehensive, high-level understanding of the environment by labeling every pixel with a class identifier. This full-scene categorization helps the vehicle recognize the structure of the road, the presence of sidewalks, crosswalks, curbs, lane markings, and traffic control elements such as signs or lights.

One significant advantage of semantic segmentation is its computational efficiency. Since it does not need to distinguish between individual object instances, it typically requires less computation and memory than instance segmentation, making it better suited to real-time applications where rapid processing is essential. This efficiency is especially valuable in early perception stages or embedded systems where memory and processing power are limited.

Instance Segmentation

Instance segmentation builds on semantic segmentation by not only classifying pixels by object type but also distinguishing between individual instances within the same category. This means that two cars side by side or a group of pedestrians are treated as separate, uniquely identified objects. This capability is crucial for tracking motion over time, predicting trajectories, and making context-sensitive decisions. For autonomous driving, it enables the system to follow a specific vehicle, yield to a crossing pedestrian, or anticipate the movements of a cyclist in a way that semantic segmentation alone cannot support.

While semantic segmentation provides the foundational structure of a scene, instance segmentation enables nuanced object-level understanding. Together, they form a complementary system where one outlines the general layout and the other fills in the detailed behavior of dynamic elements. This dual-layered perception is particularly vital in urban environments where unpredictability, high object density, and rapid decision-making are the norms.
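To make the difference concrete, the sketch below derives instance ids from a semantic label map by grouping connected pixels of one class. This is a deliberately naive stand-in, not how learned instance segmentation models work: two pedestrians who touch in the image would merge into one blob. The `label_instances` helper and the `PEDESTRIAN` class index are assumptions made for illustration.

```python
from collections import deque

def label_instances(label_map, target_class):
    """Give each 4-connected blob of target_class a distinct id (1, 2, ...).

    Pixels of other classes stay 0. Returns (instance_ids, blob_count).
    """
    height, width = len(label_map), len(label_map[0])
    instance_ids = [[0] * width for _ in range(height)]
    next_id = 0
    for r in range(height):
        for c in range(width):
            if label_map[r][c] != target_class or instance_ids[r][c]:
                continue
            # Unvisited pixel of the target class: start a new instance.
            next_id += 1
            instance_ids[r][c] = next_id
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and label_map[ny][nx] == target_class
                            and instance_ids[ny][nx] == 0):
                        instance_ids[ny][nx] = next_id
                        queue.append((ny, nx))
    return instance_ids, next_id

PEDESTRIAN = 2  # hypothetical class index
label_map = [
    [2, 2, 0, 0, 2],
    [2, 0, 0, 0, 2],
    [0, 0, 0, 0, 0],
]
instance_ids, count = label_instances(label_map, PEDESTRIAN)
print(count)         # number of separate pedestrian blobs
print(instance_ids)  # per-pixel instance ids
```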

Advantages of Instance Segmentation

Instance segmentation provides an extra layer of intelligence by offering detailed, object-level awareness. Unlike semantic segmentation, it allows the vehicle to identify and distinguish between different objects within the same category. This capability is vital for dynamic interaction with the environment, where understanding individual behavior and movement patterns is necessary.

The main advantage of instance segmentation is its support for object tracking and trajectory prediction. For example, in a scenario with multiple pedestrians near a crosswalk, instance segmentation enables the vehicle to track each one separately, assess their movement patterns, and predict whether they intend to cross the street. This individualized attention makes it possible to make fine-grained driving decisions that prioritize safety and responsiveness.
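One simple way to carry instance identities from frame to frame is to match each new detection to the previous-frame object it overlaps most. The sketch below does this greedily using intersection over union (IoU) of bounding boxes. The function names, the `min_iou` threshold, and the toy boxes are assumptions for illustration; production trackers often add motion models and optimal assignment on top of this idea.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0

def match_tracks(tracks, detections, min_iou=0.3):
    """Greedily assign each detection to the best unclaimed track id.

    tracks: {track_id: box} from the previous frame.
    detections: list of boxes in the current frame.
    Returns {detection_index: track_id}; unmatched detections get new ids.
    """
    pairs = sorted(
        ((box_iou(box, det), tid, i)
         for tid, box in tracks.items()
         for i, det in enumerate(detections)),
        reverse=True,
    )
    assigned, used_tracks = {}, set()
    for iou, tid, i in pairs:
        if iou < min_iou:
            break  # remaining pairs overlap too little to be the same object
        if i in assigned or tid in used_tracks:
            continue
        assigned[i] = tid
        used_tracks.add(tid)
    next_id = max(tracks, default=0) + 1
    for i in range(len(detections)):
        if i not in assigned:
            assigned[i] = next_id
            next_id += 1
    return assigned

previous = {1: (10, 10, 20, 30), 2: (40, 10, 50, 30)}
current = [(41, 10, 51, 30), (11, 10, 21, 30), (80, 10, 90, 30)]
assignment = match_tracks(previous, current)
print(assignment)
```

In the toy data, the first two detections are the two known pedestrians shifted by one pixel, and the third is a newcomer that receives a fresh id.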

Instance segmentation is also critical for collision avoidance and behavior prediction in dense traffic. By distinguishing between different vehicles, cyclists, or other moving agents, the system can estimate how each object is likely to behave and adapt its own actions accordingly. This is especially important in complex or crowded urban environments, where multiple agents are in motion simultaneously and in close proximity.

Integration of Semantic and Instance Segmentation in Urban Driving

In the dynamic and often unpredictable environment of urban driving, both semantic and instance segmentation play vital roles. Semantic segmentation provides a broad understanding of the scene, which is essential for navigation and path planning. Instance segmentation offers detailed information about individual objects, which is crucial for tasks like obstacle avoidance and interaction with other road users.

Recent work has unified the two tasks under panoptic segmentation, which assigns every pixel a class label and, for countable objects such as vehicles and pedestrians, an instance identity as well. A single panoptic output therefore combines the strengths of semantic and instance segmentation. This integrated approach is particularly beneficial in urban environments, where the complexity and density of objects require both broad and detailed scene interpretation.
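As a rough illustration of what a combined output can look like, the sketch below merges a semantic label map and an instance id map into a single map. The encoding `class_id * 1000 + instance_id`, the `THING_CLASSES` set, and the `merge_panoptic` helper are assumptions chosen for this example rather than a required format.

```python
THING_CLASSES = {2}  # hypothetical: class 2 = pedestrian (countable objects)
ID_STRIDE = 1000     # assumed encoding: class_id * 1000 + instance_id

def merge_panoptic(semantic, instances):
    """Combine a semantic label map and an instance id map into one map.

    Background classes such as road keep instance id 0; countable classes
    keep the instance id supplied by the instance map.
    """
    panoptic = []
    for sem_row, inst_row in zip(semantic, instances):
        row = []
        for cls, inst in zip(sem_row, inst_row):
            inst = inst if cls in THING_CLASSES else 0
            row.append(cls * ID_STRIDE + inst)
        panoptic.append(row)
    return panoptic

semantic = [[0, 2, 2], [0, 1, 2]]
instances = [[0, 1, 2], [0, 0, 2]]
panoptic = merge_panoptic(semantic, instances)
print(panoptic)
```

Each value can be decoded again with integer division and remainder, so downstream code can recover both the class and the individual object from one map.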

By leveraging the strengths of both semantic and instance segmentation, autonomous vehicles can achieve a more robust and nuanced understanding of urban environments, leading to improved safety and efficiency in navigation and decision-making processes.

What Are the Challenges of Semantic and Instance Segmentation?

Urban environments present a complex array of visual elements, making accurate segmentation a formidable task. The challenges are multifaceted, impacting both semantic and instance segmentation techniques.

1. Occlusions and Overlapping Objects

In dense urban settings, objects frequently occlude one another. Pedestrians may be partially hidden by vehicles, or street signs might be obscured by foliage. Semantic segmentation handles overlapping objects of the same class poorly, because it assigns one label to all of their pixels and merges them into a single region. Instance segmentation aims to overcome this by identifying separate objects, but occlusions can still cause it to split one object into fragments or draw inaccurate boundaries.

2. Variability in Object Scales

Urban scenes encompass objects of widely varying apparent size: a distant traffic sign may occupy only a handful of pixels, while a nearby pedestrian or vehicle fills much of the frame. This scale variability poses a significant challenge for segmentation algorithms, which must accurately identify and classify objects regardless of their size.

3. Dynamic Lighting and Weather Conditions

Lighting conditions in urban environments can change rapidly due to factors like time of day, weather, and artificial lighting. These variations can adversely affect the performance of segmentation models, which may have been trained under specific lighting conditions. To mitigate this, some approaches incorporate data augmentation techniques during training to expose models to a broader range of lighting scenarios.
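A lighting augmentation of this kind can be sketched as a random brightness scale followed by a random gamma curve, applied to the image only. The `jitter_lighting` helper and its default ranges are illustrative assumptions, not tuned values, and the example uses a tiny grayscale image to stay self-contained.

```python
import random

def jitter_lighting(image, rng, brightness=(0.6, 1.4), gamma=(0.7, 1.5)):
    """Apply a random brightness scale and gamma curve to a grayscale image.

    image: rows of pixel intensities in [0, 255].
    rng: a random.Random instance, passed in so results are reproducible.
    """
    scale = rng.uniform(*brightness)
    g = rng.uniform(*gamma)
    out = []
    for row in image:
        new_row = []
        for value in row:
            # Scale, clamp to [0, 1], then bend the tone curve with gamma.
            normalized = min(1.0, max(0.0, value / 255 * scale))
            new_row.append(round(255 * normalized ** g))
        out.append(new_row)
    return out

rng = random.Random(0)
image = [[0, 64, 128], [192, 255, 32]]
augmented = jitter_lighting(image, rng)
print(augmented)
```

Because only pixel intensities change, the segmentation labels for the image stay valid and can be reused unchanged for the augmented copy.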

4. Real-Time Processing Requirements

Autonomous vehicles require real-time processing of visual data to make immediate decisions. Semantic segmentation models often offer faster processing times but may lack the granularity needed for certain tasks. Instance segmentation provides more detailed information but at the cost of increased computational complexity. Balancing speed and accuracy remains a critical challenge in deploying these models in real-world urban driving scenarios.

5. Sparse and Noisy Data

Sensors like LiDAR generate point cloud data that can be sparse and noisy, especially at greater distances. This sparsity makes it difficult for segmentation algorithms to accurately identify and classify objects.
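One common pre-processing idea for noisy point clouds is to drop points that have too few neighbors nearby. The brute-force sketch below illustrates it on a toy cloud; the `remove_isolated_points` helper, the `radius`, and the `min_neighbors` values are assumptions for this example. A fixed radius is a real trade-off here, because legitimate returns from distant objects are also sparse and could be discarded.

```python
import math

def remove_isolated_points(points, radius=1.0, min_neighbors=2):
    """Drop points that have fewer than min_neighbors others within radius.

    Brute force (quadratic time), which is fine for a toy example only.
    """
    kept = []
    for i, p in enumerate(points):
        neighbors = sum(
            1 for j, q in enumerate(points)
            if i != j and math.dist(p, q) <= radius
        )
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept

cloud = [
    (0.0, 0.0, 0.0), (0.3, 0.1, 0.0), (0.1, 0.4, 0.1),  # dense cluster
    (25.0, 3.0, 0.5),                                    # isolated return
]
filtered = remove_isolated_points(cloud)
print(filtered)
```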

6. Dataset Limitations

The performance of segmentation models heavily depends on the quality and diversity of training datasets. Many existing datasets may not capture the full variability of urban environments, leading to models that perform well in training but poorly in real-world scenarios. Efforts are underway to develop more comprehensive datasets that include a wider range of urban scenes and conditions.

7. Integration of Multi-Modal Data

Combining data from multiple sensors, such as cameras and LiDAR, can enhance segmentation accuracy. However, integrating these data sources poses challenges in terms of synchronization, calibration, and data fusion. Developing models that can effectively leverage multi-modal data remains an active area of research.
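The synchronization part of this problem can be sketched as pairing each camera frame with the LiDAR sweep closest in time and discarding pairs that are too far apart. The `pair_by_timestamp` helper, the `max_gap` tolerance, and the timestamps below are assumptions for illustration; calibration and the fusion model itself are out of scope for the snippet.

```python
from bisect import bisect_left

def pair_by_timestamp(camera_ts, lidar_ts, max_gap=0.05):
    """Pair each camera timestamp with the nearest LiDAR timestamp.

    Both lists are in seconds and sorted ascending. Pairs further apart
    than max_gap (an assumed tolerance) are dropped.
    """
    pairs = []
    for t in camera_ts:
        i = bisect_left(lidar_ts, t)
        # The nearest sweep is either just before or just after position i.
        candidates = lidar_ts[max(0, i - 1):i + 1]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda s: abs(s - t))
        if abs(nearest - t) <= max_gap:
            pairs.append((t, nearest))
    return pairs

camera_ts = [0.00, 0.033, 0.066, 0.100, 0.400]
lidar_ts = [0.00, 0.10, 0.20]
pairs = pair_by_timestamp(camera_ts, lidar_ts)
print(pairs)
```

In this toy run the camera frame at 0.400 s has no LiDAR sweep within the tolerance and is left unpaired, while a single sweep may be shared by more than one camera frame.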

How Can We Help?

Digital Divide Data empowers AI/ML innovation by providing high-quality, human-annotated training data at scale. Here’s how we help autonomous driving companies solve annotation challenges.

Scalable, High-Precision Data Annotation

DDD specializes in large-scale data annotation services, including pixel-level labeling, object instance tagging, and 3D point cloud segmentation. These services are essential for training deep learning models to recognize and distinguish urban objects such as pedestrians, vehicles, road signs, and infrastructure under complex city conditions.

By integrating quality assurance workflows and domain-specific training for its workforce, DDD ensures that the labeled data used to train semantic and instance segmentation models meets industry standards for accuracy and consistency. This is particularly vital for safety-critical applications in autonomous driving.

Support for Multi-Modal and Diverse Urban Datasets

Modern autonomous systems rely on multi-sensor data fusion (e.g., LiDAR, RGB, radar). DDD supports annotation across these data types, enabling robust fusion-based segmentation models. Furthermore, DDD’s work often emphasizes geographic and environmental diversity, contributing to the development of models capable of generalizing across varied urban landscapes.

Enabling Rare Class Detection through Dataset Balancing

Rare but critical classes like emergency vehicles, construction zones, or atypical road behaviors are often underrepresented in datasets. DDD supports dataset balancing by sourcing, curating, and annotating niche scenarios, thus enabling models to recognize low-frequency but high-impact elements critical to safe driving.
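Before rebalancing, it helps to measure how underrepresented a class actually is. The sketch below is a generic audit, not a description of DDD's tooling: it computes each class's share of labeled pixels and flags classes below a threshold. The class names, the `threshold`, and both helper functions are assumptions for illustration.

```python
from collections import Counter

def class_pixel_share(label_maps, class_names):
    """Return each class's share of all labeled pixels across the dataset."""
    counts = Counter()
    for label_map in label_maps:
        for row in label_map:
            counts.update(row)
    total = sum(counts.values())
    return {name: counts[i] / total for i, name in enumerate(class_names)}

def flag_rare(shares, threshold=0.05):
    """List classes whose pixel share falls below the threshold."""
    return sorted(name for name, share in shares.items() if share < threshold)

CLASS_NAMES = ["road", "car", "emergency_vehicle"]  # hypothetical classes
label_maps = [
    [[0, 0, 0, 1, 1], [0, 0, 0, 1, 1]],
    [[0, 0, 0, 0, 0], [0, 1, 1, 1, 2]],
    [[0, 0, 0, 0, 0], [0, 0, 0, 0, 0]],
]
shares = class_pixel_share(label_maps, CLASS_NAMES)
rare = flag_rare(shares)
print(shares)
print(rare)
```

Classes flagged this way are candidates for targeted sourcing and annotation of additional scenes.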

Leveraging Human-in-the-Loop Processes

DDD incorporates human-in-the-loop methodologies in annotation workflows, particularly for edge cases common in urban scenes such as occluded pedestrians, irregular vehicle shapes, and ambiguous infrastructure. This hybrid approach, combining automated tools with skilled human reviewers, greatly improves annotation accuracy for complex urban segmentation datasets.

Conclusion

Urban driving scenes introduce significant challenges: occlusions, inconsistent lighting, sensor noise, and the need for real-time decision-making all push the limits of segmentation models. Overcoming these challenges requires more than just algorithmic sophistication; it demands high-quality annotated data, diverse and well-balanced datasets, and scalable workflows that integrate human expertise into the AI development lifecycle.

The evolution of semantic and instance segmentation techniques continues to play a critical role in advancing autonomous driving technologies. By addressing the inherent challenges of urban environments through innovative model architectures and data integration strategies, the field moves closer to realizing fully autonomous vehicles capable of safe and efficient navigation in complex cityscapes.

If your team is building perception systems for autonomous driving, let’s talk. We’re here to help you turn visual complexity into safe, actionable intelligence.

Let DDD power your computer vision pipeline with high-quality, real-world segmentation data. Talk to our experts today.

References:

Zou, Y., Weinacker, H., & Koch, B. (2021). Towards urban scene semantic segmentation with deep learning from LiDAR point clouds: A case study in Baden-Württemberg, Germany. Remote Sensing, 13(16), 3220. https://doi.org/10.3390/rs13163220

Vobecky, A., et al. (2025). Unsupervised semantic segmentation of urban scenes via cross-modal distillation. International Journal of Computer Vision. https://doi.org/10.1007/s11263-024-02320-3

FAQs

1. How is segmentation different from object detection in autonomous driving?

While object detection identifies and localizes objects using bounding boxes, segmentation provides a much finer level of detail by classifying every pixel. This pixel-level understanding helps autonomous vehicles interpret the shape, boundary, and precise position of objects, which is essential for tasks like lane following or obstacle avoidance.
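The difference in detail can be seen by reducing a segmentation mask to the bounding box a detector would report. In the sketch below, the `mask_to_box` helper and the toy mask are assumptions for illustration; the point is that the box covers pixels that do not belong to the object.

```python
def mask_to_box(mask):
    """Return the tight bounding box (x_min, y_min, x_max, y_max) of a mask.

    mask: rows of 0/1 values. Returns None for an empty mask.
    """
    coords = [
        (x, y)
        for y, row in enumerate(mask)
        for x, value in enumerate(row)
        if value
    ]
    if not coords:
        return None
    xs, ys = zip(*coords)
    return min(xs), min(ys), max(xs), max(ys)

mask = [
    [0, 0, 1, 0],
    [0, 1, 1, 0],
    [1, 1, 1, 0],
]
box = mask_to_box(mask)
box_area = (box[2] - box[0] + 1) * (box[3] - box[1] + 1)
mask_area = sum(map(sum, mask))
print(box, box_area, mask_area)
```

Here the box spans 9 pixels while the object itself covers 6, so a third of the box is background that only the mask can rule out.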

2. What role does synthetic data play in training segmentation models?

Synthetic data, generated from simulations or video game engines, is increasingly used to augment real-world datasets. It helps address class imbalances, rare scenarios, and edge cases while reducing the time and cost of manual annotation. However, models trained on synthetic data still require fine-tuning on real-world datasets to generalize effectively.

3. How do segmentation models handle moving objects versus static ones?

Segmentation itself is agnostic to motion; it labels objects based on appearance in a single frame. However, when used in video sequences, segmentation can be combined with tracking algorithms or temporal models to identify which objects are moving and predict their future positions.

4. Is instance segmentation always better than semantic segmentation for autonomous vehicles?

Not necessarily. Instance segmentation provides more detail, but it is also more computationally intensive. In some applications, such as identifying the road surface or traffic signs, semantic segmentation is sufficient and more efficient. The choice depends on the task’s complexity, the required level of detail, and hardware constraints.