DDD Solutions Engineering Team

26 Aug, 2025

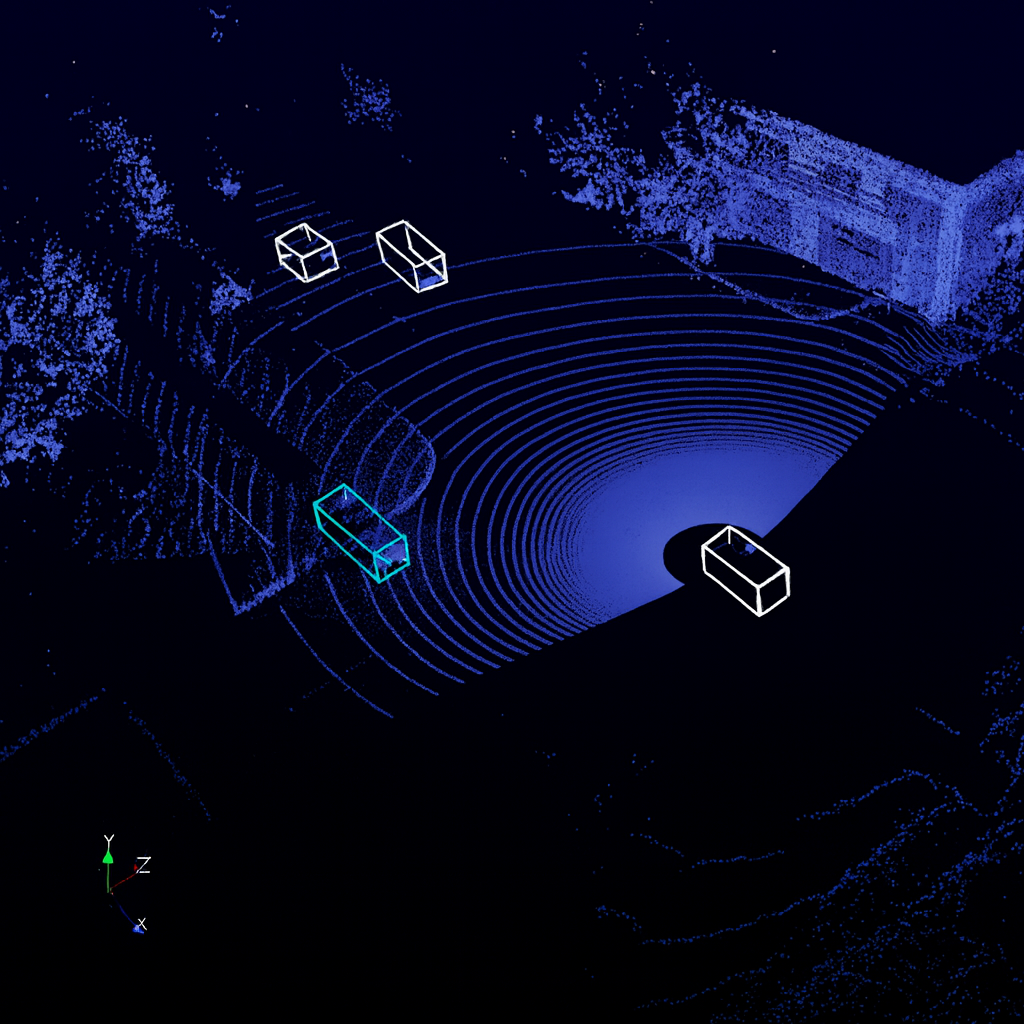

Autonomous vehicles rely on a sophisticated understanding of their surroundings, and one of the most critical inputs comes from 3D point clouds generated by LiDAR and radar sensors. These point clouds capture the environment in three dimensions, providing precise spatial information about objects, distances, and surfaces. Unlike traditional images, point clouds offer depth and structure, which are essential for safe navigation in dynamic and unpredictable road conditions.

To make sense of these vast collections of raw points, annotation plays a vital role. Annotation transforms unstructured data into labeled datasets that machine learning models can use to detect and classify vehicles, pedestrians, cyclists, traffic signs, and other key elements of the driving environment. Without accurate and consistent annotations, even the most advanced algorithms struggle to effectively interpret sensor inputs.

This article examines why 3D point cloud annotation is critical to autonomous driving, the challenges it presents, and the emerging methods that are advancing safe and scalable self-driving technology.

Importance of 3D Point Cloud Annotation in Autonomous Driving

For autonomous vehicles, perception is the foundation of safe and reliable operation. Annotated 3D point clouds are at the heart of this perception layer. By converting raw LiDAR or radar data into structured, labeled information, they enable machine learning models to identify, classify, and track the elements of a scene with high precision. Vehicles, pedestrians, cyclists, road signs, barriers, and even subtle changes in road surface can all be mapped into categories that a self-driving system can interpret and act upon.

Unlike flat images, point clouds provide depth, scale, and accurate spatial relationships between objects. This makes them particularly valuable in addressing real-world complexities such as occlusion, where one object partially blocks another, or variations in size and distance that 2D cameras can misinterpret. For example, a child stepping into the road may be partially obscured by a parked car and easy to miss in an image, but in a point cloud, the returns from the visible part of the child sit at a different depth from the car, so the geometry still reveals their presence.

High-quality data annotations also accelerate model training and validation. Clean, well-structured datasets improve detection accuracy and reduce the amount of training time required to achieve robust performance. They allow developers to identify gaps in model behavior earlier and adapt quickly, which shortens the development cycle. As autonomous vehicles expand into new environments with varying road structures, lighting conditions, and weather, annotated point clouds provide the adaptability and resilience needed to maintain safety and reliability.

Major Challenges in 3D Point Cloud Annotation

While 3D point cloud annotation is indispensable for autonomous driving, it brings with it a series of technical and operational challenges that make it one of the most resource-intensive stages of the development pipeline.

Data Complexity

Point clouds are inherently sparse and irregular, with millions of points scattered across three-dimensional space. Unlike structured image grids, each frame of LiDAR data contains points of varying density depending on distance, reflectivity, and sensor placement. Annotators must interpret this irregular distribution to label objects accurately, which requires advanced tools and highly trained personnel.
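
To make the density variation concrete, the following is a minimal sketch that counts how many points of a frame fall into each range band around the sensor. The function name `points_per_range_bin`, the 10 m bin width, and the four sample points are illustrative assumptions, not part of any specific LiDAR toolkit.

```python
import math
from collections import Counter


def points_per_range_bin(points, bin_width_m=10.0):
    """Count points per horizontal-range bin, measured from the sensor origin.

    `points` is an iterable of (x, y, z) tuples in metres, with the sensor
    assumed to sit at (0, 0, 0).
    """
    counts = Counter()
    for x, y, _z in points:
        horizontal_range = math.hypot(x, y)
        counts[int(horizontal_range // bin_width_m)] += 1
    # Map each bin index to the distance at which the bin starts.
    return {b * bin_width_m: counts[b] for b in sorted(counts)}


# Hypothetical frame: two near points, one mid-range point, one far point.
frame = [(2.0, 1.0, -1.5), (5.0, 0.5, -1.4), (12.0, 3.0, 0.2), (48.0, -7.0, 0.9)]
print(points_per_range_bin(frame))
```

On real frames, a histogram like this typically shows far more points close to the vehicle than at long range, which is why distant objects are harder to label.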

Annotation Cost

The process of labeling 3D data is significantly more time-consuming than annotating images. Creating bounding boxes or segmentation masks in three dimensions requires precise adjustments and careful validation. Given the massive number of frames collected in real-world driving scenarios, the cost of manual annotation quickly escalates, making scalability a major concern for companies building autonomous systems.
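
One reason 3D boxes take longer to adjust than 2D boxes is the number of parameters involved. The sketch below assumes a common seven-parameter representation (center, size, and yaw about the vertical axis) and checks which points fall inside a box. The `Box3D` class and its field names are hypothetical and do not follow any particular dataset format.

```python
import math
from dataclasses import dataclass


@dataclass
class Box3D:
    """Hypothetical 3D box: center, size, and heading (yaw) in radians."""
    cx: float
    cy: float
    cz: float
    length: float  # extent along the box's own x axis
    width: float   # extent along the box's own y axis
    height: float  # extent along z
    yaw: float
    label: str

    def contains(self, point):
        """Return True if the (x, y, z) point lies inside the box."""
        dx = point[0] - self.cx
        dy = point[1] - self.cy
        dz = point[2] - self.cz
        # Rotate the offset by -yaw to express it in the box's own frame.
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        local_x = c * dx + s * dy
        local_y = -s * dx + c * dy
        return (
            abs(local_x) <= self.length / 2
            and abs(local_y) <= self.width / 2
            and abs(dz) <= self.height / 2
        )


def count_points_in_box(box, points):
    """Count how many points the box encloses."""
    return sum(1 for p in points if box.contains(p))
```

A point count like this is a cheap sanity check: a box that encloses almost no points is usually misplaced or rotated incorrectly.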

Ambiguity in Boundaries

Real-world conditions often introduce uncertainty into point cloud data. Objects may be partially occluded, scanned from an angle that leaves gaps, or overlapped with other objects. In dense urban environments, for example, bicycles, pedestrians, and traffic poles can merge into a single cluster of points. Defining clear and consistent boundaries under such circumstances is one of the most difficult challenges in 3D annotation.

Multi-Sensor Fusion

Autonomous vehicles rarely rely on a single sensor. LiDAR, radar, and cameras are often fused to achieve robust perception. Aligning annotations across these modalities introduces additional complexity. A bounding box drawn on a LiDAR point cloud must correspond precisely to its representation in an image frame, requiring synchronization and calibration across different sensor outputs.
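
The correspondence between a LiDAR annotation and an image depends on the calibration between the two sensors. As a rough illustration, the sketch below projects a LiDAR point into pixel coordinates with a pinhole camera model. The axis conventions, the rotation and translation values, and the intrinsics (`fx`, `fy`, `cx`, `cy`) are all assumed for the example; real values come from the vehicle's calibration files.

```python
# Assumed LiDAR frame: x forward, y left, z up.
# Assumed camera frame: x right, y down, z forward (optical axis).
R_CAM_FROM_LIDAR = [
    [0.0, -1.0, 0.0],
    [0.0, 0.0, -1.0],
    [1.0, 0.0, 0.0],
]
T_CAM_FROM_LIDAR = [0.0, 0.0, 0.0]  # hypothetical: sensors at the same position


def project_to_image(point, rotation=R_CAM_FROM_LIDAR, translation=T_CAM_FROM_LIDAR,
                     fx=1000.0, fy=1000.0, cx=640.0, cy=360.0):
    """Project a LiDAR-frame (x, y, z) point to (u, v) pixel coordinates.

    Returns None when the point lies behind the camera.
    """
    # Extrinsics: move the point from the LiDAR frame into the camera frame.
    x, y, z = (
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    )
    if z <= 0.0:
        return None
    # Intrinsics: perspective division, then scale and shift into pixels.
    return (fx * x / z + cx, fy * y / z + cy)


print(project_to_image((10.0, 1.0, 0.5)))
```

Projecting the eight corners of a 3D box in the same way gives the outline that should overlap the object in the camera frame. A visible offset usually points to a calibration or time-synchronization problem.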

Scalability

Autonomous vehicle datasets encompass millions of frames recorded in diverse geographies, traffic conditions, and weather scenarios. Scaling annotation pipelines to handle this volume while maintaining consistent quality across global teams is a persistent challenge. The need to capture edge cases, such as unusual objects or rare driving scenarios, further amplifies the workload.

Together, these challenges explain why annotation has become one of the most resource-intensive stages of autonomous vehicle development, and also one of its most active areas of innovation.

Emerging Solutions for 3D Point Cloud Annotation

Although 3D point cloud annotation has long been seen as a bottleneck, recent breakthroughs are reshaping how data is labeled and accelerating the development of autonomous driving systems.

Advanced Tooling

Modern annotation platforms now integrate intuitive 3D visualization, semi-automated labeling, and built-in quality assurance features. These tools reduce manual effort by allowing annotators to manipulate 3D objects more efficiently and by embedding validation steps directly into the workflow. Cloud-based infrastructure also makes it possible to scale projects across distributed teams without sacrificing performance.

Weak and Semi-Supervision

Rather than requiring dense, frame-by-frame annotations, weak and semi-supervised methods enable models to learn from partially labeled or sparsely annotated datasets. This can substantially reduce the time and cost of data preparation while still delivering strong performance, especially when combined with active selection of the most valuable frames.
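
Pseudo-labeling is one common semi-supervised technique: a model trained on the labeled subset predicts on unlabeled frames, and only its confident predictions are kept as training labels. The sketch below shows that filtering step under simple assumptions. The prediction format (a dict with `label` and `score`) and the 0.9 threshold are hypothetical.

```python
def split_pseudo_labels(predictions, min_confidence=0.9):
    """Split model predictions into accepted pseudo-labels and the rest.

    Each prediction is assumed to be a dict with a "label" and a "score"
    between 0 and 1.
    """
    accepted, rejected = [], []
    for pred in predictions:
        if pred["score"] >= min_confidence:
            accepted.append(pred)
        else:
            rejected.append(pred)
    return accepted, rejected


# Hypothetical predictions on one unlabeled frame.
preds = [
    {"label": "car", "score": 0.97},
    {"label": "cyclist", "score": 0.55},
    {"label": "pedestrian", "score": 0.91},
]
accepted, rejected = split_pseudo_labels(preds)
print([p["label"] for p in accepted], [p["label"] for p in rejected])
```

The threshold is a trade-off: a higher value yields fewer but cleaner pseudo-labels, while a lower value adds more data along with more label noise.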

Self-Supervision and Pretraining

Self-supervised learning techniques leverage vast amounts of unlabeled data to pretrain models that can later be fine-tuned with smaller, labeled datasets. In the context of point clouds, this means autonomous systems can benefit from large-scale sensor data without requiring exhaustive manual labeling at the outset.

Active Learning

Active learning strategies identify the most informative or uncertain frames within a dataset and prioritize them for annotation. This ensures that human effort is concentrated where it has the greatest impact, improving model performance while reducing redundant labeling of straightforward cases.
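
A simple way to rank frames is by the uncertainty of the model's class predictions. The sketch below uses entropy as the uncertainty measure and takes the most uncertain detection in each frame as that frame's score. The scoring rule, the function names, and the sample probabilities are assumptions for illustration; production systems often combine several signals.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def frame_uncertainty(detections):
    """Score a frame by its most uncertain detection (0.0 if it has none)."""
    return max((entropy(d) for d in detections), default=0.0)


def select_frames(frame_predictions, budget):
    """Return the `budget` frame ids with the highest uncertainty."""
    ranked = sorted(
        frame_predictions,
        key=lambda frame_id: frame_uncertainty(frame_predictions[frame_id]),
        reverse=True,
    )
    return ranked[:budget]


# Hypothetical per-frame class probabilities for each detected object.
frames = {
    "frame_a": [[0.98, 0.01, 0.01]],
    "frame_b": [[0.40, 0.35, 0.25]],
    "frame_c": [[0.90, 0.05, 0.05], [0.50, 0.50, 0.00]],
}
print(select_frames(frames, budget=2))
```

Here `frame_a` is left out of the annotation queue because the model is already confident about its single detection.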

Vision-Language Models (VLMs)

The emergence of multimodal AI models has opened the door to annotation guided by language and contextual cues. By leveraging descriptions of objects and scenes, VLMs can assist in disambiguating complex or ambiguous point clusters and speed up labeling in real-world driving scenarios.

Auto-Annotation and Guideline-Driven Labeling

Automated approaches are increasingly capable of translating annotation rules and specifications into machine-executed labeling. This allows teams to encode their quality standards into the system itself, producing annotations that are both consistent and scalable, while reserving human input for validation and correction.

Industry Applications for 3D Point Cloud Annotation

The advancements in 3D point cloud annotation directly translate into measurable benefits across the autonomous vehicle industry. As vehicles move closer to large-scale deployment, these applications demonstrate why precise annotation is indispensable.

Improved Safety

Reliable annotations strengthen the perception systems that detect and classify objects in complex environments. Better training data reduces false positives and missed detections, which are critical for preventing accidents and ensuring passenger safety in unpredictable traffic scenarios.

Faster Development Cycles

Annotated point clouds streamline model development by providing high-quality datasets that can be reused across experiments and iterations. With faster access to labeled data, research and engineering teams can test new architectures, validate updates, and deploy improvements more quickly. This efficiency shortens time to market and accelerates progress toward fully autonomous driving.

Cost Efficiency

Annotation breakthroughs such as weak supervision, automation, and active learning significantly reduce the burden of manual labeling. Companies can achieve the same or better levels of accuracy while investing fewer resources, making large-scale projects more financially sustainable.

Global Scalability

Autonomous vehicles must perform reliably across diverse geographies, weather conditions, and infrastructure. Scalable annotation pipelines enable datasets to cover everything from dense urban intersections to rural highways, ensuring that systems adapt effectively to regional variations. This global adaptability is essential for building AVs that can operate safely across the full range of environments in which they are deployed.

Recommendations for 3D Point Cloud Annotation in Autonomous Vehicles

As the autonomous vehicle ecosystem continues to expand, organizations must balance innovation with practical strategies for building reliable annotation pipelines. The following recommendations can help teams maximize the value of 3D point cloud data while managing cost and complexity.

Adopt Hybrid Approaches

A combination of automated annotation tools and human quality assurance offers the most efficient path forward. Automated systems can handle repetitive labeling tasks, while human reviewers focus on complex cases and edge scenarios that require nuanced judgment.

Leverage Active Learning

Instead of labeling entire datasets, prioritize frames that provide the greatest improvement to model performance. Active learning helps reduce redundancy by focusing human effort on challenging or uncertain examples, leading to faster gains in accuracy.

Invest in Scalable Infrastructure

Annotation platforms must be capable of handling multi-sensor data, large volumes, and distributed teams. Building a scalable infrastructure ensures that as datasets grow, quality and consistency do not degrade.

Establish Clear Annotation Guidelines

Consistency across large teams requires well-documented guidelines that define how to label objects, resolve ambiguities, and enforce quality standards. Strong documentation minimizes errors and ensures that annotations remain uniform across projects and regions.
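
Parts of a written guideline can also be expressed as machine-checkable rules, so that obvious violations are caught before human review. The sketch below checks box dimensions against per-class size ranges. The classes, the ranges in `GUIDELINES`, and the annotation format are hypothetical placeholders; real limits would come from the project's own guideline document.

```python
# Hypothetical size limits in metres, as (minimum, maximum) per dimension.
GUIDELINES = {
    "car": {"length": (3.0, 6.0), "width": (1.4, 2.5), "height": (1.2, 2.2)},
    "pedestrian": {"length": (0.2, 1.2), "width": (0.2, 1.2), "height": (0.8, 2.2)},
}


def check_annotation(annotation, guidelines=GUIDELINES):
    """Return a list of guideline violations for one annotation (empty if none)."""
    label = annotation["label"]
    rules = guidelines.get(label)
    if rules is None:
        return [f"unknown label: {label}"]
    issues = []
    for dim, (low, high) in rules.items():
        value = annotation[dim]
        if not low <= value <= high:
            issues.append(f"{dim}={value} outside [{low}, {high}] for {label}")
    return issues


# A box labeled "car" that is too long to be a passenger car.
suspicious = {"label": "car", "length": 9.0, "width": 2.0, "height": 1.6}
print(check_annotation(suspicious))
```

Checks of this kind do not replace reviewer judgment, but they keep reviewers focused on the ambiguous cases the guideline describes in prose.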

Stay Aligned with Safety and Regulatory Standards

Emerging regulations in the US and Europe increasingly focus on data transparency, model explainability, and safety validation. Annotation workflows should be designed to align with these requirements, ensuring that datasets meet the expectations of both regulators and end-users.

How We Can Help

Building and maintaining high-quality 3D point cloud annotation pipelines requires expertise, scale, and rigorous quality control. Digital Divide Data (DDD) is uniquely positioned to support autonomous vehicle companies.

We have deep experience in handling large-scale annotation projects, including 2D, 3D, and multi-sensor data. Our teams are trained to work with advanced annotation platforms and can manage intricate tasks such as 3D segmentation, object tracking, and sensor fusion labeling.

We design workflows tailored to the specific needs of autonomous driving projects. Whether the requirement is bounding boxes for vehicles, semantic segmentation of urban environments, or cross-modal annotations combining LiDAR, radar, and camera inputs, DDD adapts processes to match project goals.

By partnering with DDD, autonomous vehicle developers can accelerate dataset preparation, reduce annotation costs, and improve the quality of their perception systems, all while maintaining flexibility and control over project outcomes.

Conclusion

3D point cloud annotation provides the foundation for perception systems that must identify, classify, and track objects in complex, real-world environments. At the same time, the process brings challenges related to data complexity, annotation cost, scalability, and cross-sensor integration. These hurdles have long made annotation one of the most resource-intensive aspects of building self-driving systems.

Yet the field is rapidly evolving. Advances in tooling, semi-supervised learning, self-supervision, active learning, and automated guideline-driven labeling are transforming how data is prepared. What was once a bottleneck is increasingly becoming an area of innovation, enabling companies to train more accurate models with fewer resources and shorter development cycles.

As the industry looks toward global deployment of autonomous vehicles, the ability to scale annotation pipelines while maintaining precision and compliance will remain essential. By combining emerging breakthroughs with practical strategies and expert partners, organizations can ensure that their systems are safe, efficient, and ready for real-world conditions.

Continued innovation in 3D point cloud annotation will be key to unlocking the next generation of safe, reliable, and scalable autonomous driving.

Partner with us to accelerate your autonomous vehicle development with precise, scalable, and cost-efficient 3D point cloud annotation.

FAQs

Q1. What is the difference between LiDAR and radar point cloud annotation?

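LiDAR generates comparatively dense, high-resolution 3D data that captures fine object details, so its annotation focuses on precise object shapes and boundaries. Radar provides sparser returns but measures distance and relative velocity reliably, even in poor weather, so its annotation focuses more on object presence and motion. Annotation strategies often combine both to create more robust datasets.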

Q2. How do annotation errors affect autonomous vehicle systems?

Annotation errors can propagate into model training, leading to misclassification, missed detections, or unsafe driving decisions. Even small inconsistencies can reduce overall system reliability, which is why rigorous quality assurance is essential.
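
One common quality-assurance check is to have two annotators label the same object and measure how much their boxes overlap. The sketch below computes intersection over union (IoU) for axis-aligned 3D boxes. It ignores box rotation to stay short, which is a simplification, and the box format and sample values are assumptions.

```python
def axis_aligned_iou_3d(box_a, box_b):
    """IoU of two axis-aligned boxes given as (xmin, ymin, zmin, xmax, ymax, zmax)."""
    intersection = 1.0
    for axis in range(3):
        overlap = min(box_a[axis + 3], box_b[axis + 3]) - max(box_a[axis], box_b[axis])
        if overlap <= 0.0:
            return 0.0  # the boxes do not overlap along this axis
        intersection *= overlap

    def volume(box):
        return (box[3] - box[0]) * (box[4] - box[1]) * (box[5] - box[2])

    union = volume(box_a) + volume(box_b) - intersection
    return intersection / union


# Hypothetical boxes from two annotators labeling the same car.
annotator_1 = (0.0, 0.0, 0.0, 4.0, 2.0, 2.0)
annotator_2 = (1.0, 0.0, 0.0, 5.0, 2.0, 2.0)
print(axis_aligned_iou_3d(annotator_1, annotator_2))
```

A one-metre shift along the length of a four-metre box already drops the IoU to about 0.6, which shows how sensitive 3D agreement is to small placement differences.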

Q3. Can open-source tools handle large-scale 3D point cloud annotation projects?

Open-source platforms provide flexibility and accessibility but often lack the scalability, security, and integrated quality controls required for production-level autonomous driving projects. Enterprises typically combine open-source foundations with custom or commercial solutions.

Q4. How is synthetic data used in 3D point cloud annotation?

Synthetic point clouds generated from simulations or digital twins can supplement real-world data, especially for rare or hazardous scenarios that are difficult to capture naturally. These datasets reduce reliance on manual annotation and broaden model training coverage.

Q5. What role do regulations play in point cloud annotation for autonomous vehicles?

US and EU regulations increasingly emphasize traceability, safety validation, and data governance. Annotation pipelines must meet these standards to ensure that labeled datasets are consistent, transparent, and compliant with evolving legal frameworks.