Data Orchestration That Keeps Pipelines Moving, Reliably and at Scale

Delivering enterprise-grade data orchestration services that coordinate complex workflows across data preparation, engineering, and analytics.

Data Orchestration Use Cases We Support

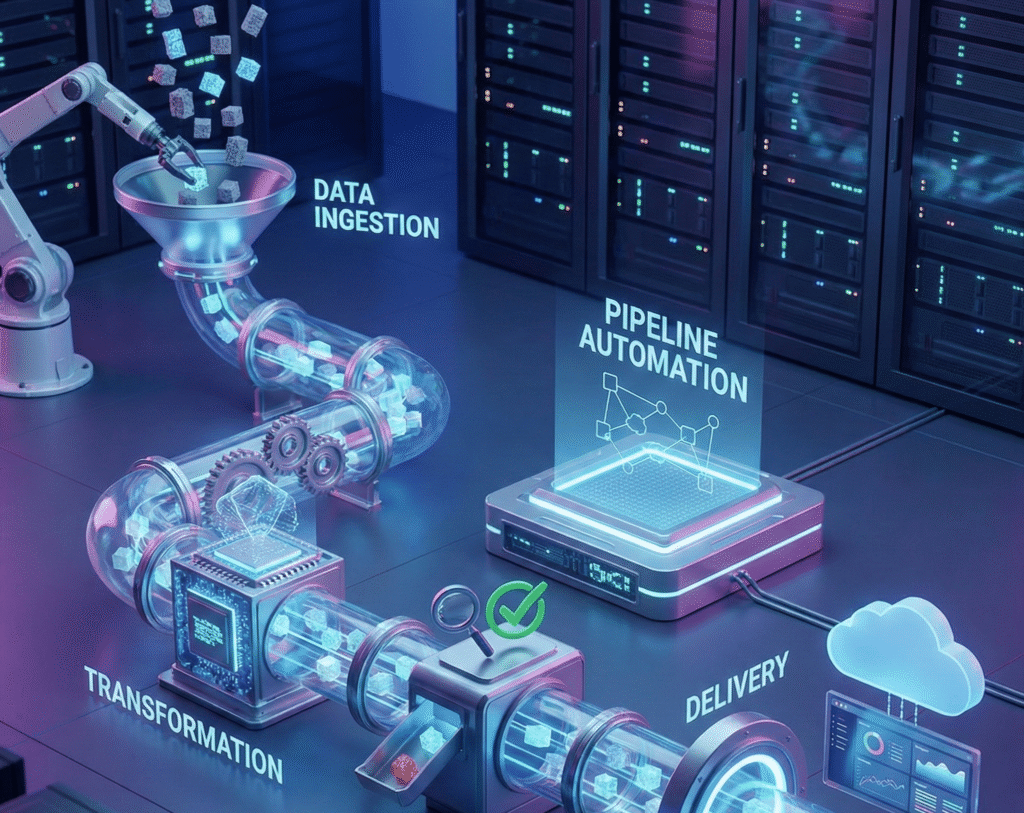

Coordinate data ingestion, transformation, validation, and delivery across complex pipelines.

Ensure timely, reliable data flows that support model training, inference, and analytics reporting.

Automate scheduled and event-driven pipelines with auditability and reliability.

Detect pipeline issues early and trigger retries, alerts, and remediation workflows.

Monitor, manage, and optimize pipelines continuously as volumes and complexity grow.

End-to-End Data Orchestration Workflow

Whether you need one-time pipeline automation or an ongoing Data as a Service model, DDD manages the full orchestration lifecycle:

Understand data dependencies, timing requirements, failure risks, and downstream consumers.

Define workflow structure, triggers, scheduling logic, and dependency management.
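Dependency management mostly comes down to running each task only after everything it depends on has finished. The sketch below is illustrative only and is not DDD's implementation. It uses the standard-library `graphlib` module (Python 3.9+) to turn a hypothetical pipeline (`ingest`, `validate`, `transform`, `train_model`, `publish_report`, all invented names) into a valid run order and into "waves" of tasks that could run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE: dict[str, set[str]] = {
    "ingest": set(),
    "validate": {"ingest"},
    "transform": {"validate"},
    "train_model": {"transform"},
    "publish_report": {"transform"},
}


def execution_order(dag: dict[str, set[str]]) -> list[str]:
    """Return one valid sequential run order.

    Raises graphlib.CycleError (a ValueError subclass) if dependencies loop.
    """
    return list(TopologicalSorter(dag).static_order())


def parallel_batches(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into waves; tasks in the same wave do not depend on each other."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # also raises CycleError on circular dependencies
    batches: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies of these tasks are done
        batches.append(ready)
        sorter.done(*ready)  # mark the wave complete so its dependents unlock
    return batches


if __name__ == "__main__":
    print(execution_order(PIPELINE))
    print(parallel_batches(PIPELINE))
```

For this example pipeline, `parallel_batches` returns `[['ingest'], ['validate'], ['transform'], ['publish_report', 'train_model']]`: the last two tasks share a single upstream dependency, so an orchestrator could run them concurrently. Catching a cycle at definition time, before anything runs, is one reason to model workflows as explicit dependency graphs.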

Connect orchestration layers with data preparation, engineering, and analytics systems.

Implement time-based, event-driven, and conditional workflows across pipelines.
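The three trigger styles can work together. In the minimal sketch below, which rests on assumptions rather than describing any specific orchestration product, an event (new input arriving) or an elapsed schedule interval fires a run, and a condition then decides whether that run actually proceeds. `PipelineState`, `decide`, `interval`, and `min_rows` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class PipelineState:
    """Hypothetical snapshot an orchestrator might evaluate on each tick."""
    last_run: Optional[datetime]
    upstream_row_count: int
    pending_events: list[str] = field(default_factory=list)  # e.g. new file names


def decide(state: PipelineState, now: datetime,
           interval: timedelta = timedelta(hours=1),
           min_rows: int = 1) -> tuple[str, str]:
    """Return (action, trigger) where action is 'run', 'skip', or 'wait'."""
    # 1. Event-driven trigger: new input has arrived since the last run.
    if state.pending_events:
        trigger = "event"
    # 2. Time-based trigger: the schedule interval has elapsed (or it never ran).
    elif state.last_run is None or now - state.last_run >= interval:
        trigger = "schedule"
    else:
        return "wait", "none"
    # 3. Conditional gate: a trigger fired, but only run if upstream data qualifies.
    if state.upstream_row_count < min_rows:
        return "skip", trigger
    return "run", trigger


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0)
    state = PipelineState(now - timedelta(minutes=10), 500, ["orders.csv"])
    print(decide(state, now))
```

Here the event check takes precedence over the schedule. That precedence is a design choice for this sketch only; other setups might give the schedule priority or debounce bursts of events.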

Track pipeline health, performance, and failures with proactive alerts.

Configure retries, fallbacks, and escalation workflows to minimize downtime.
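One common way to layer these mechanisms is to retry transient failures with exponential backoff, switch to a fallback if retries run out, and escalate only when both fail. The sketch below shows that pattern with the standard library. `run_with_recovery` and its parameters are hypothetical, and the `escalate` hook stands in for whatever alerting or ticketing a real deployment would use. `sleep` is injectable so the backoff can be tested without waiting.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def run_with_recovery(
    task: Callable[[], T],
    fallback: Optional[Callable[[], T]] = None,
    escalate: Callable[[Exception], None] = lambda exc: None,
    max_retries: int = 3,          # assumed >= 0
    base_delay: float = 1.0,       # seconds before the first retry
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `task` with exponential backoff, then try `fallback`, then escalate."""
    last_error: Optional[Exception] = None
    for attempt in range(max_retries + 1):
        try:
            return task()
        except Exception as exc:  # a real pipeline would catch narrower, transient errors
            last_error = exc
            if attempt < max_retries:
                sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ... with the defaults
    if fallback is not None:
        try:
            return fallback()  # e.g. serve the last good snapshot
        except Exception as exc:
            last_error = exc
    escalate(last_error)  # e.g. page the on-call engineer or open an incident
    raise last_error
```

With the defaults, a task that fails twice and then succeeds waits 1 second and then 2 seconds before returning normally. Re-raising after escalation keeps the failure visible to the scheduler, so downstream tasks do not run on missing data.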

Ensure traceability, logging, and compliance across all pipeline executions.

Refine workflows to improve reliability, performance, and cost efficiency over time.

Industries We Support

Cultural Heritage

Orchestrating multi-stage pipelines that process and preserve large archival datasets.

Financial Services

Delivering reliable, auditable orchestration for analytics and reporting workflows.

Healthcare

Managing sensitive data pipelines with strict scheduling, reliability, and compliance requirements.

What Our Clients Say

DDD’s data orchestration services significantly reduced pipeline failures and improved reporting reliability.

Their enterprise data orchestration approach gave us confidence in our data delivery timelines.

DDD helped us coordinate complex pipelines across systems without adding operational overhead.

They brought structure, monitoring, and reliability to pipelines we struggled to manage internally.

Why Choose DDD?

Scheduling, monitoring, and automated failure handling are embedded into every workflow to keep pipelines running on time.

Data Orchestration Built for Reliability, Scale, and Control

Frequently Asked Questions

DDD’s data orchestration services coordinate and automate complex data workflows across preparation, engineering, and analytics to ensure reliable, timely data delivery.

Data engineering focuses on building pipelines and transformations, while data orchestration manages how, when, and in what order those pipelines run, including scheduling, dependencies, monitoring, and recovery.

Yes. Our enterprise data orchestration approach is platform-agnostic and designed to integrate with your existing tools and environments.

We embed scheduling logic, monitoring, alerting, retries, and failure-handling mechanisms into every orchestration workflow.

We enable operational transparency through detailed logging, monitoring dashboards, execution tracking, and performance metrics.

Security and governance are built into orchestration workflows, aligned with SOC 2 Type II, ISO 27001, GDPR, HIPAA, and TISAX requirements where applicable.