In this blog, we explore how Vision-Language-Action models are transforming the autonomy industry. We’ll trace how they evolved from...

Vision-Language-Action (VLA) Model Analysis Services

Our VLA Model Analysis Solutions

Multimodal Scenario Evaluation

Planning Validation

Edge Case Analysis

Our teams analyze edge cases such as low-light conditions, occlusions, rare object interactions, motion anomalies, and ambiguous instructions.

Insights are translated into targeted dataset improvements, optimized prompts, and retraining pipelines to strengthen real-world reliability and reduce operational risk.

VLA Benchmarking & Performance Scoring

Our scoring integrates both automated evaluation and structured human-in-the-loop reviews.

This creates a complete model performance profile designed to support regulatory readiness, fleet-level deployment, and scalable productization.

Continuous Model Improvement Pipeline

Human-in-the-Loop Review & Safety Validation

Grounded, Reliable, Deployment-Ready VLA Models

Industries We Serve

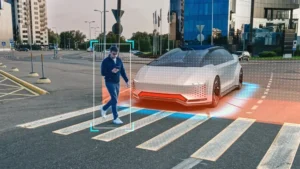

ADAS

Autonomous Driving

Robotics

Healthcare

Agriculture Technology

Humanoids

What Our Clients Say

DDD’s VLA evaluations helped us uncover hidden failure cases in our L3 ADAS stack and improve safety significantly.

Their multimodal testing accelerated our humanoid robot’s action-planning performance in real-world tasks.

We rely on DDD to validate our agricultural vision-language models for crop detection and autonomous operations.

Their benchmarks gave us a clear understanding of where our VLA agent struggled and how to fix it.

Why Choose DDD?

Read Our Latest Blogs

Why Accurate Vulnerable Road User (VRU) Detection Is Critical for Autonomous Vehicle Safety

This blog examines how detection precision, data diversity, and shared situational awareness are becoming the foundation for autonomous safety...

Overcoming the Challenges of Night Vision and Night Perception in Autonomy

In this blog, we will explore how to overcome the challenges of night vision and night perception in autonomy through...
