Human-in-the-Loop ML Data Annotation Services

Why Annotation Is Different for Physical AI

- Multi-sensor inputs (multi-camera rigs, LiDAR, radar, GPS/IMU)

- Complex, dynamic scenes (traffic, factories, warehouses, farms)

- Safety-critical edge cases (near-misses, occlusions, rare events)

DDD’s AI data annotation services are designed for Physical AI, combining specialized workflows, domain-trained teams, and rigorous QA to deliver trustworthy labels for perception, mapping, navigation, manipulation, and human–robot interaction.

What We Annotate for Physical AI

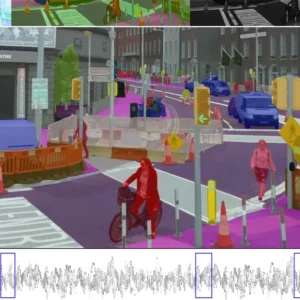

Perception Data (Vision, LiDAR, Multimodal)

- 2D Vision: Bounding boxes, polygons, and masks; instance & semantic segmentation; multi-frame tracking.

- LiDAR / 3D: Cuboids for agents and static objects; drivable vs. non-drivable space; infrastructure (poles, rails, docks, racks).

- Sensor Fusion: Consistent labels across camera–LiDAR projections and BEV views.

Supports AV/ADAS, mobile robots, drones, and inspection robots.
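
As an illustration of what "consistent labels across camera–LiDAR projections" can mean in practice, the sketch below projects a 3D cuboid center into an image with a simple pinhole model and checks that it lands inside the matching 2D box. It is a minimal sketch, not a description of DDD's tooling: the function names, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the assumption that the point has already been transformed into the camera frame are all hypothetical.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame to pixel coordinates.

    Assumes an ideal pinhole camera with no lens distortion.
    Returns None when the point is at or behind the image plane.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def center_inside_box(cuboid_center_cam, box_2d, fx, fy, cx, cy):
    """Check that a projected cuboid center falls inside a 2D box.

    box_2d is (x_min, y_min, x_max, y_max) in pixels.
    """
    pixel = project_point(cuboid_center_cam, fx, fy, cx, cy)
    if pixel is None:
        return False
    u, v = pixel
    x_min, y_min, x_max, y_max = box_2d
    return x_min <= u <= x_max and y_min <= v <= y_max


# Hypothetical example values: a cuboid center 10 m ahead of the camera.
center = (1.0, 0.5, 10.0)
box = (700, 380, 780, 440)
consistent = center_inside_box(center, box, fx=1000, fy=1000, cx=640, cy=360)
```

A check like this only flags gross mismatches between the 2D and 3D labels of one object; a real pipeline would also need calibrated extrinsics and distortion handling.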

Mapping & Localization Annotations

- Road & Outdoor: Lanes, crosswalks, stop lines, traffic lights, signs, and barriers.

- Indoor: Aisles, docking zones, shelves/racks, markers, waypoints, and no-go zones.

- Structures: Walls, doors, stairs, loading bays, mezzanines, and SLAM landmarks.

Manipulation & Task-Level Labels

- Manipulation Annotations: Grasp points, affordances, contact surfaces, and keypoints on tools and parts.

- Task Phases: Approach, grasp, move, place, and verify, with success/failure states and error tags.

Human–Robot Interaction & Safety Labels

- Human Pose & Safety: Human pose/keypoints, proximity zones, and dynamic safety envelopes.

- Gestures & Intent: Hand signals and direction of movement.

- Scenario Tags: Near-collision, obstruction, queueing, handover, and co-manipulation.

Telemetry, Logs & Event Annotation

- Robot State & Control Logs: Operating modes, control commands, and error states.

- Sensor Health & Diagnostics: Sensor status, faults, and diagnostic events.

- Environment Events: Door states, machine statuses, and alarms.

- Time-Aligned Episodes: Structured episodes for RL training, evaluation, and edge-case mining.
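
One way to picture a time-aligned episode is to attach each logged event to the sensor frame closest to it in time. The sketch below does this with a nearest-timestamp lookup. It is an illustrative sketch under stated assumptions: the function names, the event labels, and the 50 ms tolerance (`max_gap`) are hypothetical and do not describe any specific DDD workflow.

```python
from bisect import bisect_left


def nearest_frame(frame_times, event_time, max_gap):
    """Return the index of the frame closest in time to event_time.

    frame_times must be sorted in ascending order (seconds).
    Returns None if there are no frames or the closest frame is
    more than max_gap seconds away.
    """
    if not frame_times:
        return None
    i = bisect_left(frame_times, event_time)
    # Only the neighbors on either side of the insertion point can be closest.
    candidates = [j for j in (i - 1, i) if 0 <= j < len(frame_times)]
    best = min(candidates, key=lambda j: abs(frame_times[j] - event_time))
    if abs(frame_times[best] - event_time) > max_gap:
        return None
    return best


def build_episode(frame_times, events, max_gap=0.05):
    """Group (timestamp, label) events by their nearest frame index.

    Events with no frame within max_gap seconds are dropped.
    """
    episode = {i: [] for i in range(len(frame_times))}
    for timestamp, label in events:
        idx = nearest_frame(frame_times, timestamp, max_gap)
        if idx is not None:
            episode[idx].append(label)
    return episode


# Hypothetical 10 Hz frame timestamps and logged events.
frames = [0.0, 0.1, 0.2, 0.3]
events = [(0.11, "door_open"), (0.26, "alarm"), (0.9, "fault")]
episode = build_episode(frames, events)
```

Here the `fault` event is dropped because no frame lies within the tolerance; in practice such unmatched events would more likely be flagged for review than silently discarded.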

Industries We Serve

- ADAS

- Autonomous Driving

- Robotics

- Healthcare

What Our Clients Say

DDD delivered extremely consistent 3D annotations across LiDAR frames, something even our internal teams struggled to achieve.

Their agriculture image labeling drastically reduced our model drift and improved yield estimation accuracy across multiple crop cycles.

DDD transformed our raw vehicle sensor streams into production-ready annotations that accelerated our L2+ perception stack.

Their QA workflows and domain expertise made our robotics navigation model far more reliable in edge environments.

Why Choose DDD?

Annotators and leads are trained on robotics and autonomy concepts (ODD, sensor stacks, coordinate frames, safety envelopes) so they understand why labels matter, not just where to click.

We work comfortably with 3D tooling, bird’s-eye views, and multi-camera rigs, not just single 2D images, which is crucial for AVs, drones, and warehouse robots.

You get production-grade teams and QA that scale from a pilot of a few sequences to full-fleet, continuous annotation without sacrificing quality.

We integrate with your existing Physical AI toolchain, whether that is a commercial 3D/AV labeling platform, in-house data tools, or a GIS environment, with no forced platform migration.

We reinforce quality and safety through maker–checker workflows, gold tasks, targeted quality metrics, and clear escalation paths.

Read Our Latest Blogs

Multi-Modal Data Annotation for Autonomous Perception: Synchronizing LiDAR, RADAR, and Camera Inputs

This blog explores multi-modal data annotation for autonomy, focusing on the synchronization of LiDAR, RADAR, and camera inputs. Practical...

The Art of Data Annotation in Machine Learning

Data annotation has become a cornerstone in the development of AI and ML models. In this blog, we will...

Customer Success Stories

Accelerating ADAS Model Development through 2D and 3D Annotations

A leading autonomous vehicle manufacturer sought to enhance the safety and accuracy of its Advanced Driver Assistance Systems (ADAS).

Object Detection in LiDAR with 98% Quality Consistency

Although one of the more straightforward LiDAR data processing tasks, object boxing is still challenging. For applications like ADAS, the task requires extreme precision.
