This blog outlines how data labeling and real-world testing complement each other in the Autonomous Vehicle development lifecycle.

Performance Evaluation Services for Intelligent Systems

AI performance testing to measure how your product performs under real-world, extreme, and mission-critical conditions.

Transformative Performance Assessment for Physical AI

Digital Divide Data (DDD) designs and executes end-to-end performance evaluation programs that quantify robustness, accuracy, resilience, and real-world behavior. We help you understand how your product behaves in ideal scenarios and in the unpredictable environments where safety matters most.

Our Performance Evaluation Use Cases

Quantify accuracy, latency, interpretability, robustness, and degradation across environments, datasets, and model versions.

- Vision model robustness under lighting and weather shifts

- NLP model drift under domain changes

- Robotics perception accuracy under occlusions
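As a rough illustration of what quantifying degradation across environments can look like, the sketch below computes per-condition accuracy and the drop relative to a baseline condition. The record format, the condition names (`clear_day`, `night_rain`), and both function names are hypothetical and chosen only for this example; they do not describe DDD's actual tooling.

```python
from collections import defaultdict


def accuracy_by_condition(records):
    """Return accuracy per condition.

    records: iterable of (condition, predicted_label, expected_label).
    The tuple layout is an assumption made for this sketch.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for condition, predicted, expected in records:
        total[condition] += 1
        if predicted == expected:
            correct[condition] += 1
    return {c: correct[c] / total[c] for c in total}


def degradation(per_condition, baseline="clear_day"):
    """Accuracy drop of every non-baseline condition relative to the baseline."""
    base = per_condition[baseline]
    return {c: base - acc for c, acc in per_condition.items() if c != baseline}


# Hypothetical evaluation records: (condition, predicted, expected)
records = [
    ("clear_day", "car", "car"),
    ("clear_day", "pedestrian", "pedestrian"),
    ("clear_day", "car", "car"),
    ("clear_day", "cyclist", "cyclist"),
    ("night_rain", "car", "car"),
    ("night_rain", "car", "pedestrian"),
    ("night_rain", "cyclist", "cyclist"),
    ("night_rain", "car", "cyclist"),
]

acc = accuracy_by_condition(records)
drop = degradation(acc)
print(acc)   # per-condition accuracy
print(drop)  # accuracy lost relative to the baseline condition
```

The same pattern extends to other axes named above, such as datasets or model versions, by changing what the grouping key represents.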

Evaluate full-stack systems across realistic load, environmental extremes, and operational uncertainty.

- Hardware–software integration stability

- Thermal, environmental, and vibration impact

- Stress scenarios for autonomy and mission systems

Real-World Scenario Performance

Understand behavior across user types, environments, and mission contexts.

- AV responses to rare or ambiguous road events

- Medical device outputs across demographic diversity

- Defense systems under terrain, weather, or communication degradation

Study consistency over repeated cycles, deployments, and product updates.

Fully Managed Performance Evaluation Workflow

From controlled testing to dynamic scenario evaluation, DDD manages the complete lifecycle:

Industries We Support

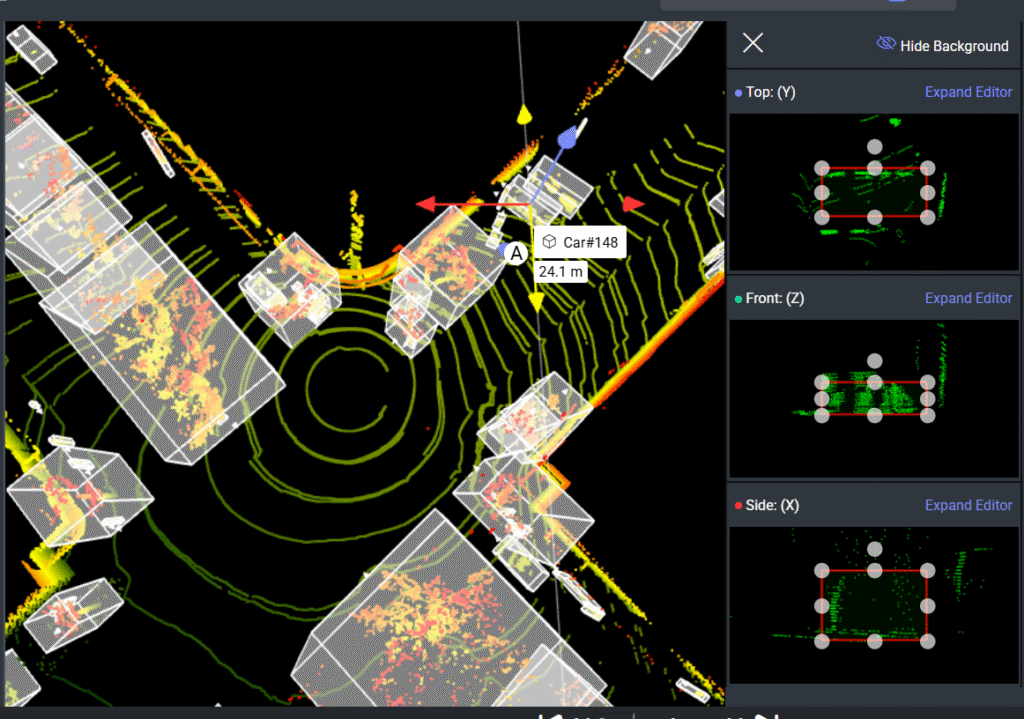

Autonomous Driving

Scenario-based evaluation, edge-case stress testing, perception/behavior benchmarking for AV and ADAS systems.

Defensetech

Performance and resilience evaluation for mission systems, autonomous platforms, control software, and ops-critical tools.

Healthcare

Algorithm and device performance assessment under clinical, demographic, and environmental variability.

Robotics

Precision, reliability, and environment-driven performance evaluation for industrial, service, and mobile robots.

What Our Clients Say

DDD’s structured evaluation revealed failure modes we hadn’t detected internally, which was critical for our ADAS launch.

Their robotics testing boosted our perception accuracy by over 20% during real-world stress scenarios.

The performance diagnostics DDD delivered helped us pass a major regulatory milestone ahead of schedule.

In defense environments, reliability matters. DDD identified performance gaps that directly improved mission safety.

Why Choose DDD?

We bring deep experience evaluating complex, safety-critical AI systems with real-world precision.

Blog

Read expert articles, insights, and industry benchmarks across physical AI.

Building Digital Twins for Autonomous Vehicles: Architecture, Workflows, and Challenges

In this blog, we will explore how digital twins are transforming the testing and validation of autonomous systems, examine...

How to Conduct Robust ODD Analysis for Autonomous Systems

This blog provides a technical guide to conducting robust ODD analysis for autonomous driving, detailing how to define, structure,...

Precision Evaluation for High-Performance Physical AI

Frequently Asked Questions

We evaluate a wide range of AI-driven systems, including computer vision models, autonomous decision-making systems, safety-critical algorithms, LLM applications, and complex multi-modal models, across both simulated and real-world environments.

We use trained domain experts to assess nuanced outputs while automated workflows run scalable, repeatable tests. This hybrid approach ensures accuracy, consistency, and cost-efficient throughput.

Yes. Our approach is fully tool-chain agnostic. We integrate seamlessly with your simulators, datasets, test harnesses, telemetry, cloud infrastructure, and CI/CD pipelines.

Automated tests often miss edge cases and context-driven failures. Our human-in-the-loop evaluators catch subtle errors, bias, safety issues, and degradation that automated metrics alone cannot detect.

We use multi-level QA, reproducible workflows, evaluator calibration, and version-controlled pipelines to ensure results are accurate, traceable, and repeatable across iterations.
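One common way to check evaluator calibration is an inter-rater agreement statistic such as Cohen's kappa, which corrects raw agreement for agreement expected by chance. The sketch below is a minimal illustration under that assumption; the source does not state which statistic DDD uses, and the labels and function name are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two evaluators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    # Fraction of items on which the two evaluators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each evaluator's label frequencies.
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        # Both evaluators used a single identical label; agreement is trivial.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical verdicts from two evaluators on the same four test cases.
evaluator_1 = ["pass", "pass", "fail", "fail"]
evaluator_2 = ["pass", "fail", "fail", "fail"]

kappa = cohens_kappa(evaluator_1, evaluator_2)
print(kappa)
```

A kappa near 1 indicates strong agreement, while a value near 0 means the evaluators agree about as often as chance would predict, which is a signal to revisit guidelines before trusting their judgments.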

Absolutely. We operate in secure, access-controlled environments aligned with industry best practices. Data handling, storage, and transfer workflows follow strict confidentiality and compliance standards.

Yes. We have experience evaluating models used in sectors such as mobility, defense, public safety, healthcare, and enterprise applications, where reliability, robustness, and auditability are essential.