Author: Umang Dayal

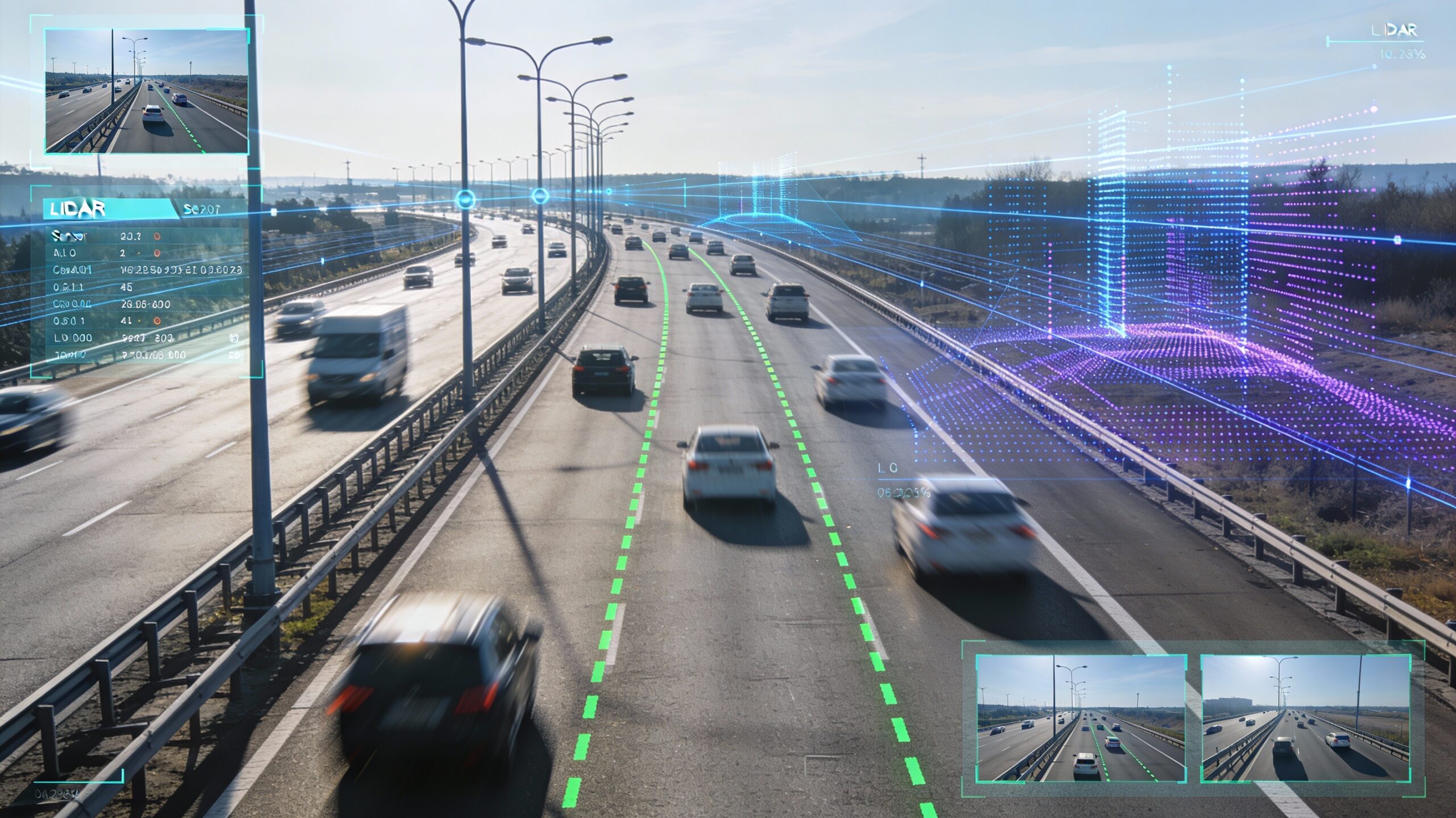

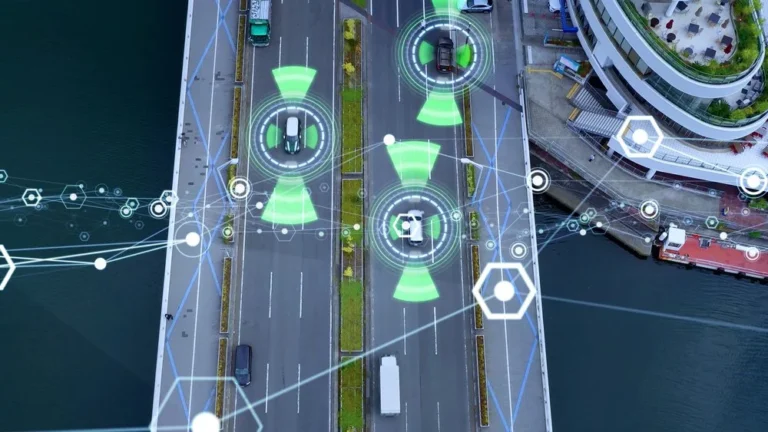

Physical AI refers to intelligent systems that perceive, reason, and act within real environments. It includes autonomous vehicles, collaborative robots, drones, defense systems, embodied assistants, and, increasingly, machines that learn from human demonstration. Unlike traditional software that processes static inputs, physical AI must interpret continuous streams of sensory data and translate them into safe, precise actions.

Video sits at the center of this transformation. Cameras capture motion, intent, spatial relationships, and environmental change. Over time, organizations have shifted from collecting isolated frames to gathering multi-camera, long-duration recordings. Video data may be abundant, but clean, structured, temporally consistent annotations are far harder to scale.

The backbone of reliable physical AI is not simply more data. It is well-annotated video data, structured in a way that mirrors how machines must interpret the world. High-quality video annotation services are not a peripheral function; they are foundational infrastructure.

What Makes Physical AI Different from Traditional Computer Vision?

Static Image AI vs. Temporal Physical AI

Traditional computer vision often focuses on individual frames. A model identifies objects within a snapshot. Performance is measured per image. While useful, this frame-based paradigm falls short when actions unfold over time.

Consider a warehouse robot picking up a package. The act of grasping is not one frame. It is a sequence: approach, align, contact, grip, lift, stabilize. Each phase carries context. If the grip slips, the failure may occur halfway through the lift, rather than at the moment of contact. A static frame does not capture intent or trajectory.

Temporal understanding demands segmentation of actions across sequences. It requires annotators to define start and end boundaries precisely. Was the grasp complete when the fingers closed or when the object left the surface? Small differences in labeling logic can alter how models learn.
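To make the boundary question concrete, the sketch below represents each action as a frame interval and measures how much two labeling conventions disagree using temporal intersection-over-union (IoU). The `Segment` class, the frame numbers, and the two conventions are hypothetical; this is one simple way to quantify boundary disagreement, not a standard schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """An action segment: `start` is the first frame, `end` is one past the last frame."""
    label: str
    start: int
    end: int

    def __post_init__(self) -> None:
        if self.end <= self.start:
            raise ValueError(f"{self.label}: end frame must come after start frame")


def temporal_iou(a: Segment, b: Segment) -> float:
    """Intersection-over-union of two segments, measured in frames."""
    overlap = max(0, min(a.end, b.end) - max(a.start, b.start))
    union = (a.end - a.start) + (b.end - b.start) - overlap
    return overlap / union


# Hypothetical conventions for where "grasp" ends:
# annotator A ends it when the fingers close, annotator B when the object leaves the surface.
grasp_a = Segment("grasp", start=120, end=150)
grasp_b = Segment("grasp", start=120, end=171)
print(f"{temporal_iou(grasp_a, grasp_b):.2f}")  # 0.59
```

Both annotators agree on when the grasp starts, yet the segments overlap by less than 60%. Writing the chosen convention into the labeling guidelines removes this source of disagreement before it reaches training data.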

Long-horizon task understanding adds another dimension. A five-minute cleaning task performed by a domestic robot contains dozens of micro-actions. The system must recognize not just objects but goals. A cluttered desk becomes organized through a chain of decisions. Labeling such sequences calls for more than object detection. It requires a structured interpretation of behavior.

The Shift to Embodied and Multi-Modal Learning

Physical AI rarely relies on cameras alone. Vehicles combine camera feeds with LiDAR and radar. Robots integrate depth sensors and joint encoders. Wearable systems may include inertial measurement units (IMUs).

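This sensor fusion means annotations must align across modalities. A bounding box in RGB imagery might correspond to a three-dimensional cuboid in LiDAR space. Temporal synchronization becomes essential. A delay of even a few milliseconds can distort training signals: at a highway speed of about 30 m/s, a 10 ms offset shifts an object roughly 30 cm between what the camera sees and what the LiDAR records.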
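One common way to pair streams is nearest-timestamp matching with a tolerance, sketched below for a camera and a LiDAR sensor. The 30 fps and 10 Hz rates and the 20 ms tolerance are illustrative assumptions; production pipelines often rely on hardware triggering or interpolation instead, and the right tolerance depends on sensor rates and vehicle speed.

```python
from bisect import bisect_left
from typing import Optional


def match_nearest(camera_ts: list[float], lidar_ts: list[float], tolerance: float) -> list[Optional[int]]:
    """For each camera timestamp, return the index of the nearest LiDAR sweep,
    or None if that sweep is more than `tolerance` seconds away.
    Both lists must be sorted in ascending order."""
    matches: list[Optional[int]] = []
    for t in camera_ts:
        i = bisect_left(lidar_ts, t)
        # Only the sweeps immediately before and after t can be nearest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(lidar_ts)]
        best = min(candidates, key=lambda j: abs(lidar_ts[j] - t), default=None)
        if best is not None and abs(lidar_ts[best] - t) <= tolerance:
            matches.append(best)
        else:
            matches.append(None)
    return matches


camera_ts = [0.0, 0.0333, 0.0667, 0.1000]  # 30 fps
lidar_ts = [0.002, 0.104]                  # 10 Hz
print(match_nearest(camera_ts, lidar_ts, tolerance=0.020))  # [0, None, None, 1]
```

Frames that return `None` have no LiDAR sweep close enough to trust, so a 2D label on those frames should not be paired with a 3D label without interpolation.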

Language integration complicates matters further. Many systems now learn from natural language instructions. A robot may be told, “Pick up the red mug next to the laptop and place it on the shelf.” For training, the video must be aligned with textual descriptions. The phrase “next to” implies spatial proximity, while the action “place” requires temporal grounding.

Embodied learning also includes demonstration-based training. Human operators perform tasks while cameras record the process. The dataset is not just visual. It is a representation of skill. Capturing this skill accurately demands hierarchical labeling. A single demonstration may contain task-level intent, subtasks, and atomic actions.

Real-World Constraints

In lab conditions, video appears clean. Real deployments are messier: motion blur during rapid turns, occlusions when objects overlap, glare from reflective surfaces, and shadows that shift throughout the day. Physical AI must operate despite these imperfections.

Safety-critical environments raise the stakes. An autonomous vehicle cannot misclassify a pedestrian partially hidden behind a parked van. A collaborative robot must detect a human hand entering its workspace instantly. Rare edge cases, which might appear only once in thousands of hours of footage, matter disproportionately.

These realities justify specialized annotation services. Labeling physical AI data is not simply about drawing shapes. It is about encoding time, intent, safety context, and multi-sensor coherence.

Why Video Annotation Is Critical for Physical AI

Action-Centric Labeling

Physical AI systems learn through patterns of action. Breaking down tasks into atomic components such as grasp, push, rotate, lift, and release allows models to generalize across scenarios. Temporal segmentation is central here. Annotators define the precise frame where an action begins and ends. If the “lift” phase is labeled inconsistently across demonstrations, models may struggle to predict stable motion.

Distinguishing aborted actions from completed ones helps systems learn to anticipate outcomes. Without consistent action-centric labeling, models may misinterpret motion sequences, leading to hesitation or overconfidence in deployment.

Object Tracking Across Frames

Tracking objects over time requires persistent identifiers. A pedestrian in frame one must remain the same entity in frame one hundred, even if partially occluded. Identity consistency is not trivial. In crowded scenes, similar objects overlap. Tracking errors can introduce identity switches that degrade training quality.
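A validation workflow can catch identity switches automatically. The sketch below counts them for one physical object, given the track ID the annotation assigns to it in each frame; it loosely mirrors the ID-switch count used in multi-object tracking benchmarks. How frames are matched to physical objects (for example, against a reviewer's reference track) is assumed and left out.

```python
from typing import Optional


def count_id_switches(assigned_ids: list[Optional[int]]) -> int:
    """Count identity switches for one physical object across a clip.

    `assigned_ids[f]` is the track ID the annotation gives the object in frame f,
    or None when the object is fully occluded or unlabeled in that frame."""
    switches = 0
    last_id: Optional[int] = None
    for track_id in assigned_ids:
        if track_id is None:
            continue  # an occlusion gap is not a switch by itself
        if last_id is not None and track_id != last_id:
            switches += 1
        last_id = track_id
    return switches


# A pedestrian labeled 7, hidden behind a van for two frames, relabeled 12, then back to 7.
print(count_id_switches([7, 7, None, None, 12, 12, 7]))  # 2
```

Reappearing after an occlusion under the same ID costs nothing, which matches the requirement that the pedestrian in frame one remains the same entity in frame one hundred.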

In warehouse robotics, tracking packages as they move along conveyors is essential for inventory accuracy. In autonomous driving, maintaining identity across intersections affects trajectory prediction. Annotation services must enforce rigorous tracking standards, often supported by validation workflows that detect drift.

Spatio-Temporal Segmentation

Pixel-level segmentation extended across time provides a granular understanding of dynamic environments. For manipulation robotics, segmenting the precise contour of an object informs grasp planning. For vehicles, segmenting drivable areas frame by frame supports safe navigation. Unlike single-frame segmentation, spatio-temporal segmentation must maintain shape continuity. Slight inconsistencies in object boundaries can propagate errors across sequences.
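One simple automated continuity check compares each frame's mask with the previous one and flags sudden drops in overlap. Masks are represented here as sets of pixel coordinates to keep the sketch dependency-free (a real pipeline would likely use arrays), and the 0.8 threshold is a hypothetical value to tune per object class and frame rate. A flag means "review this", not "this is wrong": fast genuine motion can also lower the overlap.

```python
def mask_iou(a: set[tuple[int, int]], b: set[tuple[int, int]]) -> float:
    """Intersection-over-union of two binary masks given as sets of (row, col) pixels."""
    if not a and not b:
        return 1.0  # two empty masks agree trivially
    return len(a & b) / len(a | b)


def flag_flicker(masks: list[set[tuple[int, int]]], threshold: float = 0.8) -> list[int]:
    """Indices of frames whose mask overlaps the previous frame's mask less than `threshold`."""
    return [f for f in range(1, len(masks)) if mask_iou(masks[f - 1], masks[f]) < threshold]


def square(row: int, col: int, size: int) -> set[tuple[int, int]]:
    """Toy mask: a size x size square with its top-left corner at (row, col)."""
    return {(r, c) for r in range(row, row + size) for c in range(col, col + size)}


# An object drifting one pixel per frame, with a 9-pixel boundary jump at frame 2.
masks = [square(10, 10, 10), square(10, 11, 10), square(10, 20, 10), square(10, 21, 10)]
print(flag_flicker(masks))  # [2]
```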

Multi-View and Egocentric Annotation

Many datasets now combine first-person and third-person perspectives. A wearable camera captures hand movements from the operator’s viewpoint while external cameras provide context. Synchronizing these views requires careful alignment. Annotators must ensure that action labels correspond across angles. A grasp visible in the egocentric view should align with object movement in the third-person view.

Human-robot interaction labeling introduces further complexity. Detecting gestures, proximity zones, and cooperative actions demands awareness of both participants.

Long-Horizon Demonstration Annotation

Physical tasks often extend beyond a few seconds. Cleaning a room, assembling a product, or navigating urban traffic can span minutes. Breaking down long sequences into hierarchical labels helps structure learning. At the top level, the task might be “assemble component.” Beneath it lie subtasks such as “align bracket” or “tighten screw.” At the lowest level are atomic actions.
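The task, subtask, and atomic-action hierarchy maps naturally onto a tree of time intervals. A useful invariant, assumed here rather than taken from any particular tool, is that each child lies inside its parent's span and siblings do not overlap. The sketch below checks both; the `Node` structure and labels are hypothetical, and schemas that allow concurrent actions (such as bimanual manipulation) would relax the sibling rule.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A labeled interval; `end` is one past the last frame."""
    label: str
    start: int
    end: int
    children: list["Node"] = field(default_factory=list)


def validate(node: Node, path: str = "") -> list[str]:
    """Report children outside their parent's span and overlapping siblings, recursively."""
    here = f"{path}/{node.label}"
    problems = []
    previous_end = None
    for child in sorted(node.children, key=lambda c: c.start):
        if child.start < node.start or child.end > node.end:
            problems.append(f"{here}/{child.label} lies outside its parent span")
        if previous_end is not None and child.start < previous_end:
            problems.append(f"{here}/{child.label} overlaps the previous sibling")
        previous_end = child.end if previous_end is None else max(previous_end, child.end)
        problems.extend(validate(child, here))
    return problems


task = Node("assemble component", 0, 900, [
    Node("align bracket", 0, 300, [
        Node("grasp", 0, 80),
        Node("rotate", 80, 200),
        Node("release", 190, 300),  # starts before "rotate" ends
    ]),
    Node("tighten screw", 300, 950),  # runs past the end of the task
])
for problem in validate(task):
    print(problem)
```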

Sequence-level metadata captures contextual factors such as environment type, lighting condition, or success outcome. This layered annotation enables models to reason across time rather than react to isolated frames.

Core Annotation Types Required for Physical AI Systems

Different applications demand distinct annotation strategies. Below are common types used in physical AI projects.

Bounding Boxes with Tracking IDs

Bounding boxes remain foundational, particularly for object detection and tracking. When paired with persistent tracking IDs, they enable models to follow entities across time. In autonomous vehicles, bounding boxes identify cars, pedestrians, cyclists, traffic signs, and more. In warehouse robotics, boxes track packages and pallets as they move between zones. Consistency in box placement and identity assignment is critical. Slight misalignment across frames may seem minor, but it can accumulate into trajectory prediction errors.
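Jitter in box placement can be detected from the trajectory itself. For an object moving smoothly, the frame-to-frame change in velocity of the box center (its second difference) stays near zero, so spikes point to misplaced boxes. The 4-pixel threshold below is a hypothetical starting point; real thresholds depend on object speed, frame rate, and resolution.

```python
import math


def box_center(box: tuple[float, float, float, float]) -> tuple[float, float]:
    """Center of an (x1, y1, x2, y2) box in pixels."""
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2, (y1 + y2) / 2


def jitter_frames(
    boxes: list[tuple[float, float, float, float]], max_accel: float = 4.0
) -> list[int]:
    """Frames where the center's second difference exceeds `max_accel` pixels per frame squared."""
    centers = [box_center(b) for b in boxes]
    flagged = []
    for f in range(1, len(centers) - 1):
        ax = centers[f - 1][0] - 2 * centers[f][0] + centers[f + 1][0]
        ay = centers[f - 1][1] - 2 * centers[f][1] + centers[f + 1][1]
        if math.hypot(ax, ay) > max_accel:
            flagged.append(f)
    return flagged


# A car moving 5 px per frame, with the box in frame 3 placed 8 px too far right.
boxes = [(x, 50, x + 40, 80) for x in (100, 105, 110, 123, 120, 125)]
print(jitter_frames(boxes))  # [2, 3, 4]
```

A single misplaced box produces a cluster of flags centered on it, with the largest spike at the faulty frame, which tells the reviewer where to look.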

Polygon and Pixel-Level Segmentation

Segmentation provides fine-grained detail. Instead of enclosing an object in a rectangle, annotators outline its exact shape. Manipulation robots benefit from precise segmentation of tools and objects, especially when grasping irregular shapes. Safety-critical systems use segmentation to define boundaries of drivable surfaces or restricted zones. Extending segmentation across time ensures continuity and reduces flickering artifacts in training data.

Keypoint and Pose Estimation in 2D and 3D

Keypoint annotation identifies joints or landmarks on humans and objects. In human-robot collaboration, tracking hand, elbow, and shoulder positions helps predict motion intent. Three-dimensional pose estimation incorporates depth information. This becomes important when systems must assess reachability or collision risk. Pose labels must remain stable across frames. Small shifts in keypoint placement can introduce noise into motion models.
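For 3D pose labels, one physically grounded stability check uses the fact that limb lengths do not change. The sketch below measures the elbow-to-wrist distance in every frame and flags frames that deviate from the sequence median by more than 10%; the tolerance and the coordinates (in meters) are illustrative assumptions. The median is used because it is not dragged toward the outlier it is trying to catch.

```python
import math
from statistics import median

Point3D = tuple[float, float, float]


def bone_length_outliers(
    elbow: list[Point3D], wrist: list[Point3D], rel_tol: float = 0.1
) -> list[int]:
    """Frames whose elbow-to-wrist distance deviates from the median by more than rel_tol."""
    lengths = [math.dist(e, w) for e, w in zip(elbow, wrist)]
    reference = median(lengths)
    return [f for f, length in enumerate(lengths) if abs(length - reference) > rel_tol * reference]


elbow = [(0.0, 0.0, 1.0)] * 4
wrist = [(0.26, 0.0, 1.0), (0.0, 0.26, 1.0), (0.18, 0.18, 1.0), (0.40, 0.0, 1.0)]
print(bone_length_outliers(elbow, wrist))  # [3]
```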

Action and Event Tagging in Time

Temporal tags mark when specific events occur. A vehicle stops at a crosswalk. A robot successfully inserts a component. A drone detects an anomaly.

Precise event boundaries matter. Early or late labeling skews training signals. For planning systems, recognizing event order is just as important as recognizing the events themselves.
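Event timing can be audited against a reference, such as an expert-adjudicated copy of the same clip. The sketch below reports events labeled more than a tolerance away from the reference and checks whether the labeled events occur in the same order. The event names, frame numbers, and the 3-frame tolerance are hypothetical.

```python
def check_events(
    labeled: dict[str, int], reference: dict[str, int], tolerance: int = 3
) -> tuple[dict[str, int], bool]:
    """Return (offsets beyond tolerance, whether the labeled order matches the reference order).

    Offsets are labeled frame minus reference frame: positive means labeled late.
    An event missing from `labeled` also makes the order check fail."""
    offsets = {
        name: labeled[name] - frame
        for name, frame in reference.items()
        if name in labeled and abs(labeled[name] - frame) > tolerance
    }
    reference_order = sorted(reference, key=reference.get)
    labeled_order = sorted((name for name in labeled if name in reference), key=labeled.get)
    return offsets, labeled_order == reference_order


reference = {"approach_crosswalk": 100, "full_stop": 160, "resume": 250}
labeled = {"approach_crosswalk": 101, "full_stop": 170, "resume": 248}
print(check_events(labeled, reference))  # ({'full_stop': 10}, True)
```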

Sensor Fusion Annotation

Physical AI increasingly relies on multi-sensor inputs. Annotators may synchronize camera footage with LiDAR point clouds, radar signals, or depth maps. Three-dimensional cuboids in LiDAR data complement two-dimensional boxes in video. Alignment across modalities ensures that spatial reasoning models learn accurate geometry.
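A cross-modal check can verify that a LiDAR cuboid and the matching 2D box describe the same object: project the cuboid's corners into the image with a pinhole camera model and compare the enclosing rectangle with the annotated box. The sketch assumes the cuboid has already been transformed into camera coordinates (x right, y down, z forward) using the sensor extrinsics, ignores lens distortion, and uses made-up intrinsics and boxes.

```python
import math

Vec3 = tuple[float, float, float]
Box2D = tuple[float, float, float, float]  # (u1, v1, u2, v2) in pixels


def cuboid_corners(center: Vec3, size: Vec3, yaw: float) -> list[Vec3]:
    """Eight corners of a cuboid in camera coordinates (x right, y down, z forward).

    `size` is (length, height, width); `yaw` rotates the cuboid about the vertical y axis."""
    cx, cy, cz = center
    length, height, width = size
    c, s = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (-length / 2, length / 2):
        for dy in (-height / 2, height / 2):
            for dz in (-width / 2, width / 2):
                # Rotate the horizontal offset (dx, dz) about the y axis.
                corners.append((cx + c * dx + s * dz, cy + dy, cz - s * dx + c * dz))
    return corners


def project_to_box(corners: list[Vec3], fx: float, fy: float, px: float, py: float) -> Box2D:
    """Pinhole projection; assumes all corners are in front of the camera (z > 0)."""
    us = [fx * x / z + px for x, _, z in corners]
    vs = [fy * y / z + py for _, y, z in corners]
    return min(us), min(vs), max(us), max(vs)


def box_iou(a: Box2D, b: Box2D) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    def area(r: Box2D) -> float:
        return (r[2] - r[0]) * (r[3] - r[1])

    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    return inter / (area(a) + area(b) - inter)


# Hypothetical car crossing 10 m ahead, and hypothetical intrinsics for a 1920x1080 camera.
corners = cuboid_corners(center=(0.0, 1.0, 10.0), size=(4.0, 1.5, 1.8), yaw=0.0)
projected = project_to_box(corners, fx=1000.0, fy=1000.0, px=960.0, py=540.0)
annotated = (745.0, 565.0, 1175.0, 730.0)
print(f"{box_iou(projected, annotated):.2f}")  # 0.95
```

A low IoU between the projected cuboid and the 2D box points to a labeling error, a calibration problem, or a timestamp mismatch between the two sensors.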

Challenges in Video Annotation for Physical AI

Video annotation at this level is complex and often underestimated.

Temporal Consistency at Scale

Maintaining label continuity across thousands of frames is demanding. Drift can occur when object boundaries shift subtly. Correcting drift requires systematic review. Automated checks can flag inconsistencies, but human oversight remains necessary. Even small temporal misalignments can affect long-horizon learning.

Long-Horizon Task Decomposition

Defining taxonomies for complex tasks requires domain expertise. Overly granular labels may overwhelm annotators. Labels that are too broad may obscure learning signals. Striking the right balance involves iteration. Teams often refine hierarchies as models evolve.

Edge Case Identification

Rare scenarios are often the most critical. A pedestrian darting into traffic. A tool slipping during assembly. Edge cases may represent a small fraction of the data but carry outsized safety implications. Systematically identifying and annotating such cases requires targeted sampling strategies.

Multi-Camera and Multi-Sensor Alignment

Synchronizing multiple streams demands precise timestamp alignment. Small discrepancies can distort perception. Cross-modal validation helps ensure consistency between visual and spatial labels.

Annotation Cost Versus Quality Trade-Offs

Video annotation is resource-intensive. Frame sampling can reduce workload, but it risks missing subtle transitions. Active learning loops, where models suggest uncertain frames for review, can improve efficiency. Still, cost and quality must be balanced thoughtfully.
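A common active-learning heuristic is uncertainty sampling: send annotators the frames where the model's predicted class distribution has the highest entropy. The sketch below assumes per-frame class probabilities are already available from some model; the budget and probabilities are illustrative. Entropy is only one option; margin-based or model-disagreement scores are common alternatives.

```python
import math


def entropy(probs: list[float]) -> float:
    """Shannon entropy in nats; higher means the model is less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_review(frame_probs: list[list[float]], budget: int) -> list[int]:
    """Indices of the `budget` most uncertain frames, returned in temporal order."""
    ranked = sorted(range(len(frame_probs)), key=lambda f: entropy(frame_probs[f]), reverse=True)
    return sorted(ranked[:budget])


frame_probs = [
    [0.98, 0.01, 0.01],
    [0.40, 0.35, 0.25],
    [0.90, 0.05, 0.05],
    [0.34, 0.33, 0.33],
    [0.60, 0.30, 0.10],
]
print(select_for_review(frame_probs, budget=2))  # [1, 3]
```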

Human in the Loop and AI-Assisted Annotation Pipelines

Purely manual annotation at scale is unsustainable. At the same time, fully automated labeling remains imperfect.

Foundation Model Assisted Pre-Labeling

Automated segmentation and tracking tools can generate initial labels. Annotators then correct and refine them. This approach accelerates throughput while preserving accuracy. It also allows teams to focus on complex cases rather than routine labeling.
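Pre-labels typically arrive with a confidence score, and one way to focus human effort is to route them by that score. The `PreLabel` structure, thresholds, and queue names below are hypothetical. Even the high-confidence queue still gets human spot checks, and annotators must still add objects the model missed entirely.

```python
from dataclasses import dataclass


@dataclass
class PreLabel:
    frame: int
    track_id: int
    label: str
    score: float  # model confidence in [0, 1]


def route(
    prelabels: list[PreLabel], accept_at: float = 0.9, drop_below: float = 0.3
) -> dict[str, list[PreLabel]]:
    """Split model proposals into review queues by confidence."""
    queues: dict[str, list[PreLabel]] = {"spot_check": [], "correct": [], "drop": []}
    for p in prelabels:
        if p.score >= accept_at:
            queues["spot_check"].append(p)  # likely right: sample-based human review
        elif p.score >= drop_below:
            queues["correct"].append(p)  # plausible: an annotator adjusts or rejects it
        else:
            queues["drop"].append(p)  # too noisy to be worth correcting
    return queues


proposals = [
    PreLabel(0, 1, "pallet", 0.97),
    PreLabel(0, 2, "package", 0.62),
    PreLabel(1, 3, "package", 0.15),
    PreLabel(1, 1, "pallet", 0.91),
]
print({name: len(items) for name, items in route(proposals).items()})
# {'spot_check': 2, 'correct': 1, 'drop': 1}
```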

Expert Review Layers

Tiered quality assurance systems add oversight. Initial annotators produce labels. Senior reviewers validate them. Domain specialists resolve ambiguous scenarios. In robotics projects, familiarity with task logic improves annotation reliability. Understanding how a robot moves or why a vehicle hesitates can inform labeling decisions.

Iterative Model Feedback Loops

Annotation is not a one-time process. Models trained on labeled data generate predictions. Errors are analyzed. Additional data is annotated to address weaknesses. This feedback loop gradually improves both the dataset and the model's performance. It reflects an ongoing partnership between annotation teams and AI engineers.

How DDD Can Help

Digital Divide Data works closely with clients to define hierarchical action schemas that reflect real-world tasks. Instead of applying generic labels, teams align annotations with the intended deployment environment. For example, in a robotics assembly project, DDD may structure labels around specific subtask sequences relevant to that assembly line.

Multi-sensor support is integrated into workflows. Annotators are trained to align video frames with spatial data streams. Where AI-assisted tools are available, DDD incorporates them carefully, ensuring human review remains central. Quality assurance operates across multiple layers. Sampling strategies, inter-annotator agreement checks, and domain-focused reviews help maintain temporal consistency.

Conclusion

Physical AI systems do not learn from abstract ideas. They learn from labeled experience. Every grasp, every lane change, every coordinated movement between human and machine is encoded in annotated video. Model intelligence is bounded by annotation quality. Temporal reasoning, contextual awareness, and safety all depend on precise labels.

As organizations push toward more capable robots, smarter vehicles, and adaptable embodied agents, structured video annotation pipelines become strategic infrastructure. Those who invest thoughtfully in this foundation are likely to move faster and deploy more confidently.

The future of intelligent machines may sound like science fiction. In practice, it rests on careful, detailed work done frame by frame.

Partner with Digital Divide Data to build high-precision video annotation pipelines that power reliable, real-world Physical AI systems.

Frequently Asked Questions

How much video data is typically required to train a Physical AI system?

Requirements vary by application. A warehouse manipulation system might rely on thousands of demonstrations, while an autonomous driving stack may require millions of frames across diverse environments. Data diversity often matters more than sheer volume.

How long does it take to annotate one hour of complex robotic demonstration footage?

Depending on annotation depth, one hour of footage can take several hours or even days to label accurately. Temporal segmentation and hierarchical labeling significantly increase effort compared to simple bounding boxes.

Can synthetic data reduce video annotation needs?

Synthetic data can supplement real-world footage, especially for rare scenarios. However, models deployed in physical environments typically benefit from real-world annotated sequences to capture unpredictable variation.

What metrics indicate high-quality video annotation?

Inter-annotator agreement, temporal boundary accuracy, identity consistency in tracking, and cross-modal alignment checks are strong indicators of quality.
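Inter-annotator agreement on per-frame action labels is often summarized with Cohen's kappa, which discounts the agreement two annotators would reach by chance given how often each uses each label. The sketch below assumes two annotators labeled the same frames; the label sequences are illustrative. Kappa says nothing about boundary timing or track identity, which is why the other metrics in this answer are measured alongside it.

```python
from collections import Counter
from collections.abc import Sequence


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Cohen's kappa for two annotators' labels over the same frames."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement: probability both pick label k independently, summed over labels.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a.keys() | counts_b.keys()) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single, identical label throughout
    return (observed - expected) / (1 - expected)


annotator_a = ["reach"] * 4 + ["grasp"] * 4 + ["lift"] * 2
annotator_b = ["reach"] * 3 + ["grasp"] * 5 + ["lift"] * 2
print(round(cohens_kappa(annotator_a, annotator_b), 3))  # 0.844
```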

How often should annotation taxonomies be updated?

As models evolve and deployment conditions change, taxonomies may require refinement. Periodic review aligned with model performance metrics helps ensure continued relevance.