By Umang Dayal

July 28, 2025

Keypoint detection has become a cornerstone of numerous computer vision applications, powering everything from pose estimation in sports analytics to gesture recognition in augmented reality and fine motor control in robotics.

As the field has evolved, so too has the complexity of the problems it aims to solve. Developers and researchers are increasingly faced with a critical decision: whether to rely on 2D or 3D keypoint detection models. While both approaches aim to identify salient points on objects or human bodies, they differ fundamentally in the type of spatial information they capture and the contexts in which they excel.

The challenge lies in choosing the right approach for the right application. While 3D detection provides richer data, it comes at the cost of increased computational demand, sensor requirements, and annotation complexity. Conversely, 2D methods are more lightweight and easier to deploy but may fall short when spatial reasoning or depth understanding is crucial. As new architectures, datasets, and fusion techniques emerge, the line between 2D and 3D capabilities is beginning to blur, prompting a reevaluation of how each should be used in modern computer vision pipelines.

This post explores the key differences between 2D and 3D keypoint detection, covering their advantages, limitations, and practical applications.

What is Keypoint Detection?

Keypoint detection is a foundational task in computer vision where specific, semantically meaningful points on an object or human body are identified and localized. These keypoints often represent joints, landmarks, or structural features that are critical for understanding shape, motion, or orientation. Depending on the application and data requirements, keypoint detection can be performed in either two or three dimensions, each providing different levels of spatial insight.

2D keypoint detection operates in the image plane, locating points using pixel-based (x, y) coordinates. For instance, in human pose estimation, this involves identifying the positions of the nose, elbows, and knees within a single RGB image. These methods have been widely adopted in applications such as facial recognition, AR filters, animation rigging, and activity recognition.
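To make the pixel-coordinate representation concrete, here is a minimal sketch of how 2D keypoints are often stored. It assumes a COCO-style flat layout of (x, y, visibility) triplets; the `Keypoint2D` type, the `parse_flat_keypoints` helper, and the joint names are hypothetical and chosen only for illustration.

```python
from typing import List, NamedTuple, Sequence


class Keypoint2D(NamedTuple):
    """One labeled point in the image plane (pixel coordinates)."""
    name: str
    x: float
    y: float
    visibility: int  # COCO-style flag: 0 = not labeled, 1 = labeled but occluded, 2 = visible


def parse_flat_keypoints(flat: Sequence[float], names: Sequence[str]) -> List[Keypoint2D]:
    """Turn a flat [x1, y1, v1, x2, y2, v2, ...] list into named keypoints."""
    if len(flat) != 3 * len(names):
        raise ValueError("expected exactly three values per keypoint name")
    return [
        Keypoint2D(name, float(flat[3 * i]), float(flat[3 * i + 1]), int(flat[3 * i + 2]))
        for i, name in enumerate(names)
    ]


if __name__ == "__main__":
    # Hypothetical annotation: a visible nose and an unlabeled left elbow.
    for kp in parse_flat_keypoints([100, 50, 2, 0, 0, 0], ["nose", "left_elbow"]):
        print(kp)
```

Note that nothing in this structure records how far a point is from the camera, which is the gap 3D detection fills.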

3D keypoint detection, in contrast, extends this task into three dimensions by estimating depth alongside image coordinates to yield (x, y, z) positions. This is essential where the true physical orientation, motion trajectory, or 3D structure of an object must be understood. Unlike 2D detection, which can be performed with standard cameras, 3D keypoint detection often requires additional input sources such as depth sensors, multi-view images, LiDAR, or stereo cameras. It plays a vital role in robotic grasp planning, biomechanics, autonomous vehicle perception, and immersive virtual or augmented reality systems.
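One common way to obtain an (x, y, z) position when a depth sensor is available is to back-project a 2D keypoint using its measured depth. The sketch below assumes an ideal pinhole camera with no lens distortion; the `Intrinsics` type, the `backproject` function, and the numeric values are illustrative assumptions, not part of any particular library.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera parameters, all in pixels (hypothetical values in the demo)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def backproject(u: float, v: float, depth: float, k: Intrinsics) -> Tuple[float, float, float]:
    """Map pixel (u, v) with a depth in metres to camera-frame (x, y, z) in metres."""
    if depth <= 0:
        raise ValueError("depth must be positive")
    x = (u - k.cx) * depth / k.fx
    y = (v - k.cy) * depth / k.fy
    return (x, y, depth)


if __name__ == "__main__":
    camera = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
    # A keypoint detected 100 px right of the image centre, measured 2 m away.
    print(backproject(420.0, 240.0, 2.0, camera))
```

Real pipelines usually also correct for lens distortion and transform the result from the camera frame into a world or robot frame.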

2D Keypoint Detection

2D keypoint detection has long been the entry point for understanding visual structure in computer vision tasks. By detecting points of interest in an image’s x and y coordinates, it offers a fast and lightweight approach to modeling human poses, object parts, or gestures within a flat projection of the world. Its relative simplicity, combined with a mature ecosystem of datasets and pre-trained models, has made it widely adopted in both academic and production environments.

Advantages of 2D Keypoint Detection

One of the primary advantages of 2D keypoint detection is its computational efficiency. Lightweight models such as BlazePose are designed to run in real time on resource-constrained platforms such as smartphones and embedded devices, while heavier models such as OpenPose and HRNet deliver high accuracy but typically need more capable hardware to approach real-time rates. This has enabled the proliferation of 2D keypoint systems in applications like fitness coaching apps, social media AR filters, and low-latency gesture recognition. The availability of extensive annotated datasets such as COCO, MPII, and AI Challenger further accelerates training and benchmarking.

Another strength lies in its accessibility. 2D detection typically requires only monocular RGB images, making it deployable with basic camera hardware. Developers can implement and scale 2D pose estimation systems quickly, with little concern for calibration, sensor fusion, or geometric reconstruction. This makes 2D keypoint detection particularly suitable for commercial applications that prioritize responsiveness, ease of deployment, and broad compatibility.

Limitations of 2D Keypoint Detection

However, the 2D approach is not without its constraints. It lacks any understanding of depth, which can lead to significant ambiguity in scenes with occlusion, unusual angles, or mirrored poses. For instance, without depth cues, it may be impossible to determine whether a hand is reaching forward or backward, or whether one leg is in front of the other. This limitation reduces the robustness of 2D models in tasks that demand precise spatial interpretation.
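The depth ambiguity can be illustrated with a few lines of projection arithmetic. The sketch below assumes an ideal pinhole camera; the `project` function, the intrinsics, and the two hand positions are hypothetical values picked so that both points lie on the same camera ray.

```python
from typing import Tuple

Point3D = Tuple[float, float, float]


def project(point: Point3D, fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Project a camera-frame 3D point (metres) to pixel coordinates (pinhole model)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical intrinsics in pixels.
FX, FY, CX, CY = 500.0, 500.0, 320.0, 240.0

# Two different hand positions: one 1 m from the camera, one 2 m away.
near_hand: Point3D = (0.2, 0.1, 1.0)
far_hand: Point3D = (0.4, 0.2, 2.0)

if __name__ == "__main__":
    # Both points land on (approximately) the same pixel, so a 2D detector
    # cannot tell them apart from image coordinates alone.
    print(project(near_hand, FX, FY, CX, CY))
    print(project(far_hand, FX, FY, CX, CY))
```

Any point along that ray produces the same pixel, which is why a 2D model needs extra cues (learned priors, a second view, or a depth sensor) to decide whether the hand is reaching forward or backward.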

Moreover, 2D keypoint detection is inherently tied to the viewpoint of the camera. A pose that appears distinct in three-dimensional space may be indistinguishable in 2D from another, resulting in missed or incorrect inferences. As a result, while 2D detection is highly effective for many consumer-grade and real-time tasks, it may not suffice for applications where depth, orientation, and occlusion reasoning are critical.

3D Keypoint Detection

3D keypoint detection builds upon the foundation of 2D localization by adding the depth dimension, offering a more complete and precise understanding of an object’s or human body’s position in space. Instead of locating points only on the image plane, 3D methods estimate the spatial coordinates (x, y, z), enabling richer geometric interpretation and spatial reasoning. This capability is indispensable in domains where orientation, depth, and motion trajectories must be accurately captured and acted upon.

Advantages of 3D Keypoint Detection

One of the key advantages of 3D keypoint detection is its robustness in handling occlusions and viewpoint variations. Because 3D models can infer spatial relationships between keypoints, they are better equipped to reason about body parts or object components that are not fully visible. This makes 3D detection more reliable in crowded scenes, multi-person settings, or complex motions, scenarios that frequently cause ambiguity or failure in 2D systems.

The added depth component is also crucial for applications that depend on physical interaction or navigation. In robotics, for instance, understanding the exact position of a joint or grasp point in three-dimensional space allows for precise movement planning and object manipulation. In healthcare, 3D keypoints enable fine-grained gait analysis or postural assessment. For immersive experiences in AR and VR, 3D detection ensures consistent spatial anchoring of digital elements to the real world, dramatically improving realism and usability.

Limitations of 3D Keypoint Detection

3D keypoint detection typically requires more complex input data, such as depth maps, multi-view images, or 3D point clouds. Collecting and processing this data often demands additional hardware like stereo cameras, LiDAR, or RGB-D sensors. Moreover, training accurate 3D models can be resource-intensive, both in terms of computation and data annotation. Labeled 3D datasets are far less abundant than their 2D counterparts, and generating ground truth often involves motion capture systems or synthetic environments, increasing development time and expense.

Another limitation is inference speed. Compared to 2D models, 3D detection networks are generally larger and slower, which can hinder real-time deployment unless heavily optimized. Even with recent progress in model efficiency and sensor fusion techniques, achieving high-performance 3D keypoint detection at scale remains a technical challenge.

Despite these constraints, the importance of 3D keypoint detection continues to grow as applications demand more sophisticated spatial understanding. Innovations such as zero-shot 3D localization, self-supervised learning, and back-projection from 2D features are helping to bridge the gap between depth-aware accuracy and practical deployment feasibility. In contexts where precision, robustness, and depth-awareness are critical, 3D keypoint detection is not just advantageous; it is essential.

Real-World Use Cases of 2D vs 3D Keypoint Detection

Selecting between 2D and 3D keypoint detection is rarely a matter of technical preference; it’s a strategic decision shaped by the specific demands of the application. Each approach carries strengths and compromises that directly impact performance, user experience, and system complexity. Below are practical scenarios that illustrate when and why each method is more appropriate.

Use 2D Keypoints When:

Real-time feedback is crucial

2D keypoint detection is the preferred choice for applications where low latency is critical. Augmented reality filters on social media platforms, virtual try-ons, and interactive fitness applications rely on near-instantaneous pose estimation to provide smooth and responsive experiences. The lightweight nature of 2D models ensures fast inference, even on mobile processors.

Hardware is constrained

In embedded systems, smartphones, or edge devices with limited compute power and sensor input, 2D models offer a practical solution. Because they operate on single RGB images, they avoid the complexity and cost of stereo cameras or depth sensors. This makes them ideal for large-scale deployment where accessibility and scalability matter more than full spatial understanding.

Depth is not essential

For tasks like 2D activity recognition, simple joint tracking, animation rigging, or gesture classification, depth information is often unnecessary. In these contexts, 2D keypoints deliver sufficient accuracy without the overhead of 3D modeling. The majority of consumer-facing pose estimation systems fall into this category.

Use 3D Keypoints When:

Precision and spatial reasoning are essential

In domains like surgical robotics, autonomous manipulation, or industrial automation, even minor inaccuracies in joint localization can have serious consequences. 3D keypoint detection provides the spatial granularity needed for reliable movement planning, tool control, and interaction with real-world objects.

Orientation and depth are critical

Applications involving human-robot interaction, sports biomechanics, or AR/VR environments depend on understanding how the body or object is oriented in space. For example, distinguishing between a forward-leaning posture and a backward one may be impossible with 2D data alone. 3D keypoints resolve such ambiguity by capturing depth and orientation directly.

Scenes involve occlusion or multiple viewpoints

Multi-person scenes, complex body motions, and partially occluded views often pose significant challenges to 2D models. In contrast, 3D detection systems can infer missing or hidden joints based on learned spatial relationships, providing a more robust estimate. This is especially valuable in surveillance, motion capture, or immersive media, where visibility cannot always be guaranteed.

Ultimately, the decision hinges on a careful assessment of application requirements, hardware constraints, latency tolerance, and desired accuracy. While 2D keypoint detection excels in speed and simplicity, 3D methods offer deeper insight and robustness, making them indispensable in use cases where spatial fidelity truly matters.

Technical Comparison: 2D vs 3D Keypoint Detection

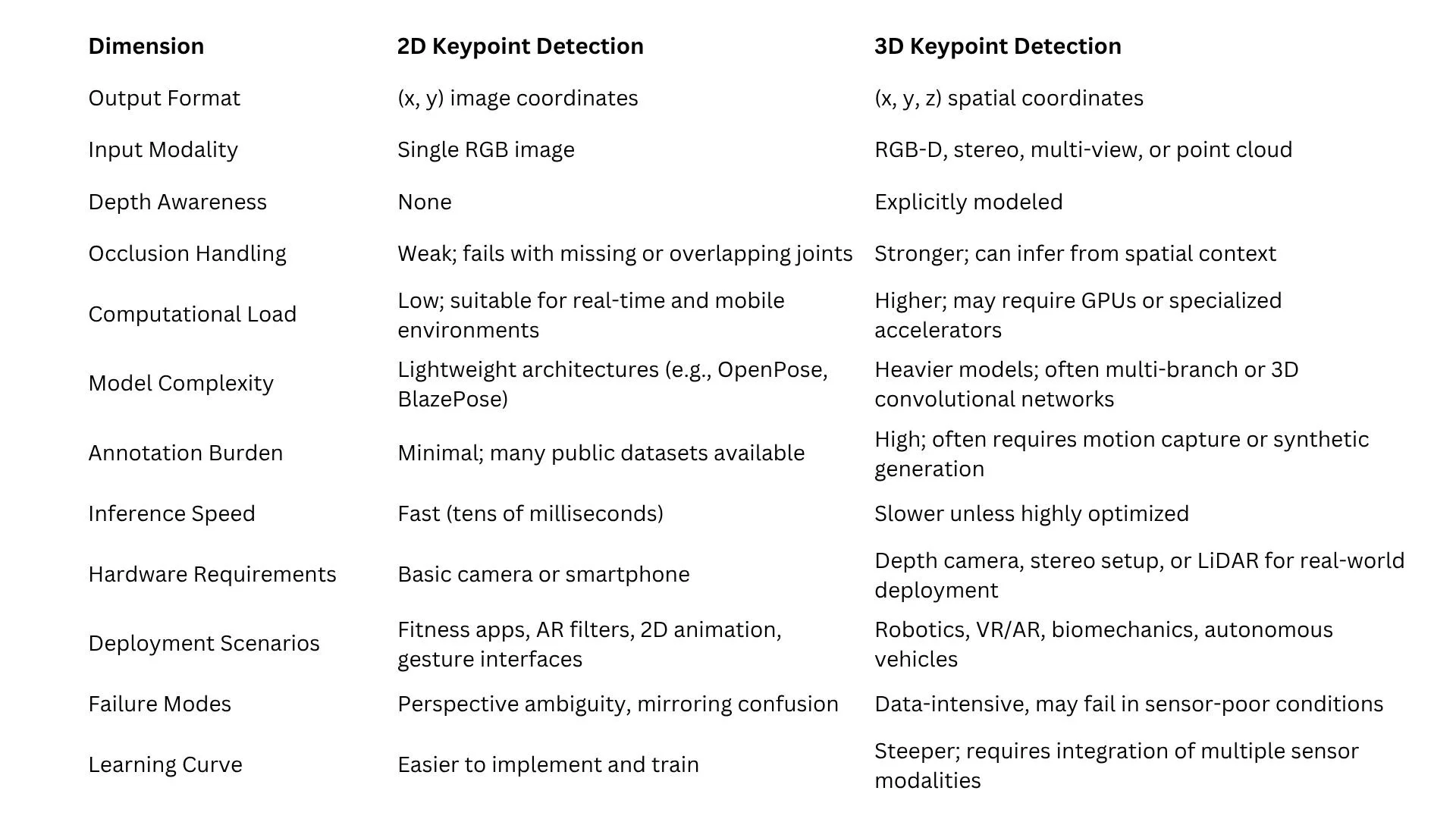

To make an informed decision between 2D and 3D keypoint detection, it’s important to break down their technical characteristics across a range of operational dimensions. This comparison covers data requirements, computational demands, robustness, and deployment implications to help teams evaluate trade-offs based on their system constraints and goals.

This comparison reveals a clear pattern: 2D methods are ideal for fast, lightweight applications where spatial depth is not critical, while 3D methods trade ease of deployment and speed for precision, robustness, and depth-aware reasoning.

In practice, this distinction often comes down to the deployment context. A fitness app delivering posture feedback through a phone camera benefits from 2D detection’s responsiveness and low overhead. Conversely, a surgical robot or VR system tracking fine motor movement in real-world space demands the accuracy and orientation-awareness only 3D detection can offer.

Understanding these technical differences is not just about choosing the best model; it’s about selecting the right paradigm for the job at hand. And increasingly, hybrid solutions that combine 2D feature extraction with depth-aware projection (as seen in recent research) are emerging as a way to balance performance with efficiency.

Conclusion

2D and 3D keypoint detection each play a pivotal role in modern computer vision systems, but their strengths lie in different areas. 2D keypoint detection offers speed, simplicity, and wide accessibility. It’s ideal for applications where computational resources are limited, latency is critical, and depth is not essential. With a mature ecosystem of datasets and tools, it remains the default choice for many commercial products and mobile-first applications.

In contrast, 3D keypoint detection brings a richer and more accurate spatial understanding. It is indispensable in high-precision domains where orientation, depth perception, and robustness to occlusion are non-negotiable. Although it demands more in terms of hardware, training data, and computational power, the resulting spatial insight makes it a cornerstone for robotics, biomechanics, autonomous systems, and immersive technologies.

As research continues to evolve, the gap between 2D and 3D detection will narrow further, unlocking new possibilities for hybrid architectures and cross-domain generalization. But for now, knowing when and why to use each approach remains essential to building effective, efficient, and robust vision-based systems.

References

Gong, B., Fan, L., Li, Y., Ma, C., & Bao, H. (2024). ZeroKey: Point-level reasoning and zero-shot 3D keypoint detection from large language models. arXiv. https://arxiv.org/abs/2412.06292

Wimmer, T., Wonka, P., & Ovsjanikov, M. (2024). Back to 3D: Few-shot 3D keypoint detection with back-projected 2D features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 3252–3261). IEEE. https://openaccess.thecvf.com/content/CVPR2024/html/Wimmer_Back_to_3D_Few-Shot_3D_Keypoint_Detection_with_Back-Projected_2D_CVPR_2024_paper.html

Patsnap Eureka. (2025, July). Human pose estimation: 2D vs. 3D keypoint detection explained. Eureka by Patsnap. https://eureka.patsnap.com/article/human-pose-estimation-2d-vs-3d-keypoint-detection

Frequently Asked Questions

1. Can I convert 2D keypoints into 3D without depth sensors?

Yes, to some extent. Techniques like monocular 3D pose estimation attempt to infer depth from a single RGB image using learning-based priors or geometric constraints. However, these methods are prone to inaccuracies in unfamiliar poses or occluded environments and generally don’t achieve the same precision as systems with true 3D inputs (e.g., stereo or depth cameras).

2. Are there unified models that handle both 2D and 3D keypoint detection?

Yes. Recent research has introduced multi-task and hybrid models that predict both 2D and 3D keypoints in a single architecture. Some approaches first estimate 2D keypoints and then lift them into 3D space using learned regression modules, while others jointly optimize both outputs.

3. What role do synthetic datasets play in 3D keypoint detection?

Synthetic datasets are crucial for 3D keypoint detection, especially where real-world 3D annotations are scarce. They allow the generation of large-scale labeled data from simulated environments using tools like Unity or Blender.

4. How do keypoint detection models perform under motion blur or low light?

2D and 3D keypoint models generally struggle with degraded image quality. Some recent approaches incorporate temporal smoothing, optical flow priors, or multi-frame fusion to mitigate issues like motion blur. However, low-light performance remains a challenge, especially for RGB-based systems that lack infrared or depth input.
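As one simple form of temporal smoothing, the sketch below applies an exponential moving average to keypoints across frames. This is an illustrative assumption rather than a method from any specific paper; the `smooth_keypoints` function and its `alpha` parameter are hypothetical names, and it assumes every frame contains the same keypoints in the same order.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def smooth_keypoints(frames: Sequence[Sequence[Point]], alpha: float = 0.5) -> List[List[Point]]:
    """Exponentially smooth per-frame keypoints (works for 2D or 3D points).

    alpha close to 1 follows new detections closely; alpha close to 0 smooths
    more aggressively but lags behind fast motion.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[List[Point]] = []
    previous: List[Point] = []
    for frame in frames:
        if not previous:
            # The first frame has no history, so it is passed through unchanged.
            current = [tuple(float(c) for c in point) for point in frame]
        else:
            current = [
                tuple(alpha * new + (1.0 - alpha) * old for new, old in zip(point, prev_point))
                for point, prev_point in zip(frame, previous)
            ]
        smoothed.append(current)
        previous = current
    return smoothed


if __name__ == "__main__":
    # A single keypoint that jumps from (0, 0) to (10, 10) and stays there.
    jittery = [[(0.0, 0.0)], [(10.0, 10.0)], [(10.0, 10.0)]]
    print(smooth_keypoints(jittery, alpha=0.5))
```

The trade-off is latency: heavier smoothing suppresses jitter from blurred frames but makes the estimate trail fast movements.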

5. What evaluation metrics are used to compare 2D and 3D keypoint models?

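For 2D models, common metrics include PCK (Percentage of Correct Keypoints) and mAP (mean Average Precision) computed from OKS (Object Keypoint Similarity), as in the COCO keypoint benchmark. For 3D models, common metrics include MPJPE (Mean Per Joint Position Error) and PA-MPJPE, its Procrustes-aligned variant, which measures the error after aligning the prediction to the ground truth with a similarity transform. Together, these quantify localization error, robustness, and structural accuracy.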
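Two of these metrics are simple enough to sketch directly. The code below is a minimal illustration of MPJPE and PCK for a single pose; the function names are hypothetical, and the PCK threshold is taken as an absolute distance for simplicity, whereas benchmarks usually express it as a fraction of a reference length such as head or torso size.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, ...]


def mpjpe(pred: Sequence[Point], gt: Sequence[Point]) -> float:
    """Mean Per Joint Position Error: average Euclidean distance over joints."""
    if len(pred) != len(gt) or not gt:
        raise ValueError("pred and gt must be non-empty and the same length")
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(gt)


def pck(pred: Sequence[Point], gt: Sequence[Point], threshold: float) -> float:
    """Percentage of Correct Keypoints: fraction of joints within `threshold`."""
    if len(pred) != len(gt) or not gt:
        raise ValueError("pred and gt must be non-empty and the same length")
    correct = sum(1 for p, g in zip(pred, gt) if math.dist(p, g) <= threshold)
    return correct / len(gt)


if __name__ == "__main__":
    # Hypothetical 3D pose with two joints, errors of 3 and 4 units.
    print(mpjpe([(0, 0, 0), (1, 0, 0)], [(0, 0, 3), (1, 4, 0)]))
    # Hypothetical 2D pose with two joints, errors of 5 px and 1 px.
    print(pck([(0, 0), (10, 0)], [(3, 4), (10, 1)], threshold=2.0))
```

Lower MPJPE is better, while higher PCK is better, so the two are not directly comparable across 2D and 3D models.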

6. How scalable is 3D keypoint detection across diverse environments?

Scalability depends heavily on the model’s robustness to lighting, background clutter, sensor noise, and occlusion. While 2D models generalize well due to broad dataset diversity, 3D models often require domain-specific tuning, especially in robotics or outdoor scenes. Advances in self-supervised learning and domain adaptation are helping bridge this gap.