DDD Solutions Engineering Team

23 Sep, 2025

Sensor fusion is the science of bringing together data from multiple sensors to create a clearer and more reliable picture of the world. Instead of relying on a single input, like a camera or a LiDAR unit, fusion combines their strengths and minimizes their weaknesses. This approach has become a cornerstone in the design of modern intelligent systems.

Its importance is evident across sectors that demand precision and safety. Autonomous vehicles must interpret crowded urban streets under varying weather conditions. Robots working in warehouses or on assembly lines require accurate navigation in dynamic spaces. Healthcare devices are expected to track patient vitals with minimal error. Defense and aerospace applications demand resilient systems capable of functioning in high-stakes and unpredictable environments. In each of these cases, a single sensor cannot provide the robustness required, but a fusion of multiple sensors can.

In this blog, we will explore the fundamentals of sensor fusion, why combining multiple sensors leads to more accurate and reliable systems, the key domains where it is transforming industries, the major challenges in implementation, and how organizations can build robust, data-driven fusion solutions.

What is Sensor Fusion?

At its core, sensor fusion is the process of integrating information from multiple sensors to form a more complete and accurate understanding of the environment. Rather than treating each sensor in isolation, fusion systems combine their outputs into a single, coherent picture that can be used for decision-making. This integration reduces uncertainty and allows machines to operate with greater confidence in complex or unpredictable conditions.

Researchers typically describe sensor fusion at three levels.

Data-level fusion combines raw signals from sensors before any interpretation, providing the richest input but also the heaviest computational load.

Feature-level fusion merges processed outputs such as detected edges, motion vectors, or depth maps, balancing detail with efficiency.

Decision-level fusion integrates conclusions drawn independently by different sensors, producing a final decision that benefits from multiple perspectives.

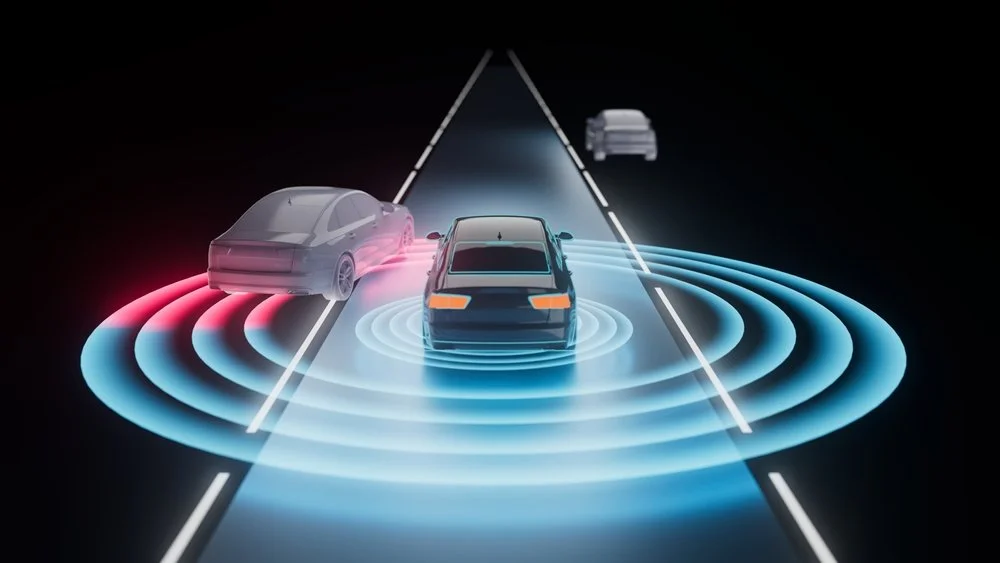
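As a rough illustration of the decision level, the sketch below combines per-sensor classifications with a confidence-weighted vote. The function name `fuse_decisions`, the labels, and the confidence values are all hypothetical, and real systems often use more elaborate schemes.

```python
from collections import defaultdict


def fuse_decisions(decisions):
    """Confidence-weighted vote over per-sensor decisions.

    `decisions` is a list of (label, confidence) pairs, one per sensor,
    with confidence in [0, 1]. Returns (winning_label, share_of_weight).
    """
    totals = defaultdict(float)
    for label, confidence in decisions:
        totals[label] += confidence
    total_weight = sum(totals.values())
    if total_weight <= 0:
        raise ValueError("need at least one decision with positive confidence")
    winner = max(totals, key=totals.get)
    return winner, totals[winner] / total_weight


# Hypothetical per-sensor outputs for one object in front of a vehicle.
votes = [
    ("pedestrian", 0.9),  # camera classifier
    ("pedestrian", 0.6),  # LiDAR cluster classifier
    ("unknown", 0.4),     # RADAR track classifier
]
label, share = fuse_decisions(votes)
```

Here the two sensors that agree outweigh the one that does not, so the fused label is `pedestrian` with about 79% of the total weight.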

A practical example is autonomous driving. Cameras provide detailed images of road markings and traffic lights. LiDAR offers precise three-dimensional maps of the surroundings. RADAR supplies range and velocity information even in poor weather. Together, these complementary inputs create a robust perception system capable of handling the complexity of real-world driving.

Why Multiple Sensors are Better Than One

Relying on a single sensor exposes systems to blind spots and vulnerabilities. Cameras, for example, provide rich semantic detail but struggle in low light or fog. LiDAR excels at generating precise depth information but can be costly and less effective in heavy rain. RADAR penetrates poor weather but lacks fine resolution. By combining these technologies, sensor fusion leverages strengths while compensating for weaknesses.

Redundancy and reliability

If one sensor fails or becomes unreliable due to environmental conditions, others can maintain system performance. This redundancy is essential for applications such as autonomous vehicles, where safety is paramount and failures cannot be tolerated.
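One simple way to picture this redundancy is a priority list with health checks. The sketch below is a minimal example under assumed names (`RangeReading`, `first_healthy`) and an assumed freshness threshold. It is not a description of any particular vehicle stack.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RangeReading:
    sensor: str
    distance_m: Optional[float]  # None means the sensor produced no reading
    age_s: float                 # time elapsed since the reading was taken


def first_healthy(readings, max_age_s=0.2):
    """Return the first reading, in priority order, that is present and fresh."""
    for reading in readings:
        if reading.distance_m is not None and reading.age_s <= max_age_s:
            return reading
    return None  # every sensor is unavailable: the caller must enter a safe state


# Hypothetical snapshot: the LiDAR returns nothing, the RADAR still works.
snapshot = [
    RangeReading("lidar", None, 0.05),
    RangeReading("radar", 41.8, 0.05),
]
chosen = first_healthy(snapshot)
```

In this snapshot the function falls back to the RADAR reading. When nothing is healthy it returns `None`, so the loss of all sensors is explicit and cannot be silently ignored.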

Complementary sensing

Each sensor type captures a different aspect of the environment. LiDAR provides depth, cameras supply semantics like color and texture, and inertial measurement units (IMUs) track orientation and movement. Fusing these inputs produces a richer understanding than any single stream could provide.

Noise reduction

Individual sensors inevitably generate errors or false readings, but integrating data across multiple sources helps filter out anomalies and improve signal quality. This is particularly important in environments where accuracy is critical, such as industrial systems or surgical robotics.
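A standard way to see why combining sources reduces noise is inverse-variance weighting, which gives more trust to the less noisy sensor. The sketch below assumes the sensors measure the same quantity with independent errors and known variances. The function name and the numbers are illustrative.

```python
def fuse_inverse_variance(measurements):
    """Fuse independent estimates of one quantity.

    `measurements` is a list of (value, variance) pairs with variance > 0.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    return fused, 1.0 / total


# Hypothetical distance estimates in metres: (value, variance).
lidar = (10.2, 0.04)
radar = (9.8, 0.16)
fused_value, fused_variance = fuse_inverse_variance([lidar, radar])
```

With these numbers the fused estimate is 10.12 m with variance 0.032, which is lower than the variance of either sensor on its own.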

Key Domains and Applications of Sensor Fusion

Sensor fusion is not limited to a single industry. Its value is evident wherever accuracy, reliability, and resilience are mission-critical. The following domains illustrate how multiple sensors working together outperform single-sensor systems.

Autonomous Vehicles

Autonomous driving is one of the most visible examples of sensor fusion in action. Cars integrate cameras, LiDAR, RADAR, GPS, and IMUs to perceive their surroundings and make real-time driving decisions. Cameras identify road signs and traffic lights, LiDAR provides precise 3D maps, RADAR measures speed and distance in poor weather, and IMUs track the vehicle’s orientation.

Robotics

Robots operating in unstructured environments face challenges that single sensors cannot overcome. Mobile robots often fuse cameras, LiDAR, and IMUs to navigate cluttered warehouses, hospitals, or outdoor terrain. This combination allows robots to avoid obstacles, map their surroundings, and move safely in real time.

Healthcare

In healthcare, precision and reliability are essential. Modern wearable devices integrate multiple biosensors, such as heart rate monitors, accelerometers, and oxygen sensors, to provide continuous patient monitoring.
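As one hedged example of how biosensors can support each other, a wearable might use its accelerometer to discard heart-rate samples taken during heavy motion, when such readings tend to be less trustworthy. The rule, the threshold, and the function name below are assumptions for illustration, not a clinical method.

```python
import math


def gate_heart_rate(samples, max_motion_g=0.3):
    """Keep heart-rate samples recorded while the wearer was nearly still.

    `samples` is a list of (bpm, (ax, ay, az)) with acceleration in g.
    Motion is approximated as how far |a| deviates from 1 g (gravity only).
    """
    kept = []
    for bpm, (ax, ay, az) in samples:
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        if abs(magnitude - 1.0) <= max_motion_g:
            kept.append(bpm)
    return kept


# Hypothetical samples: the middle one was taken during vigorous movement.
samples = [
    (72, (0.0, 0.0, 1.0)),
    (140, (0.9, 0.8, 1.4)),
    (74, (0.05, 0.0, 0.98)),
]
trusted = gate_heart_rate(samples)
```

Only the two samples recorded at rest survive the gate, so the implausible spike does not reach downstream monitoring.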

Industrial and Manufacturing

Factories and production lines are adopting sensor fusion to drive efficiency and predictive maintenance. IoT-enabled facilities often combine pressure, vibration, and temperature sensors to anticipate machine failures before they occur.
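A very small version of this idea scores how far the latest pressure, vibration, and temperature readings sit from their normal ranges, then averages those scores. The sketch below uses z-scores for that. The channel names, sample values, and alert threshold are hypothetical.

```python
from statistics import mean, stdev


def anomaly_score(history, latest):
    """Average absolute z-score of the latest reading across all channels.

    `history` maps channel name to readings taken during normal operation.
    `latest` maps channel name to the current reading.
    """
    scores = []
    for channel, past in history.items():
        sigma = stdev(past)
        if sigma == 0:
            raise ValueError(f"no variation in history for {channel}")
        scores.append(abs(latest[channel] - mean(past)) / sigma)
    return sum(scores) / len(scores)


# Hypothetical baseline for one machine.
history = {
    "pressure_bar": [5.0, 5.1, 4.9, 5.0, 5.0],
    "vibration_mm_s": [1.0, 1.2, 0.9, 1.1, 1.0],
    "temperature_c": [60, 61, 59, 60, 62],
}
normal = {"pressure_bar": 5.05, "vibration_mm_s": 1.1, "temperature_c": 60.5}
drifting = {"pressure_bar": 5.1, "vibration_mm_s": 1.5, "temperature_c": 64}

ALERT_THRESHOLD = 1.0  # assumed cut-off, to be tuned per machine
needs_inspection = anomaly_score(history, drifting) > ALERT_THRESHOLD
```

The drifting readings move all three channels in the same direction, so the combined score crosses the threshold even though no single reading is extreme.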

Remote Sensing and Defense

Defense, aerospace, and environmental monitoring rely heavily on multi-platform fusion. Satellites, drones, and ground sensors collect data that is integrated for decision-making in scenarios ranging from disaster response to surveillance.

Major Challenges in Sensor Fusion

While the benefits of sensor fusion are clear, implementing it effectively is far from straightforward. The process introduces technical and operational challenges that can affect reliability, scalability, and cost.

Complexity and computational demands

Fusing data from multiple sensors requires significant processing power. Raw data streams must be synchronized, filtered, and integrated in real time, often under strict latency constraints. This increases the computational load and demands specialized hardware or optimized algorithms, particularly in safety-critical systems like autonomous vehicles.
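The synchronization step can be sketched as nearest-timestamp matching between two streams that run at different rates. The example below assumes sorted timestamps in seconds and a hypothetical tolerance. Production systems usually rely on hardware triggering or interpolation as well.

```python
from bisect import bisect_left


def match_nearest(camera_ts, lidar_ts, tolerance_s=0.02):
    """Pair each camera timestamp with the nearest LiDAR timestamp.

    Both lists must be sorted ascending and expressed in seconds.
    Pairs further apart than `tolerance_s` are dropped.
    """
    pairs = []
    for t in camera_ts:
        i = bisect_left(lidar_ts, t)
        # Only the neighbours on either side of the insertion point can be nearest.
        candidates = lidar_ts[max(i - 1, 0):i + 1]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda s: abs(s - t))
        if abs(nearest - t) <= tolerance_s:
            pairs.append((t, nearest))
    return pairs


# Hypothetical streams: a 30 Hz camera and a 10 Hz LiDAR.
camera_ts = [0.000, 0.033, 0.067, 0.100]
lidar_ts = [0.005, 0.105]
pairs = match_nearest(camera_ts, lidar_ts)
```

Two of the four camera frames find a LiDAR sweep within 20 ms. The other two are left unmatched so they cannot be fused with stale data.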

Calibration issues

For fusion to work, sensors must be aligned both spatially and temporally. Even minor calibration errors can introduce distortions that degrade performance. For example, a camera and a LiDAR mounted on the same vehicle must stay precisely aligned so that each depth measurement lands on the correct pixels in the image. Maintaining this calibration over time, especially in harsh environments, remains a difficult problem.
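To give a sense of scale, the sketch below projects a LiDAR point into a simple pinhole camera and measures how far it shifts when the assumed mounting angle is off by one degree. The focal length, image centre, and point position are hypothetical values, not figures for any specific sensor.

```python
import math


def project_to_column(point_xyz, yaw_error_deg=0.0, focal_px=1000.0, cx=960.0):
    """Horizontal pixel coordinate of a 3D point in a pinhole camera.

    The point is given in metres with x to the right and z forward.
    `yaw_error_deg` models a miscalibrated rotation about the vertical axis.
    """
    x, _, z = point_xyz
    a = math.radians(yaw_error_deg)
    x_cam = x * math.cos(a) + z * math.sin(a)
    z_cam = -x * math.sin(a) + z * math.cos(a)
    return cx + focal_px * x_cam / z_cam


point = (0.0, 0.0, 20.0)  # an object 20 m straight ahead
ideal = project_to_column(point)
miscalibrated = project_to_column(point, yaw_error_deg=1.0)
shift_px = miscalibrated - ideal
```

Under these assumptions a one-degree yaw error moves the projected point by roughly 17 pixels, which is enough to attach a depth value to the wrong object in a crowded scene.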

Data overload

Multiple high-resolution sensors generate massive volumes of data. Managing bandwidth, storage, and processing pipelines is a constant challenge, especially when real-time decisions are required. In industrial environments, this data volume can overwhelm traditional infrastructure, forcing a shift to edge computing and advanced data management strategies.
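A back-of-the-envelope calculation shows how quickly raw volumes add up. The resolution, frame rate, point rate, and bytes per point below are illustrative assumptions, not specifications of any product.

```python
def camera_rate_mb_s(width, height, bytes_per_pixel, fps):
    """Uncompressed camera data rate in megabytes per second."""
    return width * height * bytes_per_pixel * fps / 1e6


def lidar_rate_mb_s(points_per_second, bytes_per_point):
    """Raw LiDAR point data rate in megabytes per second."""
    return points_per_second * bytes_per_point / 1e6


# Hypothetical rig: six 1080p RGB cameras at 30 fps plus one LiDAR.
one_camera = camera_rate_mb_s(1920, 1080, 3, 30)
one_lidar = lidar_rate_mb_s(1_000_000, 16)
total_mb_s = 6 * one_camera + one_lidar
```

With these assumed numbers a single camera produces about 187 MB/s, and the whole rig produces a little over 1.1 GB/s of raw data before any compression.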

Failure amplification

If not carefully managed, fusion can amplify sensor errors instead of correcting them. A poorly calibrated or faulty sensor can introduce noise that contaminates the fused output, leading to worse outcomes than relying on a single reliable sensor.
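The effect is easy to reproduce with a naive average. In the sketch below, one hypothetical faulty sensor drags the mean far from the true value, while a median, used here as one simple robust alternative, is barely affected.

```python
from statistics import mean, median

TRUE_DISTANCE_M = 10.0

# Hypothetical readings in metres; the last sensor is faulty.
readings = [10.1, 9.9, 10.0, 55.0]

naive = mean(readings)     # every sensor trusted equally
robust = median(readings)  # the outlier has little influence

naive_error = abs(naive - TRUE_DISTANCE_M)
robust_error = abs(robust - TRUE_DISTANCE_M)
best_single_error = min(abs(r - TRUE_DISTANCE_M) for r in readings)
```

The naive average lands at 21.25 m, more than 11 m off, which is worse than any of the three healthy sensors alone. The median stays at 10.05 m.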

How We Can Help

Building effective sensor fusion systems depends on high-quality data. Cameras, LiDAR, RADAR, and biosensors all generate vast amounts of raw information, but without accurate labeling, integration, and processing, this data cannot be turned into actionable intelligence. This is where Digital Divide Data (DDD) provides critical value.

DDD specializes in supporting organizations that rely on sensor fusion by delivering:

- Multimodal data annotation: Precise labeling for LiDAR point clouds, camera images, RADAR data, and IMU streams, enabling fusion algorithms to align and learn effectively.

- Domain-specific expertise: Teams with experience across automotive, robotics, healthcare, industrial IoT, and defense ensure that annotations reflect real-world conditions and operational requirements.

- Scalable workflows: Proven processes that can handle large, complex datasets while maintaining consistency and quality.

- Quality assurance: Rigorous multi-step checks that ensure the reliability of labeled data, reducing downstream risks in model training and deployment.

By combining technical expertise with scalable human-in-the-loop processes, DDD helps organizations strengthen the data backbone of their fusion systems. This ensures that projects can move from development to deployment with confidence in both accuracy and safety.

Conclusion

Sensor fusion is no longer an optional enhancement in advanced systems; it is a foundational requirement. The integration of multiple sensors provides the redundancy, accuracy, and resilience that modern applications demand. From autonomous vehicles navigating crowded roads, to robots operating in dynamic environments, to healthcare devices monitoring patient health, the ability to combine and interpret diverse streams of data has become essential.

As artificial intelligence matures, sensor fusion is likely to shift from rigid, rule-based systems toward adaptive models that learn from context and environment. That transition should produce machines that are not only accurate but also more resilient, transparent, and trustworthy. Sensor fusion is the bridge between raw sensing and meaningful intelligence, allowing machines to perceive and respond to the world more robustly.

Looking to strengthen your AI systems with reliable, multimodal data for sensor fusion?

Partner with Digital Divide Data to power accuracy, safety, and scalability in your next-generation solutions.

FAQs

Q1. What is the difference between sensor fusion and sensor integration?

Sensor integration refers to the process of connecting different sensors so they can work within the same system, while sensor fusion goes a step further by combining the data from these sensors to produce more accurate and reliable results.

Q2. How does sensor fusion improve safety in autonomous systems?

By combining multiple data sources, fusion reduces the risk that a single sensor failure compromises the entire system. For example, if a camera is blinded by glare, LiDAR and RADAR can still provide usable data for navigation, reducing the risk of accidents.

Q3. How does edge computing relate to sensor fusion?

Since fusion requires real-time processing of large volumes of data, edge computing helps by bringing computation closer to the sensors themselves. This reduces latency and makes it possible to run fusion algorithms without depending on cloud infrastructure.

Q4. What role does machine learning play in sensor fusion today?

Machine learning, particularly deep learning, is increasingly used to replace or augment traditional fusion methods like Kalman filters. These models can learn complex, non-linear relationships between sensor inputs, improving performance in dynamic or uncertain environments.
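For readers unfamiliar with the traditional methods mentioned here, the sketch below is a minimal one-dimensional Kalman filter that smooths noisy measurements of a quantity assumed to be nearly constant. The noise variances, the starting values, and the measurements are assumptions chosen for illustration.

```python
def kalman_1d(measurements, meas_var, process_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter for a nearly constant state.

    Returns the list of state estimates after each measurement.
    """
    x, p = x0, p0
    estimates = []
    for z in measurements:
        p = p + process_var     # predict: state unchanged, uncertainty grows
        k = p / (p + meas_var)  # Kalman gain: how much to trust the measurement
        x = x + k * (z - x)     # update the estimate toward the measurement
        p = (1.0 - k) * p       # uncertainty shrinks after the update
        estimates.append(x)
    return estimates


# Hypothetical noisy range readings of an object about 10 m away.
readings = [10.3, 9.6, 10.2, 9.9, 10.1]
estimates = kalman_1d(readings, meas_var=0.25, process_var=1e-4, x0=0.0, p0=100.0)
```

The large initial uncertainty `p0` makes the filter follow the first reading closely, after which each new measurement nudges the estimate by a progressively smaller amount. Learned fusion models aim to capture relationships that a fixed linear filter like this cannot.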

Q5. Which industries are expected to adopt sensor fusion next?

Beyond current uses in vehicles, robotics, healthcare, manufacturing, and defense, sensor fusion is expected to see growth in smart cities, precision agriculture, and environmental monitoring, where diverse data sources must be combined for effective decision-making.