By Umang Dayal

15 April, 2025

Artificial intelligence can only be as smart as the data it learns from. And when that data is mislabeled, inconsistent, or full of noise, the result is an unreliable AI system that performs poorly in the real world. Poor data annotation can quietly sabotage your project, whether you’re building a self-driving car, a recommendation engine, or a healthcare diagnostic tool.

But the good news? Unreliable data annotation is fixable. You just need the right processes, tools, and mindset. In this blog, we’ll walk through why data annotation often goes wrong and share five practical strategies you can use to fix it and prevent future issues.

Why Data Annotation Often Goes Wrong

Data annotation seems straightforward: labeling images, text, or video so machines can understand and learn. But in practice, it’s far more nuanced.

Inconsistency

Different annotators might interpret the same task in different ways, especially if the instructions are vague or incomplete. This is incredibly common when teams scale up quickly without formalizing their labeling guidelines.

Lack of training

Many annotation projects are outsourced to contractors or gig workers who may not have deep domain knowledge. Without proper onboarding or examples, they’re left to guess. And when there’s no feedback loop, the same small mistakes get repeated across the dataset.

Bias

Annotators, like all humans, bring their own perspectives, cultural experiences, and assumptions to the task. Without checks and balances, this bias can creep into the data and affect the model’s decisions. Add to this the overuse of automated labeling tools without human supervision, and you have a recipe for unreliable labels.

The result? AI models that are inaccurate, unfair, or even unsafe. But now that we know the problems, let’s dive into how to fix them.

How to Fix Unreliable Data Annotation

Build Strong Guidelines and Train Your Annotators Well

Clear annotation guidelines are like a compass; they keep everyone pointing in the same direction. Without them, you’re asking your team to make their own judgment calls on complex cases, which leads to inconsistency and confusion.

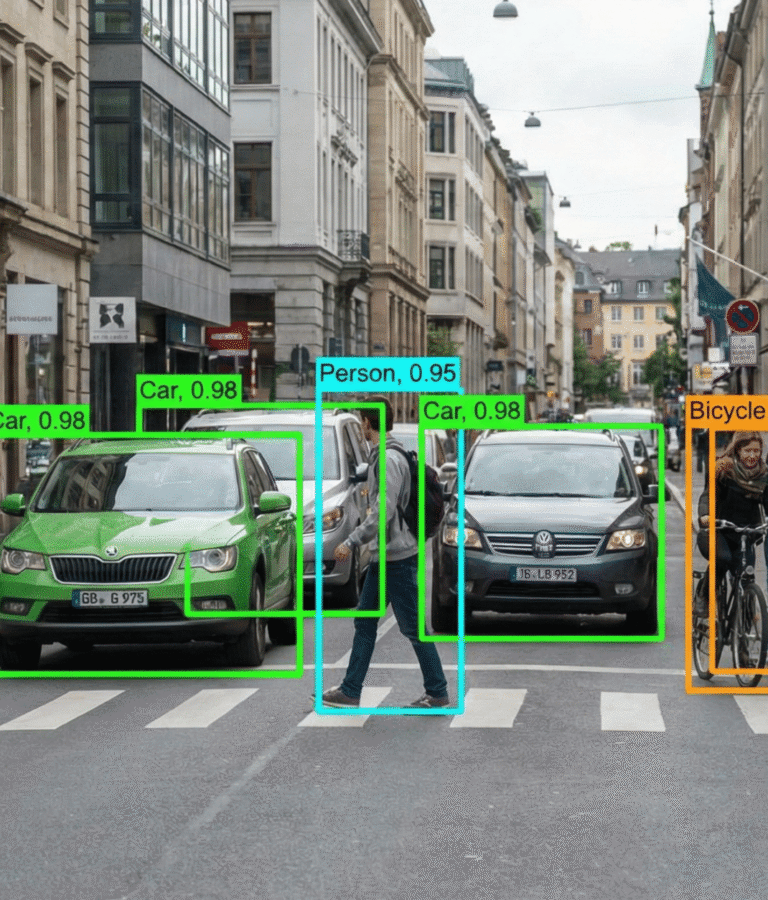

For example, in an image labeling task for self-driving cars, one annotator might label a pedestrian pushing a stroller as two separate entities, while another might label it as one. Your guidelines should settle cases like this before labeling starts. They should explain the “what” and the “why”: what are you asking annotators to do, and why does it matter? Include visuals, real examples, and edge cases. Spell out how to handle difficult scenarios and what annotators should do when they’re unsure. Use consistent language, and revise the document as you learn more from the actual annotation work.

But documentation isn’t enough on its own. You also need to train your annotators, especially when you’re dealing with complex or subjective tasks. Start with a kickoff session where you walk them through the guidelines. Review their first few batches and offer corrections and explanations. Over time, host calibration sessions to align on tricky examples. This ensures consistency across annotators and over time. Investing in training upfront may slow you down a little, but it will save you a ton of rework and errors down the line.
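To make calibration sessions measurable, one option is to score each annotator against a small gold set: examples whose correct labels the team has already agreed on. The sketch below is a minimal illustration under assumed conditions: labels are plain strings keyed by a hypothetical item ID, and `calibration_report` and the 0.9 accuracy threshold are illustrative choices, not a standard.

```python
from collections import defaultdict


def calibration_report(gold, annotations, threshold=0.9):
    """Score each annotator against agreed gold labels (hypothetical helper).

    gold: dict mapping item_id -> agreed label
    annotations: list of (annotator, item_id, label) tuples
    Returns a dict mapping annotator -> (accuracy, needs_review).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for annotator, item_id, label in annotations:
        if item_id not in gold:
            continue  # only items in the gold set count toward calibration
        total[annotator] += 1
        if label == gold[item_id]:
            correct[annotator] += 1
    report = {}
    for annotator, n in total.items():
        accuracy = correct[annotator] / n
        report[annotator] = (accuracy, accuracy < threshold)
    return report


# Illustrative gold set and annotations (made-up values)
gold = {"img1": "pedestrian", "img2": "pedestrian_with_stroller", "img3": "cyclist"}
annotations = [
    ("ana", "img1", "pedestrian"),
    ("ana", "img2", "pedestrian_with_stroller"),
    ("ana", "img3", "cyclist"),
    ("ben", "img1", "pedestrian"),
    ("ben", "img2", "pedestrian"),
    ("ben", "img3", "cyclist"),
]
for annotator, (acc, flagged) in sorted(calibration_report(gold, annotations).items()):
    print(f"{annotator}: {acc:.2f}{' <- discuss in calibration' if flagged else ''}")
```

A low score is a prompt for discussion rather than a verdict: if several annotators miss the same gold item, the guideline for that case is probably what needs fixing.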

Set Up Quality Assurance (QA) Loops

Quality assurance is not a one-time step; it’s a continuous process. Think of it as your safety net. Even your best annotators will make mistakes occasionally, especially on repetitive or high-volume tasks. That’s why regular QA checks are critical. One of the simplest ways to do this is random sampling: select a small portion of the annotated data and have a lead annotator or QA specialist review it. This can quickly surface recurring issues like label drift, missed annotations, or misunderstandings of the guidelines.
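Random sampling can be as simple as drawing a reproducible fraction of each finished batch for a reviewer. The sketch below assumes items are identified by string IDs and that the reviewer records a pass/fail verdict per sampled item; the function names and the 2% sampling rate are hypothetical.

```python
import math
import random


def draw_qa_sample(item_ids, rate=0.05, seed=None):
    """Randomly pick a fraction of annotated items for QA review.

    At least one item is returned for any non-empty batch.
    """
    if not item_ids:
        return []
    k = max(1, math.ceil(len(item_ids) * rate))
    rng = random.Random(seed)  # seeded RNG so the same sample can be re-drawn
    return rng.sample(item_ids, k)


def estimated_error_rate(review_results):
    """review_results: dict item_id -> True if the reviewer judged the label correct."""
    if not review_results:
        return 0.0
    errors = sum(1 for ok in review_results.values() if not ok)
    return errors / len(review_results)


batch = [f"item-{i}" for i in range(1000)]
sample = draw_qa_sample(batch, rate=0.02, seed=42)
print(len(sample))  # 20

# Reviewer verdicts for the sampled items (made-up: one error found)
reviews = {item: True for item in sample}
reviews[sample[0]] = False
print(estimated_error_rate(reviews))  # 0.05
```

Fixing the seed lets a second reviewer re-draw exactly the same sample, and tracking the estimated error rate batch by batch is one simple way to notice label drift.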

Another effective method is consensus labeling. Have multiple annotators label the same data and measure how much they agree. When there’s low agreement, it signals ambiguity in either the task or the instructions and gives you a chance to clarify. Additionally, consider building feedback loops. When mistakes are found, don’t just fix them; share the findings with the original annotators. This turns every error into a learning opportunity and reduces future inconsistencies. You can also track annotator performance over time and offer incentives or bonuses for high accuracy. A good QA system ensures your annotations stay reliable even as your project scales.
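For consensus labeling, raw percent agreement can look high simply because one label dominates the data. Cohen's kappa corrects for this by comparing observed agreement with the agreement expected by chance, kappa = (p_o - p_e) / (1 - p_e). The sketch below is a plain-Python version for two annotators who labeled the same items; scikit-learn's `sklearn.metrics.cohen_kappa_score` offers a tested implementation, and measures such as Fleiss' kappa or Krippendorff's alpha extend the idea to more than two annotators. What counts as "low" agreement is a threshold your team would need to choose.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # p_o: fraction of items where the two annotators gave the same label
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    # p_e: agreement expected by chance, from each annotator's label frequencies
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label throughout; treated as perfect
        # agreement here by convention (kappa is otherwise undefined).
        return 1.0
    return (observed - expected) / (1 - expected)


a = ["cat", "cat", "dog", "dog"]
b = ["cat", "dog", "dog", "dog"]
print(cohens_kappa(a, b))  # 0.5
```

Here the annotators agree on 3 of 4 items (75%), but chance alone would produce 50% agreement given their label frequencies, so kappa is 0.5.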

Combine Automation with Human Oversight

AI-powered annotation tools are becoming more popular, and for good reason: they speed up the process by pre-labeling data based on patterns learned from previously labeled examples. This is great for repetitive tasks like drawing bounding boxes or tagging entities in text. But automation isn’t perfect, especially in edge cases or tasks that require judgment.

That’s where human oversight becomes crucial. Humans should always review machine-labeled data, especially in high-stakes use cases like medical diagnostics or autonomous vehicles. This review doesn’t need to be exhaustive; you can prioritize a sample of labels for review or focus on low-confidence predictions from the tool.
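One way to express "focus on low-confidence predictions, plus a sample of the rest" is as a simple routing rule. This is a minimal sketch assuming the pre-labeling tool attaches a confidence score between 0 and 1 to each label; the `PreLabel` type, the 0.8 threshold, and the 5% spot-check rate are hypothetical, and real tools report confidence in different ways.

```python
import random
from dataclasses import dataclass


@dataclass
class PreLabel:
    item_id: str
    label: str
    confidence: float  # tool's score in [0, 1]; assumed to be reasonably calibrated


def select_for_review(prelabels, threshold=0.8, spot_check_rate=0.05, seed=None):
    """Route every low-confidence pre-label to humans, plus a random spot check of the rest."""
    rng = random.Random(seed)
    low = [p for p in prelabels if p.confidence < threshold]
    high = [p for p in prelabels if p.confidence >= threshold]
    k = round(len(high) * spot_check_rate)
    spot_checks = rng.sample(high, k) if k else []
    return low + spot_checks


# Illustrative pre-labels (made-up values)
prelabels = [
    PreLabel("a", "car", 0.97),
    PreLabel("b", "pedestrian", 0.55),
    PreLabel("c", "cyclist", 0.62),
    PreLabel("d", "car", 0.91),
]
to_review = select_for_review(prelabels, threshold=0.8, spot_check_rate=0.5, seed=7)
# 'b' and 'c' (low confidence) plus one randomly chosen high-confidence item
print([p.item_id for p in to_review])
```

The random spot check matters because a model can be confidently wrong; reviewing only low-confidence items would never surface those errors.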

You can also use automation to assist human annotators rather than replace them. For example, a tool might highlight objects in an image but let the annotator confirm or adjust the label. This hybrid model offers the best of both worlds: speed and accuracy.

Reduce Bias with Diverse, Well-Informed Teams

Bias in data annotation isn’t always obvious, but it can have serious consequences. If your annotation team is too homogeneous, whether geographically, culturally, or demographically, they may unintentionally introduce skewed labels that don’t reflect the diversity of real-world users.

For example, imagine building a facial recognition model trained mostly on data labeled by people from one region or ethnicity. The model may fail when applied to faces from other groups, leading to biased outcomes. To mitigate this, aim for diversity in your annotation teams. Bring in people from different backgrounds and regions. If that’s not possible, at least rotate team members and introduce multiple viewpoints during review sessions.

Also, teach your annotators how to spot and avoid bias. Include examples of subjective labeling and explain how it can impact the final model. When people understand the bigger picture, they’re more likely to be thoughtful and objective in their work.

Use Active Learning to Focus on What Matters

Not all data is equally valuable to your model. In fact, a large portion of your dataset might be redundant: examples so similar to ones the model has already learned from that labeling them adds little. So why spend time labeling them? Active learning solves this by letting the model guide the annotation process. It flags the data points it’s most uncertain about, usually the trickiest edge cases or ambiguous examples, and sends them to humans for labeling. This way, your annotators focus on the examples most likely to improve the model’s performance.

It’s a smarter, more efficient way to annotate. You get more impact from fewer labels, and your model learns faster. This approach is especially useful when you’re working with limited time, budget, or annotation bandwidth.
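Uncertainty sampling is one common way to implement the selection step in active learning. The sketch below ranks unlabeled items by the entropy of the model's predicted class probabilities and returns the most uncertain ones for annotation. It assumes your model outputs one probability per class; the item IDs and probabilities are made up for illustration, and least-confidence or margin sampling are equally valid alternatives to entropy.

```python
import math


def entropy(probs):
    """Shannon entropy of a predicted class distribution (higher = more uncertain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_most_uncertain(predictions, budget):
    """Return the `budget` unlabeled items whose predictions have the highest entropy.

    predictions: dict item_id -> list of class probabilities from the current model
    """
    ranked = sorted(predictions, key=lambda item: entropy(predictions[item]), reverse=True)
    return ranked[:budget]


predictions = {
    "img-001": [0.98, 0.01, 0.01],  # model is confident
    "img-002": [0.40, 0.35, 0.25],  # model is torn between classes
    "img-003": [0.70, 0.20, 0.10],
    "img-004": [0.34, 0.33, 0.33],  # close to uniform: most uncertain
}
print(select_most_uncertain(predictions, budget=2))  # ['img-004', 'img-002']
```

In a full loop, you would label the selected items, retrain the model, re-score the remaining pool, and repeat until the model stops improving or the labeling budget runs out.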


How Digital Divide Data Can Help

At Digital Divide Data (DDD), we understand that high-quality data is at the heart of successful AI. Our role isn’t just to label data; it’s to help you build smarter, more reliable models by ensuring that the data you train them on is accurate, consistent, and free from bias. Here’s how we support this mission:

Clear, Collaborative Onboarding

We start every project by sitting down with your team to fully understand the use case and define what success looks like. Together, we create detailed guidelines that remove ambiguity and cover tricky edge cases. This ensures our annotators are working from a shared understanding and that we’re aligned with your goals from the beginning.

Real-World Annotator Training

Before any labeling begins, we train our team using your data and task-specific examples. We don’t just explain how to do the work; we also explain why it matters. This approach helps our annotators make better decisions, especially when the work requires judgment or context. The result is fewer mistakes and more consistent outputs.

Quality Checks Built Into the Workflow

Quality isn’t something we add at the end; it’s something we build into every step. We use peer reviews, senior-level checks, and inter-annotator agreement tracking to catch issues early and often. Feedback loops ensure that mistakes are corrected and used as learning opportunities.

Flexible Integration with Your Tools

Whether you’re working with fully manual annotation or a machine-in-the-loop setup, we’re comfortable adapting to your workflow. If you’ve got automated pre-labeling in place, we can step in to validate and fine-tune those labels. Our role is to complement your tools with human oversight that improves precision.

Diverse, Mission-Driven Teams

Our team comes from a wide range of backgrounds, and that diversity shows up in the quality of our work. By providing opportunities to underserved communities, we not only create economic impact but also build teams that reflect a broader range of perspectives. This helps reduce annotation bias and makes your models more inclusive.

Scalable Support Without Compromising Quality

We can quickly ramp up team size while maintaining quality through strong project management and continuous oversight. No matter the size of your project, we make sure you get reliable, high-quality results.

Conclusion

In the world of AI, your models are only as good as the data they’re trained on, and that starts with precise, thoughtful annotation. Poor labeling can quietly undermine even the most sophisticated systems, leading to biased outcomes, inconsistent behavior, and costly setbacks.

But with the right approach, annotation doesn’t have to be a bottleneck; it can be a competitive advantage. Partner with DDD to ensure your AI models are built on a foundation of high-quality, bias-free data. Contact us today to get started.