By Umang Dayal

July 16, 2025

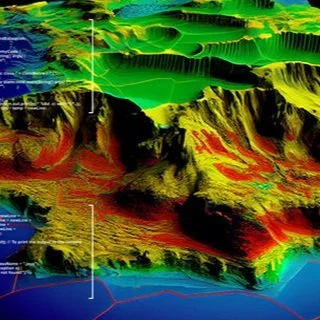

In modern warfare and defense operations, information superiority has become just as critical as firepower. At the heart of this transformation lies geospatial data, an expansive category encompassing satellite imagery, LiDAR scans, terrain models, sensor telemetry, and location-based metadata. These spatial datasets provide the contextual backbone for understanding and acting upon physical environments, whether for troop movement, surveillance, or targeting operations.

Artificial intelligence (AI) has emerged as a force multiplier within this domain; its capabilities in pattern recognition, predictive modeling, and autonomous decision-making are redefining how militaries leverage geospatial intelligence (GEOINT).

This blog explores how AI and geospatial data are being used for autonomous defense systems. It examines the core technologies involved, the types of autonomous platforms in use, and the practical applications on the ground. It also addresses the ethical, technical, and strategic challenges that must be navigated as this powerful integration reshapes military operations worldwide.

Geospatial Data for Autonomous Defense Systems

Geospatial AI (GeoAI) Foundations

Geospatial Artificial Intelligence, or GeoAI, refers to the application of AI techniques to spatial data to extract insights, recognize patterns, and support decision-making in geographic contexts. In defense systems, GeoAI functions as a critical enabler of automation and situational awareness. It allows machines to interpret complex geospatial datasets and derive actionable intelligence at a scale and speed that human analysts cannot match.

Object Detection on Satellite Imagery

AI models, particularly convolutional neural networks (CNNs), are trained to detect and classify military infrastructure, vehicles, troop formations, and changes in terrain. These models are being increasingly enhanced by transformer-based architectures that offer better context-awareness and scalability across various image types and resolutions.
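Satellite scenes are usually far larger than the input size a CNN or transformer detector accepts, so a common preprocessing step is to cut each scene into overlapping tiles and run the detector on each one. The sketch below covers only that tiling step. The function name `tile_windows`, the 512-pixel tile size, and the 64-pixel overlap are illustrative assumptions, not values from any particular system.

```python
from typing import Iterator, List, Tuple

Window = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixel coordinates


def tile_windows(width: int, height: int,
                 tile: int = 512, overlap: int = 64) -> Iterator[Window]:
    """Yield overlapping windows that together cover a width x height scene."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be non-negative and smaller than tile")
    stride = tile - overlap

    def starts(size: int) -> List[int]:
        # A scene smaller than one tile needs a single window.
        if size <= tile:
            return [0]
        offsets = list(range(0, size - tile, stride))
        offsets.append(size - tile)  # last window sits flush with the edge
        return offsets

    for y0 in starts(height):
        for x0 in starts(width):
            yield (x0, y0, min(x0 + tile, width), min(y0 + tile, height))


if __name__ == "__main__":
    for window in tile_windows(1000, 600):
        print(window)
```

The overlap means an object cut by one tile boundary is likely to appear whole in a neighboring tile; detections from overlapping tiles would then need to be merged in a later step that is not shown here.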

Terrain Mapping for Autonomous Navigation

Defense platforms operating in unstructured environments, such as mountainous regions, forests, or deserts, rely on geospatial data to create digital terrain models (DTMs) and identify navigable paths. AI augments this process by interpreting elevation data, estimating traversability, and dynamically rerouting based on detected obstacles or threats.
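As a rough illustration of how elevation data can be turned into a traversability estimate, the sketch below computes slope from a small elevation grid and marks cells under a slope limit as passable. It is a deliberately simple stand-in for the learned models described above. The names `slope_degrees` and `traversable_mask`, the 25-degree limit, and the assumption of a regular grid with at least two rows and two columns are all illustrative.

```python
import math
from typing import List

Grid = List[List[float]]


def slope_degrees(dem: Grid, cell_size_m: float) -> Grid:
    """Slope in degrees per cell, from finite differences on a regular grid."""
    rows, cols = len(dem), len(dem[0])
    slopes = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Central differences in the interior, one-sided at the borders.
            r0, r1 = max(r - 1, 0), min(r + 1, rows - 1)
            c0, c1 = max(c - 1, 0), min(c + 1, cols - 1)
            dz_dy = (dem[r1][c] - dem[r0][c]) / ((r1 - r0) * cell_size_m)
            dz_dx = (dem[r][c1] - dem[r][c0]) / ((c1 - c0) * cell_size_m)
            slopes[r][c] = math.degrees(math.atan(math.hypot(dz_dx, dz_dy)))
    return slopes


def traversable_mask(dem: Grid, cell_size_m: float,
                     max_slope_deg: float = 25.0) -> List[List[bool]]:
    """True where the local slope does not exceed the (assumed) vehicle limit."""
    return [[s <= max_slope_deg for s in row]
            for row in slope_degrees(dem, cell_size_m)]


if __name__ == "__main__":
    ramp = [[0.0, 10.0, 20.0] for _ in range(3)]  # rises 10 m per 10 m cell
    print(slope_degrees(ramp, cell_size_m=10.0)[1][1])
    print(traversable_mask(ramp, cell_size_m=10.0))
```

A fielded system would combine slope with other factors such as soil type, vegetation, and detected obstacles; slope alone is used here only to keep the example short.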

Change Detection and Monitoring

AI models can analyze multi-temporal satellite or aerial imagery to identify new constructions, troop movements, or altered landscapes. These changes can be automatically flagged and prioritized based on strategic relevance, enabling faster intelligence cycles and proactive decision-making.
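The flag-and-prioritize idea can be sketched with plain pixel differencing on two co-registered images of the same area. This is a minimal baseline, not the learned change detectors the paragraph refers to. The function names, the intensity threshold of 30, and the per-area `priority` weights are assumptions made for illustration.

```python
from typing import Dict, List, Optional, Tuple

Grid = List[List[float]]


def change_mask(before: Grid, after: Grid, threshold: float) -> List[List[bool]]:
    """Mark pixels whose intensity changed by more than the threshold."""
    return [[abs(a - b) > threshold for b, a in zip(row_b, row_a)]
            for row_b, row_a in zip(before, after)]


def changed_fraction(mask: List[List[bool]]) -> float:
    """Share of pixels flagged as changed."""
    cells = [flag for row in mask for flag in row]
    return sum(cells) / len(cells)


def rank_areas(pairs: Dict[str, Tuple[Grid, Grid]],
               threshold: float = 30.0,
               priority: Optional[Dict[str, float]] = None
               ) -> List[Tuple[str, float]]:
    """Score each area by changed fraction times an analyst-supplied weight."""
    priority = priority or {}
    scores = {
        name: changed_fraction(change_mask(b, a, threshold)) * priority.get(name, 1.0)
        for name, (b, a) in pairs.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    base = [[100.0, 100.0], [100.0, 100.0]]
    pairs = {
        "port": (base, [[100.0, 180.0], [100.0, 100.0]]),
        "road": (base, [[180.0, 180.0], [100.0, 100.0]]),
    }
    print(rank_areas(pairs, priority={"port": 3.0}))
```

Raw differencing is sensitive to lighting, season, and misregistration, which is one reason learned models are preferred in practice.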

Enabling Technologies

Several enabling technologies support the integration of AI and geospatial intelligence. At the foundation are deep learning architectures, including CNNs for image data and transformers for both spatial and textual fusion. These models can handle high-dimensional data and identify spatial relationships that traditional algorithms often overlook.

Edge computing is particularly important for autonomous systems deployed in the field. By processing data locally on board drones or vehicles, edge AI reduces latency, supports mission continuity in GPS- or comms-denied environments, and allows real-time response without a constant uplink to a centralized server. As 6G and low-latency mesh networks mature, edge devices are also expected to share data directly with one another, enabling collaborative autonomy across fleets of platforms.

Digital Twins and Simulation Environments

These virtual replicas of real-world terrains and battlefield scenarios are powered by geospatial data and AI algorithms. They allow defense planners to simulate mission outcomes, test autonomous behavior in dynamic environments, and optimize tactics with reduced risk and cost. Importantly, they also serve as high-quality training grounds for reinforcement learning models used in mission planning and maneuvering.

Together, these technologies form a layered and adaptive tech stack that enables autonomous systems not only to perceive and navigate the physical world but also to interpret, learn, and act intelligently within it. This foundational layer is what transforms geospatial data from a static resource into a living operational capability.

Autonomous Defense Systems Using Geospatial Data

Categories of Autonomous Platforms

Autonomous defense platforms are no longer limited to experimental prototypes; they are increasingly integrated into operational workflows across ground, aerial, and maritime domains. These platforms rely on AI and geospatial data to operate independently or semi-independently in high-risk or data-dense environments.

Unmanned Ground Vehicles (UGVs) operate in complex terrain, executing logistics support, surveillance, or combat missions. By leveraging terrain models, obstacle maps, and AI-based navigation, UGVs can traverse unstructured environments, identify threats, and make route decisions with minimal human input.

Unmanned Aerial Vehicles (UAVs) are widely used for reconnaissance, target acquisition, and precision strikes. Equipped with real-time image processing capabilities, UAVs can autonomously identify and track objects of interest, adjust flight paths based on dynamic geospatial inputs, and share insights with command centers or other drones in a swarm configuration.

Unmanned Surface and Underwater Vehicles (USVs and UUVs) bring similar capabilities to naval operations. These systems use sonar-based spatial data, ocean current models, and underwater mapping AI to patrol coastal zones, detect mines, or deliver payloads. They play an essential role both in conventional deterrence and in countering hybrid maritime threats.

Hybrid systems are now emerging that integrate ground, aerial, and maritime elements into cohesive autonomous operations. These multi-domain systems share geospatial intelligence and use collaborative AI to coordinate actions, extending situational awareness and increasing mission effectiveness across varied terrains.

In each of these categories, geospatial AI enables real-time adaptation to environmental and tactical variables. Whether it is a UAV adjusting altitude to avoid radar detection or a UGV rerouting due to terrain instability, the ability to perceive and interpret spatial data autonomously is a defining capability of modern defense systems.

The Autonomy Stack for Integrating AI with Geospatial Data

The autonomy of these platforms is made possible by a layered stack of AI capabilities, each responsible for a critical aspect of perception and decision-making.

- Sensor fusion integrates data from multiple sources (visual, infrared, LiDAR, radar, and GPS) to form a coherent view of the operating environment. This redundancy increases resilience and reliability, particularly in degraded or adversarial conditions.

- Perception modules use computer vision and deep learning to detect, classify, and track objects. These systems can distinguish between friend and foe, identify terrain types, and detect anomalies in real time.

- Localization and mapping involve technologies like SLAM (Simultaneous Localization and Mapping), which allow platforms to construct or update maps while keeping track of their position within them. AI enhances SLAM by improving accuracy in GPS-denied or visually ambiguous environments.

- Path planning algorithms determine optimal routes for reaching a destination while avoiding obstacles, threats, and difficult terrain. These planners incorporate real-time inputs and predictive modeling to adjust routes dynamically as conditions change.

- Mission execution and control modules translate strategic objectives into tactical actions. These include payload deployment, surveillance behavior, or coordination with other units. AI ensures that these actions are context-aware, adaptive, and aligned with broader operational goals.

- Human-in-the-loop or human-out-of-the-loop paradigms define the level of autonomy. In critical operations, human oversight remains essential for ethical, strategic, or legal reasons. Increasingly, however, defense systems are transitioning to “human-on-the-loop” roles, where operators monitor and intervene only when necessary, relying on AI to handle routine or time-sensitive decisions.
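To make the sensor fusion layer in the list above more concrete, the sketch below combines several independent estimates of the same quantity by inverse-variance weighting, so that less noisy sensors count for more. This is one textbook technique, not necessarily what any fielded system uses. The function name `fuse_estimates` and the example variances are assumptions.

```python
from typing import List, Tuple


def fuse_estimates(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs from independent sensors.

    Each estimate is weighted by 1 / variance. The fused variance is
    never larger than the smallest input variance.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    if any(variance <= 0 for _, variance in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


if __name__ == "__main__":
    # Hypothetical range-to-obstacle readings in metres: (value, variance).
    lidar = (100.0, 1.0)
    radar = (110.0, 9.0)
    print(fuse_estimates([lidar, radar]))
```

If one sensor degrades, its variance estimate rises and its influence on the fused value falls, which is the resilience property the sensor fusion item describes.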

This autonomy stack is not a rigid hierarchy but a flexible framework that can be customized based on the mission type, platform capabilities, and operational environment. It reflects a shift from remote-controlled systems to intelligent agents that perceive, decide, and act in real time, often faster and more accurately than humans.
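The path planning layer of this stack can be illustrated with A* search over a grid of terrain costs. This is a minimal sketch under stated assumptions: movement is 4-connected, each cell holds the cost of entering it (at least 1), and `None` marks an impassable cell. The function name `plan_path` and the grid encoding are illustrative.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]
CostGrid = List[List[Optional[float]]]


def plan_path(cost: CostGrid, start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Return a lowest-cost path from start to goal, or None if unreachable."""
    rows, cols = len(cost), len(cost[0])

    def heuristic(cell: Cell) -> int:
        # Manhattan distance never overestimates when every cost is >= 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    best: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    frontier = [(heuristic(start), 0.0, start)]
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        if g > best[cell]:
            continue  # stale queue entry, a cheaper route was found already
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and cost[nr][nc] is not None:
                new_g = g + cost[nr][nc]
                if new_g < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_g
                    parent[(nr, nc)] = cell
                    heapq.heappush(
                        frontier, (new_g + heuristic((nr, nc)), new_g, (nr, nc)))
    return None


if __name__ == "__main__":
    grid = [
        [1, 1, 1],
        [1, None, 1],  # None: impassable obstacle
        [1, 9, 1],     # 9: difficult terrain
    ]
    print(plan_path(grid, (0, 0), (2, 2)))
```

Dynamic rerouting, in this simplified picture, amounts to updating the cost grid when a new obstacle or threat is detected and running the planner again from the current position.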

Challenges Integrating AI with Geospatial Data

Despite the rapid progress and compelling use cases, integrating AI with geospatial data in autonomous defense systems introduces a set of complex challenges. These span technical limitations, operational constraints, and broader ethical and legal considerations that must be addressed for successful and responsible deployment.

Technical Challenges

Real-time processing of high-dimensional geospatial data

Satellite imagery, LiDAR point clouds, and sensor telemetry are massive in volume and demand significant computational resources. Processing this data at the edge, within the autonomous platform itself, is particularly difficult given the size, weight, and power (SWaP) limits of onboard hardware.

Precision and robustness in unstructured environments

Unlike urban or mapped areas, battlefield environments often include unpredictable terrain, dynamic obstacles, and varying weather conditions. AI models trained in controlled conditions can underperform or fail altogether when exposed to real-world complexity, leading to mission risks or operational failures.

Sensor reliability and spoofing risks

GPS jamming, signal interference, and adversarial attacks targeting sensor inputs can degrade or manipulate the data on which AI models rely. Without effective countermeasures or redundancy mechanisms, this makes autonomous platforms vulnerable to misinformation or operational paralysis.
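One simple redundancy mechanism of the kind mentioned above is a plausibility check: compare each incoming GPS fix with the position predicted from the platform's own motion (dead reckoning) and reject fixes that jump too far. The sketch below assumes positions in a local metric frame (east, north), a known velocity, and a 15 m tolerance. All names and values are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (east_m, north_m) in a local metric frame


def dead_reckon(last_pos: Point, velocity: Point, dt_s: float) -> Point:
    """Predict the current position from the last trusted one and velocity."""
    return (last_pos[0] + velocity[0] * dt_s,
            last_pos[1] + velocity[1] * dt_s)


def gps_fix_is_plausible(last_pos: Point, velocity: Point, dt_s: float,
                         gps_pos: Point, max_error_m: float = 15.0) -> bool:
    """Accept a GPS fix only if it lies near the dead-reckoned prediction."""
    predicted = dead_reckon(last_pos, velocity, dt_s)
    return math.dist(predicted, gps_pos) <= max_error_m


if __name__ == "__main__":
    last, vel = (0.0, 0.0), (10.0, 0.0)  # moving east at 10 m/s
    print(gps_fix_is_plausible(last, vel, 1.0, (11.0, 1.0)))   # small deviation
    print(gps_fix_is_plausible(last, vel, 1.0, (200.0, 0.0)))  # sudden jump
```

A fixed threshold like this would not catch a slow, gradual spoofing drift; it only illustrates the idea of cross-checking one sensor against another.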

Strategic and Operational Constraints

Interoperability remains a persistent barrier

In multinational coalitions or joint force operations, platforms often come from different manufacturers and adhere to different data formats, communication protocols, and autonomy levels. This lack of standardization hinders seamless collaboration and increases the risk of miscoordination.

Bandwidth and edge limitations

While edge AI enables local decision-making, many autonomous systems still rely on intermittent connectivity with command centers. In the communication-degraded or GPS-denied environments common in contested zones, autonomous decision-making becomes more difficult and error-prone if the system is not sufficiently self-reliant.

Adversarial AI and cybersecurity threats

AI models can be manipulated through poisoned training data, adversarial inputs, or system-level hacks. In a military context, this not only compromises system performance but can also lead to catastrophic outcomes if exploited by an adversary during active missions.

Ethical and Legal Considerations

Meaningful human control

The question of when and how humans should intervene in decisions made by autonomous systems, especially lethal ones, remains unresolved in both military doctrine and international law. Ensuring accountability in cases of misidentification or unintended harm is a major ethical hurdle.

Cross-border data privacy

Satellite imagery and spatial data often include civilian infrastructure, raising questions about how such data is collected, stored, and used. Moreover, military applications of geospatial data sourced from commercial providers may violate privacy norms or sovereign boundaries, especially in coalition operations.

Bias in AI models

If training data is geographically skewed, culturally biased, or lacks representation of adversarial tactics, the resulting models may exhibit poor generalization and flawed decision-making. This is especially problematic in diverse, rapidly changing combat environments where assumptions made in training do not always hold.
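A first check for geographic skew is simply to measure how training samples are distributed across the environments a model is expected to operate in. The sketch below assumes each sample carries a region or terrain label. The function names, the label values, and the 10% minimum share are illustrative assumptions.

```python
from collections import Counter
from typing import Dict, Iterable, List


def region_shares(labels: Iterable[str], expected: Iterable[str]) -> Dict[str, float]:
    """Fraction of samples per region, including expected regions with none."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no samples provided")
    regions = sorted(set(expected) | set(counts))
    return {region: counts.get(region, 0) / total for region in regions}


def underrepresented(labels: Iterable[str], expected: Iterable[str],
                     min_share: float = 0.10) -> List[str]:
    """Regions whose share of the training set falls below min_share."""
    shares = region_shares(labels, expected)
    return [region for region, share in shares.items() if share < min_share]


if __name__ == "__main__":
    labels = ["desert"] * 80 + ["forest"] * 15 + ["urban"] * 5
    expected = ["desert", "forest", "urban", "arctic"]
    print(region_shares(labels, expected))
    print(underrepresented(labels, expected))
```

Counting samples does not measure model bias directly, but a region that is missing or rare in the training set is a natural place to look first for poor generalization.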

Conclusion

The fusion of artificial intelligence and geospatial data is reshaping the landscape of modern defense systems. What was once the domain of passive intelligence gathering is now evolving into a dynamic ecosystem where machines perceive, interpret, and act on spatial data with minimal human intervention. This transformation is not just technological; it is strategic. In contested environments where speed, accuracy, and adaptability define success, AI-powered geospatial systems provide a decisive edge.

This convergence reflects a growing recognition that the next generation of defense advantage will come not only from superior weaponry but from superior information processing and decision-making systems.

To harness this potential, defense stakeholders must invest not just in algorithms and platforms but in the ecosystems that support them: data infrastructure, ethical frameworks, international collaboration, and human-machine integration protocols. Only then can we ensure that the integration of AI and geospatial data advances not only operational effectiveness but also security, accountability, and global stability.

This is not a future scenario. It is a present imperative. And its implications will shape the trajectory of autonomous defense for decades to come.

From building high-quality labeled training datasets for autonomous navigation to deploying scalable human-in-the-loop systems for government and defense, DDD delivers the infrastructure and intelligence you need to operationalize innovation.

Contact us to learn how we can help accelerate your AI-geospatial programs with precision, scalability, and purpose.

Frequently Asked Questions (FAQs)

1. How is AI used in space-based defense systems beyond satellite image analysis?

AI is increasingly applied in space situational awareness, collision prediction, and autonomous satellite navigation. For example, AI enables satellites to detect and respond to anomalies, optimize orbital adjustments, and coordinate in satellite constellations for resilient communications and Earth observation. In defense, this also includes real-time threat detection from anti-satellite (ASAT) weapons or adversarial satellite behavior.

2. Can commercial geospatial AI platforms be repurposed for defense applications?

Yes, many commercial GeoAI platforms offer foundational capabilities such as object recognition, land cover classification, and change detection. These can be adapted or extended for defense-specific needs, often with added layers of encryption, real-time analytics, and integration into secure military networks.

3. What is the role of synthetic geospatial data in training AI models for defense?

Synthetic geospatial data, including procedurally generated satellite imagery, 3D terrain models, and simulated sensor outputs, is used to augment limited or sensitive real-world data. It helps train AI models on edge cases, adversarial scenarios, or environments where real data is unavailable (e.g., contested zones, classified regions). This improves generalization and robustness while reducing dependence on expensive or classified datasets.
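As a toy example of procedurally generated terrain, the sketch below builds a small synthetic elevation grid by smoothing random noise with a 3x3 neighborhood average. Production pipelines use far richer generators and sensor simulators. The function name `synthetic_dem` and all parameter values are assumptions chosen for illustration.

```python
import random
from typing import List

Grid = List[List[float]]


def _local_mean(grid: Grid, r: int, c: int) -> float:
    """Mean of the 3x3 neighborhood around (r, c), clipped at the borders."""
    rows, cols = len(grid), len(grid[0])
    values = [grid[rr][cc]
              for rr in range(max(r - 1, 0), min(r + 2, rows))
              for cc in range(max(c - 1, 0), min(c + 2, cols))]
    return sum(values) / len(values)


def synthetic_dem(rows: int, cols: int, max_height_m: float = 500.0,
                  smooth_passes: int = 3, seed: int = 0) -> Grid:
    """Generate a reproducible elevation grid from smoothed random noise."""
    rng = random.Random(seed)  # a fixed seed makes the terrain repeatable
    dem = [[rng.uniform(0.0, max_height_m) for _ in range(cols)]
           for _ in range(rows)]
    for _ in range(smooth_passes):
        # Each pass averages neighbors, turning noise into rolling terrain.
        dem = [[_local_mean(dem, r, c) for c in range(cols)]
               for r in range(rows)]
    return dem


if __name__ == "__main__":
    terrain = synthetic_dem(4, 6, seed=42)
    for row in terrain:
        print(" ".join(f"{height:6.1f}" for height in row))
```

Varying the seed yields an unlimited supply of distinct terrains, which is the property that makes synthetic data useful for covering edge cases that real datasets lack.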

4. What is the difference between autonomous and automated systems in defense?

- Automated systems follow predefined rules or scripts (e.g., a missile following a programmed trajectory).

- Autonomous systems perceive their environment and make real-time decisions without predefined instructions (e.g., a drone that dynamically adjusts its route based on terrain and threats). Autonomy involves adaptive behavior, situational awareness, and in many cases, learning, which are powered by AI.