By Umang Dayal

June 10, 2025

Generative AI has rapidly evolved from a research novelty into a core technology shaping everything from search engines and image generation to code assistance and content creation.

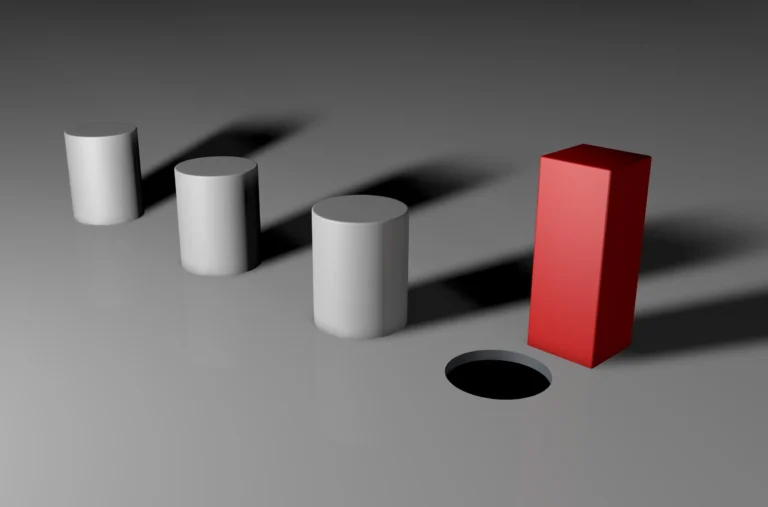

However, as generative models have grown in scale and sophistication, so have concerns about the fairness and equity of the outputs they produce. These models often reflect and amplify the biases present in their training data, which includes real-world artifacts laden with historical inequality, cultural stereotypes, and demographic imbalances. These issues aren’t simply technical bugs; they are manifestations of deeper structural problems embedded in how data is collected, labeled, and interpreted.

Why does this matter?

Biased AI systems can harm marginalized communities, reinforce societal stereotypes, and erode public trust in the technology. When these systems are deployed at scale in education, recruitment, healthcare, or legal settings, the consequences are no longer academic; they become deeply personal and potentially discriminatory. As AI systems become gatekeepers to knowledge, services, and opportunities, the imperative to address bias is not just a technical challenge but a social responsibility.

This blog explores how bias manifests in generative AI systems, why it matters at both technical and societal levels, and what methods can be used to detect, measure, and mitigate these biases. It also examines what organizations can do to mitigate bias in Gen AI and build more ethical and responsible AI models.

Understanding Bias in Generative AI

Bias in AI doesn’t begin at the point of model output; it’s present throughout the pipeline, from how data is sourced to how models are trained and used. In generative AI, this becomes even more complex because the systems are designed to produce original content, not just classify or predict based on fixed inputs. This creative capability, while powerful, also makes bias more subtle, harder to predict, and more impactful when scaled.

At its core, bias in AI refers to systematic deviations in outcomes that unfairly favor certain groups or perspectives over others. These biases are not random; they often reflect dominant social norms, overrepresented demographics, or culturally specific values encoded in the data. In generative models, this can manifest in various ways:

- Text generation: Language models trained on internet corpora often reflect gender, racial, and cultural stereotypes. For instance, prompts involving professions may default to gendered completions (“nurse” as female, “engineer” as male) or generate toxic language when prompted with identities from marginalized communities.
- Image generation: Visual models like Midjourney or AI image enhancer tools may overrepresent Western beauty standards or produce biased representations when prompted with racially or culturally specific inputs. For example, asking for images of a “CEO” may consistently return white males, while prompts like “criminal” may result in darker-skinned faces.
- Speech and audio: Generative voice models can struggle with non-native English accents, often introducing pronunciation errors or lowering transcription accuracy. This has implications for accessibility, inclusion, and product usability across diverse populations.

These examples all trace back to multiple, overlapping sources of bias:

- Training Data: Most generative models are trained on vast, publicly available datasets, including web text, books, forums, and images. These sources are inherently biased: they reflect real-world inequalities, societal stereotypes, and uneven representation.
- Model Architecture: The design of deep learning models can exacerbate bias, particularly when attention mechanisms or optimization objectives prioritize frequently occurring patterns over minority or outlier data.
- Reinforcement Learning from Human Feedback (RLHF): Many models use human ratings to fine-tune responses. While this improves output quality, it can also introduce human subjectivity and cultural bias, depending on who provides the feedback.
- Prompting and Deployment Contexts: The same model can behave very differently based on how it’s prompted and the environment in which it’s used. Deployment scenarios often surface latent biases that were not obvious in controlled settings.

Measuring Bias in Gen AI: Metrics and Evaluation

Before we can mitigate bias in generative AI, we must first understand how to detect and measure it. Unlike traditional machine learning tasks, where performance can be assessed using clear metrics like accuracy or recall, bias in generative systems is far more elusive. The outputs are often open-ended, probabilistic, and context-sensitive, making evaluation inherently more subjective and multi-dimensional.

The Challenge of Measuring Bias in Generative Models

Generative models produce varied outputs for the same prompt, depending on randomness, temperature settings, and internal sampling strategies. This variability means that a single biased output may not reveal the full extent of the problem, and an unbiased output doesn’t guarantee fairness across all use cases. Bias can emerge across a wide distribution of responses, often surfacing only when models are systematically audited with well-designed prompt sets.

Additionally, fairness is not a one-size-fits-all concept. Some communities may view certain representations as harmful, while others may not. This subjectivity introduces difficulty in deciding what constitutes “bias” and how to evaluate it consistently across languages, cultures, and domains.
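Because outputs vary from sample to sample, an audit usually estimates a rate over many generations rather than judging a single completion. The sketch below is only an illustration and assumes you have already sampled `n` completions for one prompt and counted how many (`flagged`) a reviewer or classifier marked as problematic. It reports a Wilson score interval for the underlying rate. The function name and the example counts are hypothetical.

```python
import math

def wilson_interval(flagged: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z=1.96 is roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = flagged / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    # Clamp to [0, 1] to absorb floating-point noise at the edges.
    return max(0.0, center - half), min(1.0, center + half)

# Hypothetical audit: 0 flagged completions out of 10 samples of one prompt.
low, high = wilson_interval(0, 10)
```

Even with zero flagged outputs in ten samples, the upper bound stays well above zero (about 0.28 here), which is why a handful of clean samples is weak evidence of fairness.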

Quantitative Metrics for Bias

Despite these challenges, researchers have developed several metrics to help quantify bias in generative systems:

- Stereotype Bias Benchmarks: Datasets like CrowS-Pairs and StereoSet measure stereotypical associations in model completions. These datasets present paired prompts (e.g., “The man worked as a…” vs. “The woman worked as a…”) and evaluate whether model outputs reinforce social stereotypes.
- Distributional Metrics: These track the frequency or proportion of different demographic groups in generated outputs. For example, one can prompt an image model to generate “doctors” and measure how often the outputs depict women or people of color.
- Embedding-Based Similarity/Distance: In this method, the semantic similarity between model outputs and biased or neutral representations is analyzed using vector space embeddings. This allows for a more nuanced comparison of output tendencies.
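A distributional metric can be as simple as counting. The following sketch assumes each generated output has already been assigned a group label (by human annotators or a classifier, which is itself a source of error) and compares the observed shares against a reference distribution chosen by the auditor. The function names, labels, and the 50/50 reference are illustrative assumptions, not a standard.

```python
from collections import Counter

def group_shares(labels):
    """Proportion of outputs assigned to each group label."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}

def max_share_gap(shares, reference):
    """Largest absolute difference between observed and reference shares."""
    return max(abs(shares.get(g, 0.0) - p) for g, p in reference.items())

# Hypothetical labels for 10 images generated from the prompt "doctor".
labels = ["man"] * 7 + ["woman"] * 3
shares = group_shares(labels)
gap = max_share_gap(shares, {"man": 0.5, "woman": 0.5})
```

Which reference distribution is appropriate (equal shares, population shares, or real-world occupational shares) is a judgment call that should be documented alongside the number.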

Qualitative and Mixed-Method Evaluations

Quantitative scores can highlight bias patterns, but they rarely tell the full story. Qualitative assessments are crucial to understanding the nature, tone, and context of bias. These include:

- Prompt-based Audits: Curated prompt sets are used to evaluate model behavior under stress tests or adversarial conditions. For instance, an audit might evaluate how a model completes open-ended prompts related to religion, gender, or nationality.
- Human-in-the-Loop Reviews: Panels of diverse reviewers evaluate the fairness or offensiveness of outputs. These reviews are essential for capturing nuance, such as subtle stereotyping or cultural misrepresentation that numerical metrics might miss.
- Audit Reports and Red Teaming: Many organizations now conduct internal audits and red teaming exercises to identify bias risks before release. These reports often document how the model behaves under a wide range of scenarios, including those relevant to marginalized groups.
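One common way to assemble a prompt set for such audits is to cross a small number of templates with the identity terms under study, so that every group sees exactly the same wording. The sketch below is a minimal illustration; the templates, group terms, and function name are assumptions chosen for the example.

```python
from itertools import product

def build_prompt_grid(templates, groups):
    """Fill every template with every group term and keep the provenance."""
    return [
        {"template": t, "group": g, "prompt": t.format(group=g)}
        for t, g in product(templates, groups)
    ]

# Hypothetical templates; "{group}" marks where the identity term goes.
templates = ["The {group} worked as a", "People say the {group} is"]
groups = ["man", "woman"]
grid = build_prompt_grid(templates, groups)
```

Keeping the template and group alongside each prompt makes it possible to compare completions for the same template across groups later, whether by human reviewers or by automated scoring.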

Methods to Mitigate Bias in Gen AI

Identifying bias in generative AI is only the beginning. The more difficult challenge lies in developing effective strategies to mitigate it, without compromising the model’s utility, creativity, or performance. Mitigation must occur across different levels of the AI pipeline: the data that trains the model, the design of the model itself, and the way outputs are handled at runtime. Each layer plays a role in either reinforcing or correcting underlying biases.

Data-Level Interventions

Since most generative models are trained on large-scale web data, much of the bias stems from that initial foundation. Interventions at the data level aim to reduce the skewed representations that get encoded into model weights.

- Curated and Filtered Datasets: Removing or rebalancing harmful, toxic, or overly dominant representations from training corpora is a foundational strategy. For example, filtering out forums or websites known for extremist content or explicit bias can reduce harmful outputs downstream.
- Synthetic Counterfactual Data: This involves generating new training examples that present alternative realities to stereotypical associations. For example, including examples where women are CEOs and men are nurses helps models learn a broader distribution of real-world roles.
- Balanced Sampling: Ensuring that data includes diverse demographic representations, across gender, ethnicity, region, and culture, can help reduce overfitting to dominant patterns and improve inclusivity in outputs.
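As a rough illustration of counterfactual data, the sketch below swaps a handful of gendered terms in a sentence to produce a mirrored training example. It is deliberately simplistic: the word list is a small assumption for the example, and it omits ambiguous pairs such as "his/her", which need grammatical context to swap correctly. Real pipelines typically rely on curated lexicons and human review.

```python
import re

# Small illustrative swap list; not a complete or authoritative lexicon.
SWAPS = {
    "he": "she", "she": "he",
    "man": "woman", "woman": "man",
    "men": "women", "women": "men",
}
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(w) for w in SWAPS) + r")\b", re.IGNORECASE
)

def counterfactual(text: str) -> str:
    """Return text with each listed gendered term replaced by its counterpart."""
    def swap(match):
        word = match.group(0)
        replacement = SWAPS[word.lower()]
        # Preserve a leading capital letter, e.g. at the start of a sentence.
        return replacement.capitalize() if word[0].isupper() else replacement
    return _PATTERN.sub(swap, text)

augmented = counterfactual("She is a nurse and he is a CEO.")
```

Adding both the original and the swapped sentence to a training set is one way to weaken the association between a role and a gender.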

Model-Level Mitigations

At the level of model training and fine-tuning, several techniques aim to directly reduce bias in how the model learns associations from its data.

- Debiasing Fine-Tuning: Parameter-efficient methods like LoRA (Low-Rank Adaptation), paired with fairness-aware objectives, can adapt a model by training a small set of added weights rather than retraining it in full. Research initiatives like AIM-Fair have explored fine-tuning generative models using adversarial objectives to suppress bias while preserving fluency.
- Fairness Constraints in Loss Functions: During training, it’s possible to include regularization terms that penalize biased behaviors or reinforce fairness metrics. This technique attempts to align the model’s optimization process with fairness goals.
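The idea of a fairness regularization term can be shown with a toy calculation. The sketch below adds a penalty proportional to the gap between per-group average scores to an ordinary task loss. It is written in plain Python for readability and is an assumption-laden illustration: the choice of penalty (max minus min of group means), the weight `lam`, and all names are hypothetical, and a real training loop would compute this inside a differentiable framework.

```python
def fairness_penalized_loss(task_loss, scores_by_group, lam=1.0):
    """Task loss plus lam times the gap between the highest and lowest group mean."""
    group_means = {
        group: sum(scores) / len(scores)
        for group, scores in scores_by_group.items()
    }
    gap = max(group_means.values()) - min(group_means.values())
    return task_loss + lam * gap

# Hypothetical per-example model scores, split by the group named in the prompt.
scores = {"group_a": [0.2, 0.4], "group_b": [0.5, 0.7]}
loss = fairness_penalized_loss(task_loss=1.0, scores_by_group=scores, lam=2.0)
```

Raising `lam` pushes the optimizer harder toward equal group averages at the expense of the task loss, which is one concrete form of the trade-offs discussed below.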

Post-Processing Techniques

In production environments, not all biases can be fixed at the training level. Post-processing allows real-time interventions when models are already deployed.

- Output Filtering: Many companies now use moderation filters that block or rephrase potentially harmful completions. These are rule-based or machine-learned layers that sit between the model and the user.
- Prompt Rewriting and Content Steering: Using controlled prompting techniques, like instructing the model to respond “fairly” or “inclusively,” can subtly nudge outputs away from biased language. Some prompt engineering approaches also mask identity-sensitive terms to reduce stereotyping.
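A rule-based output filter of the kind described above can be sketched as a thin wrapper around whatever function produces the model’s text. Everything here is a stand-in: `generate` represents any callable that maps a prompt to a completion, the blocked terms are placeholders, and production filters are usually machine-learned classifiers rather than word lists.

```python
import re

def make_output_filter(blocked_terms, fallback="[response withheld by filter]"):
    """Build a wrapper that replaces completions containing a blocked term."""
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(t) for t in blocked_terms) + r")\b",
        re.IGNORECASE,
    )

    def filtered_generate(generate, prompt):
        output = generate(prompt)
        return fallback if pattern.search(output) else output

    return filtered_generate

# Stand-in for a real model call; returns canned text for the demo.
def fake_model(prompt):
    return "some placeholder_slur text" if "risky" in prompt else "a harmless reply"

safe_generate = make_output_filter(["placeholder_slur"])
blocked = safe_generate(fake_model, "a risky prompt")
allowed = safe_generate(fake_model, "a normal prompt")
```

Because the filter sits outside the model, it can be updated without retraining, but it also only catches what its rules anticipate.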

Trade-offs and Tensions

Every bias mitigation strategy introduces trade-offs. There is a constant balancing act between fairness, performance, interpretability, and user satisfaction:

- Fairness vs. Accuracy: Reducing bias might sometimes reduce performance on traditional benchmarks if those benchmarks themselves are skewed.
- Bias Mitigation vs. Free Expression: Over-filtering may stifle nuance, creativity, or legitimate discussion, especially around sensitive topics.
- Transparency vs. Complexity: Advanced debiasing methods may improve fairness but at the cost of making models more opaque or harder to interpret.

Can We Ever Achieve Truly Unbiased Gen AI?

The pursuit of fairness in generative AI often raises a deeper question: What does it actually mean for a model to be “unbiased”? While many technical solutions aim to reduce or control bias, the concept itself is far from absolute. Bias is not just a computational issue; it’s a philosophical and cultural one, embedded in how we define fairness, who sets those definitions, and what trade-offs we’re willing to accept.

Bias as a Reflection, Not a Flaw

One of the most challenging ideas for AI practitioners is that bias is not just a flaw of the model; it’s often a reflection of the world. Generative AI systems trained on real-world data will inevitably absorb the prejudices, hierarchies, and inequalities embedded in that data. In this sense, removing all bias could mean sanitizing the model to the point of artificiality, stripping it of its ability to reflect the world as it is, in all its complexity.

This presents a dilemma: Should models mirror reality, even when that reality is unjust? Or should they present an idealized version of the world that promotes fairness but may distort lived experiences? There is no universally correct answer.

Whose Fairness Are We Modeling?

Another philosophical limit lies in the question of perspective. Fairness is culturally contingent. What one society views as equitable, another may see as biased or exclusionary. There are deep disagreements, across political, regional, and ideological lines, about how race, gender, religion, and identity should be represented in public discourse. Designing a model that satisfies all these competing expectations is not only difficult, but it may also be fundamentally impossible.

This is why bias mitigation must move beyond technical fixes and engage with social science, ethics, and community input. It’s not enough for developers to optimize for a single fairness metric. The model’s design must reflect a process of dialogue, diversity, and continuous reevaluation.

Accepting Imperfection, Pursuing Accountability

Perhaps the most pragmatic perspective is to accept that a completely unbiased model is unattainable. But that does not mean the effort is futile. The goal is not perfection; it’s progress. Even if some degree of bias is unavoidable, models can be made more accountable, transparent, and aligned with ethical values through:

- Clear documentation of data and training decisions
- Regular bias audits and red teaming
- Engagement with affected communities
- Transparent disclosure of model limitations

In this light, fairness becomes a moving target, one that evolves as society changes and as AI systems are deployed in new contexts. The challenge is not to “solve” bias once and for all, but to embed a continuous process of reflection, correction, and learning into the development lifecycle.

How Organizations Can Overcome Bias in Gen AI

Bias in generative AI is not just a technical issue; it’s an organizational responsibility. While individual developers and researchers play a crucial role, systemic change requires broader institutional commitment. Companies, research labs, and public sector organizations that deploy or develop generative models must implement operational strategies that go beyond compliance and move toward genuine accountability.

Building Diverse, Cross-Functional Teams

Bias often goes unnoticed when teams are homogeneous. A narrow set of perspectives in model development can result in blind spots: missed assumptions, overlooked harm vectors, or unchecked norms. Building diverse teams across gender, race, geography, and discipline isn’t just a moral imperative; it enhances the capacity to detect and mitigate bias at earlier stages.

Crucially, diversity must extend beyond demographics to include disciplinary diversity. Ethical AI teams should include social scientists, linguists, cultural scholars, and legal experts alongside data scientists and engineers.

Instituting Internal Model Audits

Just as models are tested for performance and security, they must also be audited for bias. Internal model audits should involve:

- Prompt-based stress testing
- Evaluating outputs for specific use cases (e.g., healthcare, hiring, criminal justice)
- Measuring disparities in responses across demographic prompts

Audits must be recurring, not one-off events, and involve both automated tools and human reviews.
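Measuring disparities across demographic prompts can start with a simple per-group rate. The sketch below assumes each audited response has been tagged with the group named in its prompt and a boolean indicating whether a reviewer or automated tool flagged it. The data, group names, and function names are hypothetical.

```python
def flag_rates(results):
    """Share of flagged responses per group, from (group, flagged) pairs."""
    totals, hits = {}, {}
    for group, flagged in results:
        totals[group] = totals.get(group, 0) + 1
        hits[group] = hits.get(group, 0) + int(flagged)
    return {group: hits[group] / totals[group] for group in totals}

def rate_gap(rates):
    """Difference between the highest and lowest per-group flag rate."""
    return max(rates.values()) - min(rates.values())

# Hypothetical audit log: four responses per group.
results = [
    ("group_a", False), ("group_a", True), ("group_a", False), ("group_a", False),
    ("group_b", True), ("group_b", True), ("group_b", False), ("group_b", False),
]
rates = flag_rates(results)
gap = rate_gap(rates)
```

Tracking this gap from one audit to the next gives a recurring, comparable signal, while the human reviews explain what is behind any change.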

Creating Feedback Loops with Users and Communities

Bias often manifests in real-world deployment contexts that can’t be fully simulated during training. That’s why organizations must establish clear, accessible channels for users and impacted communities to flag problematic behavior in model outputs. Effective feedback mechanisms should:

- Be transparent about how reports are handled
- Offer response timelines
- Feed into model updates or policy adjustments

Community-driven auditing, where marginalized or affected groups test models for fairness, is an emerging practice that makes the development process more democratic and grounded in lived experience.

Open-Sourcing Fairness Research and Tools

As models grow in scale and impact, the knowledge surrounding their fairness should not be proprietary. Open-sourcing evaluation datasets, fairness metrics, mitigation techniques, and audit methodologies helps the broader ecosystem improve and allows for independent scrutiny. Sharing findings about what works and what doesn’t also reduces duplication of effort and accelerates progress.

Implementing Explainable AI (XAI) Practices

Explainability is central to accountability. Tools like SHAP (SHapley Additive exPlanations), LIME (Local Interpretable Model-agnostic Explanations), and emerging LLM-specific explainability methods help clarify why a model generated a particular output. This is critical for identifying the roots of bias and for enabling stakeholders, including users, regulators, and affected individuals, to understand and challenge model behavior.

Explainable systems are especially important in high-stakes domains, such as healthcare, finance, or legal tech, where biased outputs can have real-world consequences.

How DDD Can Help

At Digital Divide Data (DDD), we play a critical role in building more equitable and representative AI systems by combining high-quality human-in-the-loop services with a mission-driven workforce. Tackling bias in generative AI begins with diverse, accurately labeled, and contextually rich data.

Culturally Diverse and Representative Data Annotation

DDD’s global annotation teams span multiple countries, cultures, and languages. This allows for the creation of datasets that are sensitive to regional norms, inclusive of minority groups, and representative of global demographics, helping prevent overrepresentation of Western-centric perspectives in training data.

Fairness-Focused Human Feedback (RLHF)

When fine-tuning generative models using reinforcement learning from human feedback, DDD ensures that annotators are trained to spot not just factual inaccuracies, but also subtle forms of social, gender, or cultural bias. This feedback helps developers align models with fairness objectives at scale.

Contextual Sensitivity in Annotation Guidelines

DDD works closely with clients to co-develop task guidelines that account for social and cultural context. This ensures that annotators aren’t applying one-size-fits-all rules, but are instead making informed decisions based on nuanced cultural knowledge.

Rapid Feedback Loops for Model Iteration

DDD enables fast-turnaround human-in-the-loop pipelines, allowing AI teams to test mitigation strategies, gather feedback on bias reduction efforts, and iterate more rapidly on model updates.

By integrating human-in-the-loop perspectives into the data pipeline, DDD helps AI developers build systems that are more inclusive, transparent, and trusted.

Conclusion

Bias in generative AI is neither new nor easily solvable, but it is manageable. As these systems grow more powerful and pervasive, addressing their embedded biases is no longer optional; it’s a prerequisite for responsible deployment.

To make generative AI fairer, every part of the ecosystem must engage. Data curators must balance representation with realism. Model builders must prioritize inclusivity without sacrificing integrity. Organizations must embed fairness into governance and accountability frameworks. Regulators, researchers, and communities must work together to set norms and hold systems to ethical standards.

The path forward is not about creating perfect models. It’s about building transparent, accountable systems that evolve with feedback, reflect societal shifts, and above all, do less harm. Fairness in AI is a continuous pursuit, and the more openly we engage with its challenges, the closer we get to meaningful solutions.