D3Scenes (D3S) 2D & 3D Annotations for Open-Source Driving Datasets

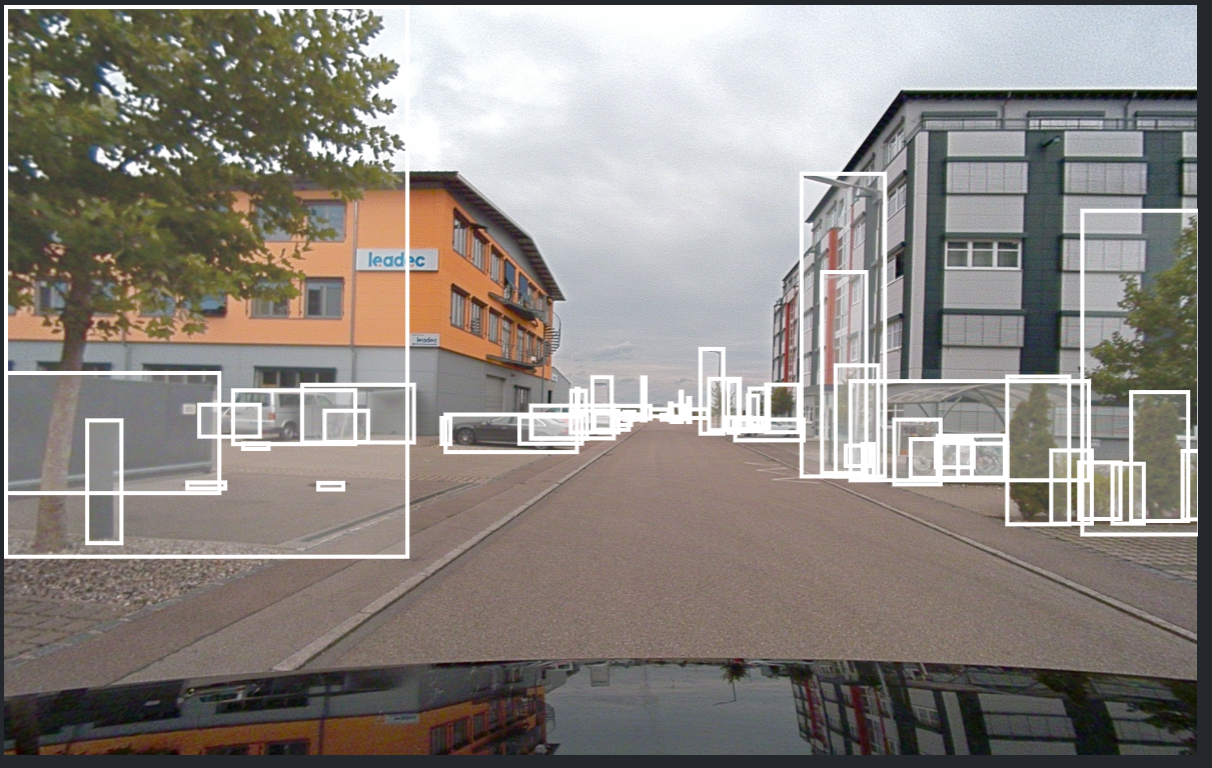

Accelerate your computer vision pipeline with benchmark-quality data designed for model-readiness. D3Scenes delivers precision, consistency, and contextual intelligence at scale.

The A2D2 dataset (Reference Links: ReadMe, License) was collected by Audi AG in Germany, in and around Ingolstadt, Munich, and surrounding areas, where Audi conducts autonomous driving research and testing. The driving environment includes urban, suburban, rural, and highway scenes.

The Argoverse dataset (Reference Links: Terms of Use, Privacy Policy) was collected in six U.S. cities with complex, distinctive driving environments: Miami, Austin, Washington, DC, Pittsburgh, Palo Alto, and Detroit.

License: D3S is available for non-commercial use under CC BY-NC-SA 4.0 (Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International).

Build Smarter AI Systems with DDD’s D3Scenes

D3S datasets combine pixel-perfect 2D and LiDAR-accurate 3D annotations with contextual intelligence, identifying not just what an object is, but who it is and why it matters.

2D Annotations:

- Bounding Box
- Image Segmentation

3D Annotations:

- LiDAR Bounding Box

Object Attributes:

- Pedestrians (VRUs)
- Vehicles
- Traffic Signs

Annotation Volume

- 1,074 A2D2 (2D) files annotated with segmentation + bounding boxes (primarily suburban).
- 1,199 Argoverse (2D) files annotated with segmentation + bounding boxes (busy urban, highways, suburban).
- 790 Argoverse (3D) files annotated with LiDAR 3D bounding boxes.
- 95% accuracy across 2D boxes, semantic segmentation, and object attributes (validated through DDD’s multi-stage QA).

Object Type Distribution

| Dataset | Annotation Type | Cars (%) | Vegetation (%) | Other Static Objects (%) | Sidewalk (%) |
|---|---|---|---|---|---|
| A2D2 | Segmentation | 42.4 | 17.6 | 21.4 | - |
| A2D2 | Bounding Box | 30.7 | 18.9 | 34.9 | - |
| Argoverse | Segmentation | 27.4 | 24.9 | - | 8.36 |
| Argoverse | Bounding Box | 19.9 | 17.5 | 30.0 | - |
| Argoverse | 3D | 8.9 | 43.8 | 41.8 | - |
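
The table above reports per-class shares for each dataset and annotation type. As a rough sketch of how such shares could be recomputed from a list of per-object class labels, the snippet below counts labels and converts the counts to percentages. It assumes instance counts; the source does not say whether the segmentation rows are measured by instance or by pixel area. The `class_distribution` helper and the sample labels are hypothetical and not part of any D3S tooling.

```python
from collections import Counter


def class_distribution(labels):
    """Return {class_name: percentage of all labels}, rounded to one decimal."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        # No annotations: return an empty distribution instead of dividing by zero.
        return {}
    return {name: round(100.0 * n / total, 1) for name, n in counts.items()}


# Hypothetical labels for illustration only; not taken from D3S.
sample = ["car"] * 3 + ["vegetation"] * 2 + ["sidewalk"] * 5
distribution = class_distribution(sample)
```

Running the same helper on your own training split is a quick way to check whether its class balance resembles the figures published above.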

Why DDD (D3S) Stands Out

Precision + Context

Geometric accuracy and rich attributes (role, demographic, function) enable context-aware perception and planning, not just detection.

Diverse ODD Coverage

Annotations span suburban, urban, and highway environments to bolster generalization and robustness.

High Quality Standards

≥95% quality threshold enforced by rigorous QA, matching stringent, safety-critical requirements.

Actionable Intelligence

Go beyond “what” and “where” to capture “who” and “why” signals that improve decision-making for safer, smarter AD/ADAS systems.

Access Datasets Now

Turn complex data into smarter AI systems.

Talk to our Solutions Engineers to tailor datasets, enhance annotations, and accelerate your next AI innovation.

FAQs

- No. D3S is released under CC BY-NC-SA 4.0 for non-commercial use only. Contact us for commercial licensing/annotation services.

- D3S builds upon two major open-source autonomous driving datasets:

  - A2D2: created by Audi AG, Germany, covering urban, suburban, rural, and highway environments. (References: ReadMe, License)
  - Argoverse: developed across six U.S. cities (Miami, Austin, Washington, DC, Pittsburgh, Palo Alto, and Detroit). (References: Terms of Use, Privacy Policy)

  D3Scenes (D3S) is produced and maintained by Digital Divide Data (DDD) and licensed under CC BY-NC-SA 4.0. (Refer to D3S Terms of Use for details.)

- D3S overlays are organized to align with the source datasets. Depending on your workflow, you may reference or separately obtain the original data per their terms.

- Yes, provided with the dataset. We also share guidance on mapping attributes to your class ontology.

- We provide recommended split files and can adapt them to your research protocol on request.
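
For the attribute-to-ontology mapping mentioned in the FAQs above, a plain lookup table is often enough to get started. The sketch below is illustrative only: the source label names, the target class names, and the `map_label` helper are all assumptions, and the actual mapping guidance ships with the dataset.

```python
# Hypothetical mapping from source labels to a target ontology.
# None of these names are confirmed D3S class names.
LABEL_MAP = {
    "car": "vehicle",
    "truck": "vehicle",
    "pedestrian": "vru",
    "cyclist": "vru",
    "traffic_sign": "sign",
}


def map_label(label, default="ignore"):
    """Normalize a source label and map it to the target class.

    Labels missing from LABEL_MAP fall back to `default`, so unmapped
    classes are easy to filter out or audit later.
    """
    key = label.strip().lower().replace(" ", "_")
    return LABEL_MAP.get(key, default)


mapped = [map_label(name) for name in ["Car", "Traffic Sign", "vegetation"]]
```

Routing unknown labels to an explicit fallback class, rather than raising an error, keeps a first pass over the data from stopping on classes your ontology does not cover.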
