By Umang Dayal

July 1, 2025

Imagine trying to build a powerful generative AI model without enough training data. Maybe the data you need is locked behind privacy regulations, scattered across siloed systems, or simply doesn’t exist in sufficient quantity. In such cases, you’re not just facing a technical challenge; you’re facing a hard limit on your model’s potential. This is exactly where synthetic data becomes essential.

Synthetic data isn’t scraped, collected, or labeled in the traditional sense. Instead, it’s created artificially but purposefully by algorithms that understand and reproduce the statistical properties of real-world information. It’s data without the baggage of personal identifiers, logistical constraints, or legacy inconsistencies.

In this blog, we’ll define synthetic data, explain why it matters for generative AI, and walk through the challenges and best practices that shape its responsible use. We’ll also examine real-world use cases across industries to illustrate how synthetic data is being used today.

What Is Synthetic Data?

Synthetic data is artificially generated information created through algorithms and statistical models to reflect the characteristics and structure of real-world data. Unlike traditional datasets that are captured through direct observation or manual input, synthetic data is simulated based on rules, patterns, or learned distributions. It serves as a proxy when real data is inaccessible, insufficient, or sensitive, offering a controlled and flexible alternative for training and testing AI models.

There are several types of synthetic data, each suited to different use cases.

Tabular synthetic data mimics structured datasets such as spreadsheets or databases, and is often used in financial modeling, healthcare analytics, and customer segmentation.

Image-based synthetic data is commonly generated through computer graphics or generative adversarial networks (GANs) to simulate visual environments for object detection or classification tasks.

Video and 3D synthetic data are integral to training models for humanoid robots and autonomous vehicles, where simulating physical interactions is crucial.

Text-based synthetic data, often produced by large language models, supports tasks in natural language understanding, dialogue generation, and content moderation.

A key advantage of synthetic data lies in its ability to overcome limitations of real data. Real datasets often contain noise, inconsistencies, or biases, and acquiring them may raise concerns about privacy, cost, or feasibility. In contrast, synthetic datasets can be generated at scale, targeted for specific distributions, and scrubbed of personally identifiable information.

Why Synthetic Data Matters for Generative AI

Generative AI models thrive on data; the more diverse, comprehensive, and representative the training data, the more robust and capable these models become. However, sourcing such data from real-world environments is not always feasible. In many domains, data may be limited, imbalanced, protected by privacy laws, or simply unavailable. Synthetic data offers a compelling solution to these challenges by enabling the controlled creation of training datasets that align with the needs of generative AI systems.

Data Diversity

One of the most significant benefits of synthetic data is its ability to enhance data diversity. Real-world datasets often reflect historical biases or omit rare scenarios, which can limit a model’s ability to generalize. Synthetic data allows developers to engineer variation deliberately, ensuring that minority classes, edge cases, or underrepresented contexts are well covered. For generative models, which aim to replicate or create new content based on learned patterns, this diversity can make the difference between a narrow, overfitted system and one that is capable of broad, creative output.

Scalability

Generative models, particularly large-scale transformers and diffusion models, require vast amounts of data to perform well. Generating high-volume synthetic datasets is often faster, cheaper, and more repeatable than collecting equivalent real-world data. Moreover, synthetic data can be generated in parallel with model development, accelerating iteration cycles and improving overall agility.

Privacy and Compliance

In regulated sectors like healthcare, finance, or education, access to sensitive user data is restricted by frameworks such as GDPR, HIPAA, or FERPA. Synthetic data offers a path to developing AI capabilities without exposing or mishandling private information. By simulating realistic but non-identifiable data, organizations can innovate responsibly while staying compliant with data governance requirements.

Cost Efficiency and Repeatability

Synthetic data reduces the need for expensive manual data collection and annotation, and it lets teams replicate experiments consistently across environments. This is especially useful when fine-tuning or validating generative models, where reproducibility and control over inputs are essential.

Key Challenges in Synthetic Data Generation

Generating data that is both useful and trustworthy involves navigating a range of technical and ethical challenges. Without addressing these carefully, synthetic data can introduce unintended risks, compromise model performance, or even violate the very principles it aims to uphold, such as fairness and privacy.

Balancing Realism and Utility

One of the core tensions in synthetic data generation lies in the trade-off between realism and utility. Highly realistic synthetic data might closely resemble real data but fail to introduce the variability needed for robust learning. Conversely, data that is too artificially varied may lack grounding in realistic distributions, reducing its relevance. Striking the right balance is critical: the data must be statistically consistent with real-world patterns while also tailored to improve model generalization and robustness.

Distribution Shift and Bias Propagation

If the synthetic data does not accurately capture the statistical properties of the target domain, models trained on it may suffer from distributional shift, performing well on synthetic inputs but failing on real-world data. Additionally, if the real data used to train synthetic generators (such as GANs or LLMs) contains embedded biases, these can be replicated or even amplified in the synthetic outputs. Without active bias mitigation techniques, synthetic data risks reinforcing the very issues it aims to solve.

Overfitting to Synthetic Artifacts

Synthetic data often contains subtle patterns or artifacts introduced by the generation process. These artifacts, while imperceptible to humans, can be easily learned by machine learning models. This can result in overfitting, where models perform well during training but fail to generalize when exposed to real data. Overfitting to synthetic quirks is especially dangerous in high-stakes applications such as medical diagnosis, autonomous navigation, or content moderation.

Labeling Inconsistencies and Semantic Drift

In supervised learning contexts, maintaining high-quality labels in synthetic data is crucial. However, automated labeling pipelines or LLM-generated annotations can introduce semantic drift, where labels become ambiguous or misaligned with real-world definitions. This is particularly challenging in tasks involving subjective or nuanced labels, such as sentiment analysis or medical image classification. Inconsistent labeling undermines training quality and can erode trust in the resulting models.

Evaluation Complexity

Unlike real data, synthetic datasets often lack a clear benchmark for evaluation. There is no “ground truth” against which to measure fidelity, diversity, or usefulness. As a result, organizations must define custom evaluation pipelines that combine statistical tests, model-based validation, and manual review. This introduces operational overhead and requires cross-functional collaboration between data scientists, domain experts, and compliance teams.

Security and Privacy Risks

Although synthetic data is often assumed to be privacy-safe, this assumption is not always valid. If a generative model is trained on sensitive data without proper safeguards, it may inadvertently leak identifiable information through memorization. Attacks such as membership inference can exploit these vulnerabilities. Therefore, privacy-preserving mechanisms must be embedded throughout the data generation lifecycle, not just applied post hoc.
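One simple way to look for memorization is to measure how close each synthetic record sits to its nearest training record. The sketch below is a minimal illustration, not a full privacy audit: the function names, the toy records, and the 0.01 threshold are all assumptions made for the example, and the features are assumed to be already scaled to a comparable range.

```python
import math

def nearest_distance(record, reference):
    """Euclidean distance from `record` to its closest row in `reference`."""
    return min(math.dist(record, ref) for ref in reference)

def flag_near_copies(synthetic, training, threshold):
    """Return synthetic records that sit suspiciously close to a training record."""
    return [s for s in synthetic if nearest_distance(s, training) < threshold]

# Hypothetical records with two features already scaled to [0, 1].
training = [(0.34, 0.52), (0.51, 0.87), (0.29, 0.41)]
synthetic = [(0.34, 0.52), (0.45, 0.70), (0.291, 0.412)]

# The threshold is an illustrative assumption; in practice it would be
# calibrated, for example against distances between held-out real records.
flagged = flag_near_copies(synthetic, training, threshold=0.01)
print(flagged)  # the exact copy and the near-copy are flagged
```

A check like this catches verbatim or near-verbatim copies, but it does not replace formal guarantees such as differential privacy.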

Best Practices for Generating Synthetic Data in Gen AI

Effectively generating synthetic data for generative AI involves more than simply creating large volumes of artificial samples. To truly serve as a high-quality substitute or supplement to real-world data, synthetic datasets must be purposefully designed, thoroughly validated, and ethically managed.

The following best practices address the core requirements for building reliable, privacy-compliant, and performance-enhancing synthetic data pipelines.

Define Clear Objectives

Before generating any data, it is essential to clarify the purpose the synthetic data will serve. Whether the goal is to augment small datasets, simulate edge cases, reduce privacy risk, or support model prototyping, the generation process should be aligned with specific downstream tasks.

For example, if the target application is dialogue generation, the synthetic data should reflect realistic conversational flows, context preservation, and speaker intent. Misaligned objectives often result in data that appears valid on the surface but offers limited functional value during training or evaluation.

Maintain Data Realism and Diversity

High-quality synthetic data should approximate the statistical properties of real data while also introducing meaningful variability. This means the data should not only look authentic but should also preserve key relationships and distributions.

For structured data, this includes correlations between variables; for images, texture and lighting consistency; for text, syntactic coherence and domain relevance. Diversity should be engineered intentionally by including underrepresented scenarios, linguistic styles, or behavioral patterns, ensuring the model learns from a broad dataset. Using advanced generative models like GANs, VAEs, or LLMs with domain-specific fine-tuning can help achieve this balance.
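To make "preserving correlations between variables" concrete, here is a minimal sketch for two numeric columns. It assumes the columns are roughly jointly Gaussian, which real tabular data often is not; the column meanings (age and income), the function names, and the toy "real" table are all hypothetical. Production generators such as GANs or VAEs learn far richer structure, so treat this only as an illustration of the idea.

```python
import math
import random
import statistics

def fit_two_columns(rows):
    """Estimate means, standard deviations, and the Pearson correlation."""
    xs = [r[0] for r in rows]
    ys = [r[1] for r in rows]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sx, sy = statistics.stdev(xs), statistics.stdev(ys)
    cov = sum((x - mx) * (y - my) for x, y in rows) / (len(rows) - 1)
    return mx, my, sx, sy, cov / (sx * sy)

def sample_two_columns(params, n, rng):
    """Draw n synthetic rows from a bivariate Gaussian with the fitted parameters."""
    mx, my, sx, sy, rho = params
    residual = math.sqrt(max(0.0, 1.0 - rho * rho))
    rows = []
    for _ in range(n):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        # Mixing z1 into the second column reproduces the fitted correlation.
        rows.append((mx + sx * z1, my + sy * (rho * z1 + residual * z2)))
    return rows

rng = random.Random(0)

# Hypothetical "real" table: age and an income that depends on age.
real_rows = []
for _ in range(2000):
    age = rng.gauss(40, 10)
    real_rows.append((age, 1000 * age + rng.gauss(0, 8000)))

real_params = fit_two_columns(real_rows)
synthetic_rows = sample_two_columns(real_params, 5000, rng)
synthetic_params = fit_two_columns(synthetic_rows)

print(f"real correlation:      {real_params[4]:.3f}")
print(f"synthetic correlation: {synthetic_params[4]:.3f}")
```

If the two printed correlations diverge noticeably, the generator is losing a relationship the downstream model may depend on.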

Ensure Privacy by Design

Synthetic data is often used to avoid exposing sensitive information, but this benefit is not guaranteed by default. Privacy risks may persist, particularly if the data generator has memorized aspects of the original dataset. To address this, privacy must be incorporated into the design of the synthetic data pipeline.

Techniques such as differential privacy, data masking, and anonymization of training inputs should be used to minimize leakage risk. Additionally, models should be audited for memorization using tools like membership inference tests or canary insertion methods. Privacy validation is especially critical in sectors governed by strict compliance frameworks such as GDPR or HIPAA.
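As a small illustration of differential privacy, the sketch below applies the Laplace mechanism to a single summary statistic (a mean) before that statistic is used to parameterize a generator. This is a deliberately narrow example under stated assumptions: the bounds, the epsilon value, and the function name are hypothetical, and privatizing an entire generative model typically requires heavier machinery than shown here.

```python
import random

def private_mean(values, lower, upper, epsilon, rng):
    """Release the mean of `values` with Laplace noise calibrated to epsilon.

    Values are clipped to [lower, upper] so that changing one record can move
    the mean by at most (upper - lower) / n, which is the sensitivity.
    """
    clipped = [min(max(v, lower), upper) for v in values]
    sensitivity = (upper - lower) / len(clipped)
    rate = epsilon / sensitivity  # inverse of the Laplace scale
    # The difference of two i.i.d. exponentials is a Laplace(0, 1/rate) sample.
    noise = rng.expovariate(rate) - rng.expovariate(rate)
    return sum(clipped) / len(clipped) + noise

rng = random.Random(7)

# Hypothetical sensitive column: patient ages.
ages = [rng.randint(18, 90) for _ in range(1000)]

true_mean = sum(ages) / len(ages)
noisy_mean = private_mean(ages, lower=18, upper=90, epsilon=1.0, rng=rng)
print(f"true mean:  {true_mean:.2f}")
print(f"noisy mean: {noisy_mean:.2f}")
```

Smaller epsilon values add more noise and give stronger privacy; the right setting depends on the sensitivity of the data and the compliance context.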

Validate Synthetic Data Quality

A synthetic dataset is only as valuable as its ability to support accurate, generalizable model performance. Validation must include both statistical checks and task-specific evaluations. Statistical measures such as the Kolmogorov-Smirnov test or KL divergence can be used to compare distributions between real and synthetic data.

For vision or language tasks, evaluation metrics such as FID (Fréchet Inception Distance), BLEU scores, or model performance deltas provide deeper insight. Where applicable, human-in-the-loop review can catch subtle quality issues not detected through automation. Validation should be repeated periodically, especially as models or data generation strategies evolve.
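The two statistical checks named above can be sketched in a few lines for a single numeric feature. This version uses only the standard library; the histogram binning and smoothing constant in the KL estimate are illustrative assumptions, and the "real", "good", and "bad" samples are made up for the demo.

```python
import bisect
import math
import random

def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between empirical CDFs."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    gap = 0.0
    for x in a_sorted + b_sorted:
        cdf_a = bisect.bisect_right(a_sorted, x) / len(a_sorted)
        cdf_b = bisect.bisect_right(b_sorted, x) / len(b_sorted)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap

def kl_divergence(p_samples, q_samples, bins=20, smoothing=1e-6):
    """Histogram-based estimate of KL(P || Q) over a shared set of bins."""
    lo = min(min(p_samples), min(q_samples))
    hi = max(max(p_samples), max(q_samples))
    width = (hi - lo) / bins or 1.0

    def histogram(samples):
        counts = [smoothing] * bins  # smoothing avoids log(0) for empty bins
        for s in samples:
            counts[min(int((s - lo) / width), bins - 1)] += 1
        total = sum(counts)
        return [c / total for c in counts]

    p, q = histogram(p_samples), histogram(q_samples)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))

rng = random.Random(42)
real = [rng.gauss(0, 1) for _ in range(2000)]
good_synth = [rng.gauss(0, 1) for _ in range(2000)]    # same distribution
bad_synth = [rng.gauss(1.5, 1) for _ in range(2000)]   # shifted distribution

for name, synth in [("good", good_synth), ("bad", bad_synth)]:
    print(name, round(ks_statistic(real, synth), 3), round(kl_divergence(real, synth), 3))
```

Both scores should come out clearly lower for the well-matched sample than for the shifted one. Per-feature checks like these miss relationships between features, so they complement rather than replace task-level evaluation.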

Prevent Overfitting to Synthetic Artifacts

To keep models from overfitting to synthetic data, consider a hybrid training approach that mixes synthetic and real examples. Exposure to real examples makes it less likely that the model learns spurious patterns or artifacts unique to the synthetic data.

Additional strategies include injecting controlled noise, using data augmentation techniques, and analyzing generalization performance on held-out real data. It’s important to detect when models learn from synthetic data in a way that doesn’t transfer to real-world behavior, as this often signals over-reliance on generation-specific features.
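A hybrid training set can be assembled with a small helper like the one below. This is a sketch under assumptions: the function name, the idea of keeping every real example, and the provenance tags are our illustrative choices, not a prescribed recipe. The tags make it easy to confirm later that held-out evaluation data contains only real examples.

```python
import random

def mix_datasets(real, synthetic, synthetic_fraction, rng):
    """Keep all real examples and add synthetic ones until they make up
    roughly `synthetic_fraction` of the combined training set."""
    if not 0 <= synthetic_fraction < 1:
        raise ValueError("synthetic_fraction must be in [0, 1)")
    wanted = round(len(real) * synthetic_fraction / (1 - synthetic_fraction))
    wanted = min(wanted, len(synthetic))  # cannot use more than we have
    mixed = [(x, "real") for x in real]
    mixed += [(x, "synthetic") for x in rng.sample(synthetic, wanted)]
    rng.shuffle(mixed)
    return mixed

rng = random.Random(3)

# Placeholder examples; in practice these would be feature/label records.
real_examples = [f"real-{i}" for i in range(100)]
synthetic_examples = [f"synth-{i}" for i in range(500)]

train_set = mix_datasets(real_examples, synthetic_examples, synthetic_fraction=0.5, rng=rng)
n_synth = sum(1 for _, source in train_set if source == "synthetic")
print(len(train_set), n_synth)
```

Sweeping `synthetic_fraction` and tracking accuracy on held-out real data is one way to spot the point where additional synthetic data stops helping.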

Document Data Generation Pipelines

Transparency and reproducibility are critical when using synthetic data, especially in regulated or high-stakes environments. Every stage of the generation process should be logged, including the source data, generation method, model versions, prompts or parameters used, and any post-processing steps.

This documentation ensures that datasets can be regenerated, debugged, or audited when needed. It also helps establish accountability and supports downstream governance workflows. In collaborative teams, well-documented data pipelines allow multiple stakeholders to understand, review, and improve the synthetic data lifecycle.
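One lightweight way to capture this information is a manifest written alongside every generated dataset. The sketch below is an assumption about what such a record might contain; the field names, the example generator name, and the parameter values are hypothetical and should be adapted to your own governance requirements.

```python
import hashlib
import json

def build_manifest(records, *, source_data, generator, generator_version,
                   parameters, seed, post_processing):
    """Describe how a synthetic dataset was produced, plus a content hash."""
    payload = json.dumps(records, sort_keys=True, ensure_ascii=False).encode("utf-8")
    return {
        "source_data": source_data,
        "generator": generator,
        "generator_version": generator_version,
        "parameters": parameters,
        "seed": seed,
        "post_processing": post_processing,
        "record_count": len(records),
        # The hash lets auditors confirm a regenerated dataset is identical.
        "sha256": hashlib.sha256(payload).hexdigest(),
    }

# Hypothetical generated records and settings, for illustration only.
records = [{"id": 1, "text": "Where is my order?"}, {"id": 2, "text": "I need a refund."}]

manifest = build_manifest(
    records,
    source_data="support-tickets-sample (hypothetical)",
    generator="example-dialogue-generator",
    generator_version="0.1.0",
    parameters={"temperature": 0.7, "prompt_template": "v3"},
    seed=1234,
    post_processing=["deduplicate", "pii_scrub"],
)
print(json.dumps(manifest, indent=2))
```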

Case Studies for Synthetic Data Generation in Generative AI

Synthetic data is enabling organizations to build powerful AI systems while navigating complex data challenges. Let’s explore a few of them below:

Healthcare: Privacy-Preserving Clinical Data for Model Training

In healthcare, access to high-quality clinical data is often restricted due to patient privacy regulations and institutional data silos. Synthetic data has become a viable alternative for training diagnostic models, simulating patient records, and building predictive tools.

For example, synthetic electronic health records (EHRs) generated using domain-aware generative models can closely mirror real patient trajectories without exposing personal information.

Hospitals and research labs have used synthetic datasets to pretrain machine learning models that are later fine-tuned on limited real data, reducing the risk of privacy violations while improving model readiness. With privacy safeguards like differential privacy built into generation pipelines, these synthetic datasets help accelerate AI research in areas such as disease progression modeling, hospital readmission prediction, and clinical NLP.

Finance: Simulating Transactional Patterns for Fraud Detection

The financial sector faces constant tension between innovation and regulatory compliance. Fraud detection models, for instance, require access to detailed transactional data, which is tightly guarded and often anonymized to the point of being unusable. Synthetic data allows financial institutions to simulate transactional behavior, including fraudulent patterns, in a controlled environment.

By using generative techniques to produce plausible but non-identifiable transaction sequences, teams can train and stress-test fraud detection systems across a wide range of scenarios. This has proven especially useful in developing systems that can handle adversarial behavior and rare event detection. Some organizations also use synthetic customer profiles for testing risk models, building credit scoring tools, or creating training datasets for financial chatbots.
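To illustrate the idea of simulating transactions with a controlled share of fraudulent patterns, here is a toy rule-based simulator. Every distribution, field name, and pattern in it is invented for the example (for instance, that fraud skews toward larger amounts at night); it is not drawn from real financial data, and a production system would more likely learn these patterns with a generative model.

```python
import random

def simulate_transactions(n, fraud_rate, rng):
    """Generate n synthetic transactions with a configurable fraud rate."""
    rows = []
    for i in range(n):
        is_fraud = rng.random() < fraud_rate
        if is_fraud:
            # Assumed pattern: larger amounts in the early hours.
            amount = round(rng.lognormvariate(6.5, 1.0), 2)
            hour = rng.randint(0, 4)
        else:
            # Assumed pattern: smaller amounts during the day.
            amount = round(rng.lognormvariate(3.5, 0.8), 2)
            hour = rng.randint(7, 22)
        rows.append({
            "transaction_id": f"txn-{i:06d}",  # synthetic ID, no real account data
            "amount": amount,
            "hour": hour,
            "is_fraud": is_fraud,
        })
    return rows

rng = random.Random(11)
transactions = simulate_transactions(10_000, fraud_rate=0.02, rng=rng)
fraud_count = sum(t["is_fraud"] for t in transactions)
print(f"{fraud_count} fraudulent out of {len(transactions)}")
```

Because `fraud_rate` is a parameter, the same simulator can produce both realistic class ratios and deliberately fraud-heavy sets for stress testing rare-event detection.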

Retail and E-commerce: Training Conversational AI with Synthetic Dialogues

In the retail sector, AI-powered customer support systems depend heavily on dialogue data. Yet, collecting real customer conversations, especially those involving complaints, returns, or technical issues, can be slow, costly, and privacy-sensitive. Companies are now using synthetic dialogue generation with large language models to simulate realistic customer-agent conversations across various contexts.

These synthetic interactions are used to train and fine-tune chatbots, voice assistants, and other conversational systems. By injecting controlled variations such as tone, urgency, or product categories, teams can increase coverage across intent types while maintaining language diversity. This approach can improve model accuracy, shorten development timelines, and support continuous retraining without additional data collection overhead.
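Controlled variation can be expressed as a grid of scenario attributes that is expanded into generation prompts. The sketch below only builds the prompts; it does not call any language model, and the attribute values and prompt wording are hypothetical examples rather than a recommended template.

```python
import itertools

# Illustrative attribute values; a real project would derive these from
# its own intent taxonomy and product catalog.
TONES = ["calm", "frustrated", "confused"]
URGENCY = ["low", "high"]
PRODUCTS = ["laptop", "running shoes", "coffee maker"]

PROMPT_TEMPLATE = (
    "Write a customer-support conversation about returning this product: {product}. "
    "The customer sounds {tone} and the request has {urgency} urgency. "
    "Alternate 'Customer:' and 'Agent:' turns and end with a resolution."
)

def build_scenarios(tones, urgencies, products):
    """Expand every combination of attributes into a labeled prompt."""
    scenarios = []
    for tone, urgency, product in itertools.product(tones, urgencies, products):
        scenarios.append({
            "tone": tone,
            "urgency": urgency,
            "product": product,
            "prompt": PROMPT_TEMPLATE.format(tone=tone, urgency=urgency, product=product),
        })
    return scenarios

scenarios = build_scenarios(TONES, URGENCY, PRODUCTS)
print(len(scenarios), "scenarios")
print(scenarios[0]["prompt"])
```

Keeping the attributes alongside each prompt means the resulting dialogues arrive pre-labeled, which makes coverage across tones and intents easy to audit.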

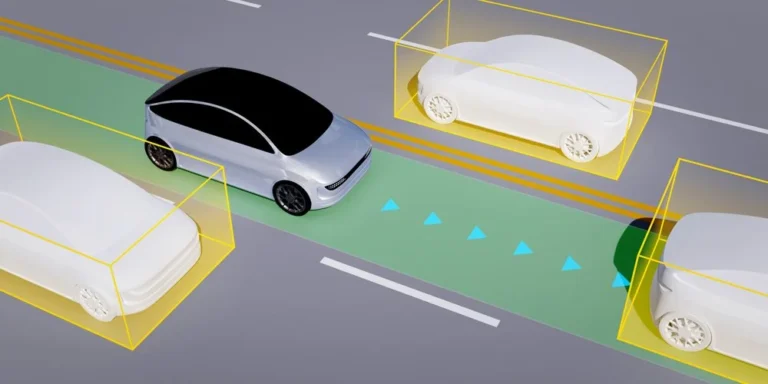

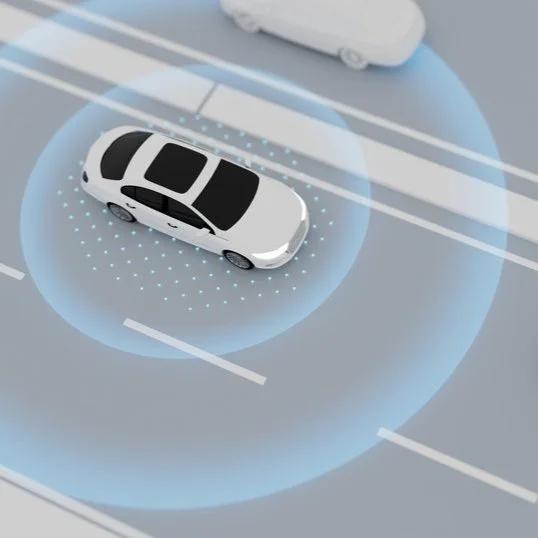

Autonomous Systems: Synthetic Vision for Safer Navigation

Autonomous vehicles and robotics rely on massive volumes of image and sensor data to perceive and navigate environments. Capturing enough real-world edge cases, like rare weather conditions, unusual pedestrian behavior, or nighttime visibility, can be prohibitively expensive or dangerous. Synthetic image and video data, generated through simulation engines or neural rendering models, fill this gap.

By simulating diverse traffic scenarios and environmental conditions, teams can build more robust perception models and reduce dependency on real-world trial-and-error testing. This has become standard practice in industries ranging from self-driving car development to drone navigation and warehouse automation.

Conclusion

Synthetic data has emerged as a cornerstone technology for scaling and improving generative AI systems. As models grow in complexity and demand more representative, diverse, and privacy-conscious training data, synthetic generation offers a flexible and effective way to meet these needs.

Synthetic data is not a replacement for real-world data; it is a powerful complement. When used responsibly, it can fill critical gaps, reduce time to deployment, and enable innovation where traditional data collection is constrained. As generative AI continues to expand its reach across industries, organizations that master synthetic data generation will be better positioned to build scalable, secure, and high-performing AI systems.

At Digital Divide Data (DDD), we offer scalable, ethical, and privacy-compliant data solutions for Gen AI that power next-generation AI systems. Whether you need support designing synthetic data pipelines, validating AI outputs, or enhancing data diversity across domains, our subject matter experts (SMEs) are here to help.

Partner with DDD to transform your data strategy with precision and purpose. Contact us to learn how we can support your GenAI goals.

FAQs

1. Is synthetic data suitable for fine-tuning large language models (LLMs)?

Yes, synthetic data can be highly effective for fine-tuning LLMs, especially when real-world data is limited, sensitive, or needs augmentation in specific domains. It is often used to simulate domain-specific interactions (e.g., legal, medical, or technical dialogues). However, care must be taken to avoid reinforcing hallucinations, injecting biases, or reducing factual consistency. Prompt engineering, data diversity, and human-in-the-loop review are often used to manage these risks.

2. Can synthetic data help address class imbalance in machine learning models?

Absolutely. One of the primary benefits of synthetic data is its ability to balance datasets by generating additional samples for underrepresented classes. This is especially useful in scenarios like fraud detection, medical diagnoses, or language classification tasks where rare categories lack sufficient examples in real-world datasets. Synthetic oversampling can improve recall and fairness metrics, provided that the generated samples are of high fidelity.
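A classic way to do this for numeric features is SMOTE-style oversampling: pick a minority example, pick one of its nearest minority neighbors, and create a new point somewhere on the line between them. The sketch below is a simplified, standard-library version of that idea; the function name, the toy points, and the choice of `k` are assumptions for illustration.

```python
import math
import random

def smote_like(minority, n_new, k, rng):
    """Create n_new points by interpolating between minority-class neighbors."""
    if len(minority) < 2:
        raise ValueError("need at least two minority examples")
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # The k nearest other minority points to the chosen base point.
        neighbors = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbor = rng.choice(neighbors)
        t = rng.random()  # position along the segment from base to neighbor
        synthetic.append(tuple(b + t * (n - b) for b, n in zip(base, neighbor)))
    return synthetic

rng = random.Random(5)

# Hypothetical minority-class examples with two scaled features.
minority_points = [(0.10, 0.90), (0.15, 0.85), (0.20, 0.95), (0.12, 0.80)]

new_points = smote_like(minority_points, n_new=20, k=2, rng=rng)
print(len(new_points), "synthetic minority examples")
```

Interpolated points stay inside the region the minority class already occupies, so this approach balances class counts but does not invent genuinely new kinds of rare cases.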

3. What legal considerations apply when using synthetic data derived from proprietary datasets?

Even if the final dataset is synthetic, legal exposure may arise if the synthetic data generator was trained on copyrighted or proprietary sources without proper authorization. This is especially relevant when using third-party models or pre-trained generators. Organizations should ensure that training data complies with licensing agreements and that synthetic outputs do not replicate protected content.

4. Can synthetic data be used for benchmarking AI systems?

Synthetic data can be used for benchmarking, especially when test scenarios need to be controlled, varied systematically, or anonymized. However, benchmarks based solely on synthetic data may not fully reflect real-world performance. A common practice is to use synthetic data for stress testing or exploratory evaluation, while retaining a real-world validation set to measure true deployment readiness.

5. Is synthetic data appropriate for reinforcement learning (RL) environments?

Yes, synthetic environments are commonly used in RL to simulate decision-making scenarios. Simulation engines generate synthetic states, actions, and rewards for training agents in tasks like robotics, game playing, or industrial control. However, sim-to-real transfer remains a challenge; models trained on synthetic environments must be adapted carefully to handle the complexity of the real world.
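The toy simulator below shows the basic loop of generating synthetic states, actions, and rewards. It is a made-up corridor environment, not an interface from any RL library; the class name, reward values, and the per-episode "slip" probability are assumptions. Randomizing the slip probability each episode is a small nod to domain randomization, one common approach to easing sim-to-real transfer.

```python
import random

class CorridorEnv:
    """Toy simulator: the agent starts at position 0 and must reach `goal`."""

    def __init__(self, goal=5, max_steps=50, slip_range=(0.0, 0.2), seed=None):
        self.goal = goal
        self.max_steps = max_steps
        self.slip_range = slip_range
        self.rng = random.Random(seed)

    def reset(self):
        self.position = 0
        self.steps = 0
        # Each episode gets its own slip probability (randomized dynamics).
        self.slip = self.rng.uniform(*self.slip_range)
        return self.position

    def step(self, action):
        """Apply action (+1 right, -1 left); the move may slip and reverse."""
        if self.rng.random() < self.slip:
            action = -action
        self.position = max(0, self.position + action)
        self.steps += 1
        reached = self.position >= self.goal
        done = reached or self.steps >= self.max_steps
        reward = 1.0 if reached else -0.01
        return self.position, reward, done

def rollout(env, policy):
    """Run one episode and return (state, action, reward, next_state) tuples."""
    state = env.reset()
    transitions, done = [], False
    while not done:
        action = policy(state)
        next_state, reward, done = env.step(action)
        transitions.append((state, action, reward, next_state))
        state = next_state
    return transitions

env = CorridorEnv(seed=0)
episode = rollout(env, policy=lambda state: 1)  # always move right
print(len(episode), "synthetic transitions")
```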