By Aaron Bianchi

Sep 6, 2023

Introduction

In the quest to achieve fully autonomous driving, one of the critical challenges lies in creating a reliable perception system. Autonomous vehicles need to interpret their surroundings accurately and make informed decisions in real time. Sensor fusion, a cutting-edge technology, holds the key to improving perception and safety in autonomous driving. This blog post will delve into the concept of sensor fusion and its pivotal role in shaping the future of autonomous vehicles.

The Power of Sensor Fusion

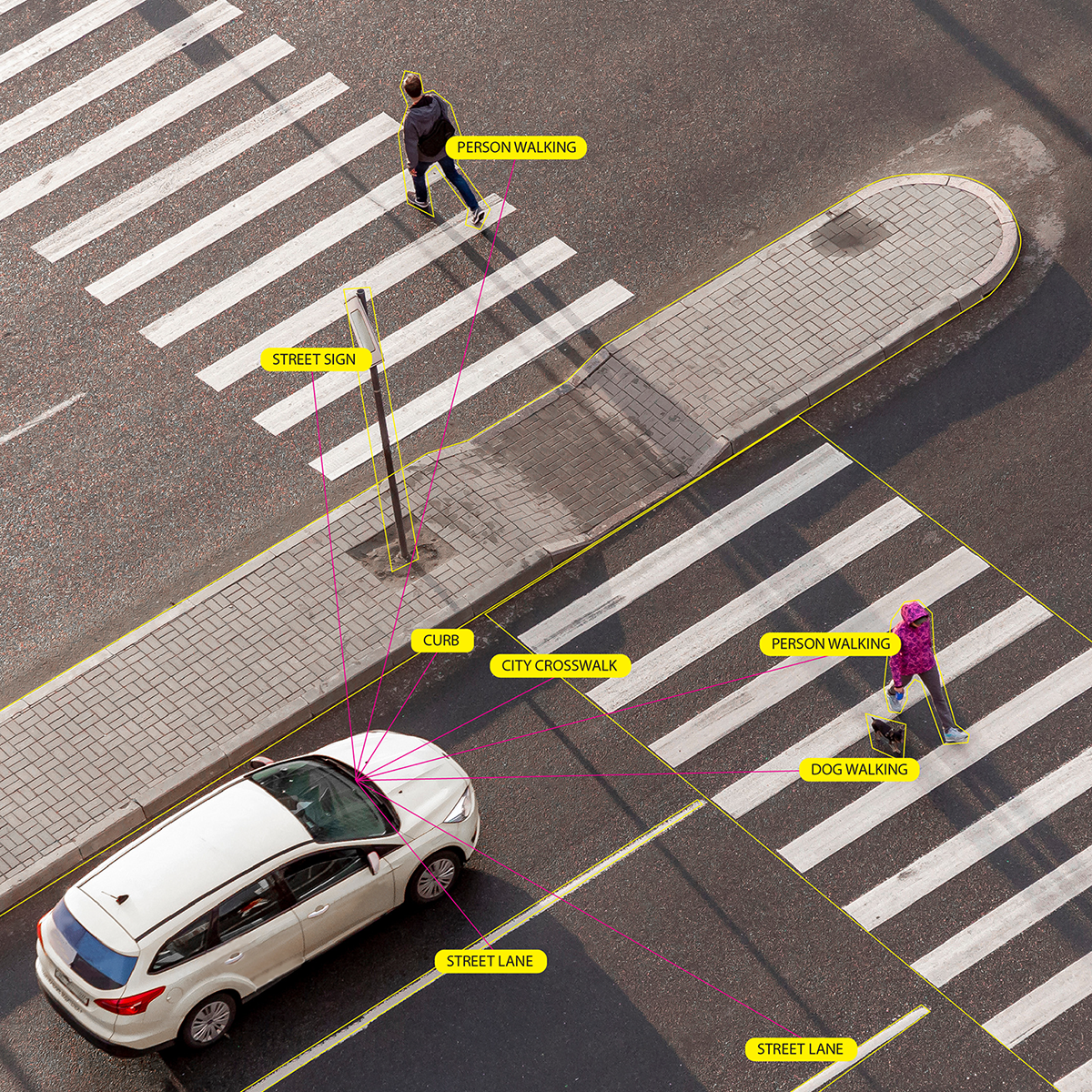

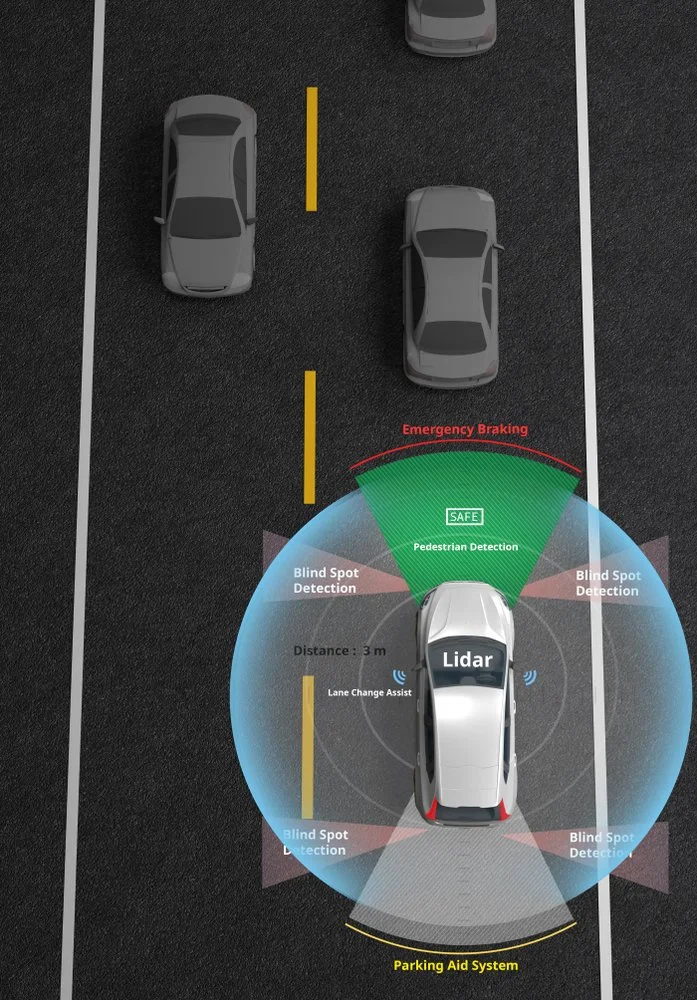

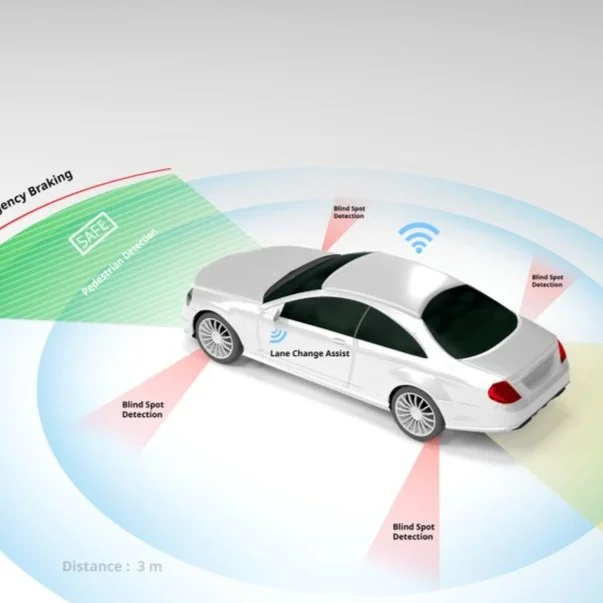

Sensor fusion involves integrating data from various sensors, such as cameras, radars, and lidar, to form a single, detailed view of the vehicle's environment. Each sensor provides unique information, and by combining them, autonomous vehicles can achieve a holistic perception of the world around them. For instance, cameras are excellent at recognizing objects, while radars can accurately measure distance and speed. Lidar, on the other hand, creates precise 3D maps of the surroundings. The strengths and weaknesses of these sensors also vary with lighting conditions, weather, and environment. Integrating these data streams before performing any modeling or analysis enables vehicles to overcome the limitations of individual sensors and significantly enhances their perception capabilities.
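To make the benefit of combining sensors concrete, here is a minimal sketch, assuming a radar and a lidar each report the distance to the same object with independent noise of known variance. It uses inverse-variance weighting, the same result a Kalman filter measurement update gives when fusing two readings of a static quantity. The `RangeReading` structure, the `fuse_ranges` helper, and all sensor numbers are hypothetical and for illustration only; they are not specifications of any real sensor.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    """One distance estimate from one sensor (hypothetical structure)."""
    sensor: str
    distance_m: float  # measured distance to the object, in meters
    variance: float    # measurement noise variance, in m^2


def fuse_ranges(readings: list[RangeReading]) -> tuple[float, float]:
    """Fuse independent range estimates by inverse-variance weighting.

    Returns (fused distance, fused variance). The fused variance is never
    larger than the smallest input variance, which is the formal sense in
    which adding a sensor improves the estimate.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]  # more precise -> more weight
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return fused, 1.0 / total


# Illustrative numbers only, not real sensor specs.
readings = [
    RangeReading("radar", distance_m=25.4, variance=0.25),
    RangeReading("lidar", distance_m=25.1, variance=0.04),
]
distance, variance = fuse_ranges(readings)
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f} m^2")
```

With these made-up numbers the fused estimate is about 25.14 m with a variance of about 0.034 m², lower than the variance of either sensor on its own.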

Training Algorithms for Fused Data

To interpret and exploit the fused sensor data effectively, autonomous driving algorithms must undergo rigorous training. Training involves exposing the algorithms to vast amounts of labeled data, allowing them to learn and adapt to different scenarios. In 2017, Waymo became the first company to operate fully driverless cars, with no safety driver behind the wheel, on public roads in the US. Its history-making success can be attributed in large part to a perception system that combines a custom suite of sensors and software, allowing its vehicles to understand more accurately what is happening around them.

Challenges arise in calibrating, synchronizing, and aligning data from diverse sensors, ensuring consistent data quality, and managing computational complexity. Advanced machine learning techniques, such as deep neural networks, play a crucial role in making sense of the fused data accurately. Key challenges in training algorithms for fused data include:

- Syncing and Aligning Data: Sensors sample at different rates, so their streams must be timestamped and aligned precisely to avoid errors.
- Ensuring Calibration Across Sensors: Accurate calibration is crucial; calibration errors or drift between sensors degrade the performance of any model that relies on fused inputs.
- Handling Large Data: Real-time sensor fusion requires efficient algorithms because of its computational complexity and the need to run on the vehicle's own (edge) hardware.
- Managing Sensor Failures: Redundancy is essential to maintain safety when a sensor malfunctions.
- Addressing Edge Cases: Fused algorithms must handle rare and challenging scenarios, and how well they do so depends heavily on the training data, both real and synthetic.
- Costly Training Data: Acquiring labeled data from multiple sensors is time-consuming and expensive.
- Interpretability Concerns: The “black-box” nature of deep learning makes it hard to understand why a model made a particular decision.
- Ensuring Generalization: Algorithms should work well across varied environments to enable broad adoption.
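The first and fourth challenges, aligning streams that arrive at different rates and noticing when a sensor drops out, can be sketched together. This is a minimal illustration, assuming all sensors are already timestamped on a shared clock: a radar range stream is linearly interpolated onto camera frame times, and a frame is marked stale when the bracketing radar samples are too far apart. The function `align_to_timestamp`, its `max_gap_s` threshold, and the sample data are hypothetical.

```python
import bisect
from typing import Optional


def align_to_timestamp(
    times: list[float],
    values: list[float],
    t_query: float,
    max_gap_s: float = 0.1,
) -> Optional[float]:
    """Linearly interpolate a sensor stream to t_query (seconds).

    `times` must be sorted ascending. Returns None when t_query lies outside
    the stream or the bracketing samples are more than max_gap_s apart, which
    a caller can treat as a dropout or failed sensor.
    """
    i = bisect.bisect_left(times, t_query)
    if i < len(times) and times[i] == t_query:
        return values[i]  # exact timestamp match
    if i == 0 or i == len(times):
        return None  # outside the recorded interval: do not extrapolate
    t0, t1 = times[i - 1], times[i]
    if t1 - t0 > max_gap_s:
        return None  # gap too large: treat the sensor as unavailable here
    alpha = (t_query - t0) / (t1 - t0)
    return values[i - 1] + alpha * (values[i] - values[i - 1])


# Hypothetical 20 Hz radar stream with a dropout between 0.15 s and 0.40 s.
radar_times = [0.00, 0.05, 0.10, 0.15, 0.40]
radar_ranges = [30.0, 29.5, 29.0, 28.5, 26.0]

# Hypothetical camera frame times, sampled at a different rate.
for t_cam in [0.0, 0.0333, 0.125, 0.30]:
    r = align_to_timestamp(radar_times, radar_ranges, t_cam)
    print(t_cam, "stale" if r is None else round(r, 2))
```

The last camera frame falls inside the radar dropout, so it is reported as stale rather than paired with an outdated reading. Linear interpolation is only one choice; it assumes the signal changes smoothly between samples.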

Real-World Applications and Case Studies

Sensor fusion has already made a significant impact on real-world autonomous driving applications. These range from simple pairings of RGB and infrared (IR) cameras, which provide more robust sensing in both bright and dark conditions, to camera-lidar fusion, which enables vehicles to detect pedestrians and cyclists more reliably. Radar-lidar fusion, meanwhile, improves object detection in adverse weather such as heavy rain or fog, where cameras may struggle. These examples show how sensor fusion contributes to a safer and more efficient autonomous driving experience.
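A basic building block of camera-lidar fusion is projecting lidar points into the camera image, which is also where cross-sensor calibration matters. The sketch below assumes the camera detector has already produced a 2D bounding box, and that calibration supplies a rotation `R` and translation `t` (lidar to camera) plus a pinhole intrinsic matrix `K`. It then estimates the detection's distance as the median depth of the lidar points that land inside the box. The function names, coordinate conventions, calibration values, and point cloud are all hypothetical.

```python
from typing import Optional

import numpy as np


def project_lidar_to_image(
    points_lidar: np.ndarray, R: np.ndarray, t: np.ndarray, K: np.ndarray
) -> tuple[np.ndarray, np.ndarray]:
    """Project Nx3 lidar points to pixels with a pinhole camera model.

    Returns (Mx2 pixel coordinates, M depths) for points in front of the camera.
    """
    pts_cam = points_lidar @ R.T + t        # lidar frame -> camera frame
    pts_cam = pts_cam[pts_cam[:, 2] > 0]    # drop points behind the camera
    uvw = pts_cam @ K.T                     # apply camera intrinsics
    pixels = uvw[:, :2] / uvw[:, 2:3]       # perspective divide
    return pixels, pts_cam[:, 2]


def box_depth(pixels: np.ndarray, depths: np.ndarray, box) -> Optional[float]:
    """Median depth of projected points inside a box (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    inside = (
        (pixels[:, 0] >= x1) & (pixels[:, 0] <= x2)
        & (pixels[:, 1] >= y1) & (pixels[:, 1] <= y2)
    )
    if not inside.any():
        return None  # no lidar support for this detection
    return float(np.median(depths[inside]))


# Assumed conventions: lidar x forward, y left, z up;
# camera z forward, x right, y down. Sensors assumed co-located (t = 0).
R = np.array([[0.0, -1.0, 0.0],
              [0.0, 0.0, -1.0],
              [1.0, 0.0, 0.0]])
t = np.zeros(3)
K = np.array([[800.0, 0.0, 640.0],
              [0.0, 800.0, 360.0],
              [0.0, 0.0, 1.0]])

points = np.array([
    [10.0, 0.0, 0.0],    # pedestrian, about 10 m ahead
    [10.2, 0.1, 0.5],
    [9.9, -0.1, -0.5],
    [30.0, 5.0, 0.0],    # background object
    [-5.0, 0.0, 0.0],    # behind the vehicle, dropped
])
pixels, depths = project_lidar_to_image(points, R, t, K)
depth = box_depth(pixels, depths, (600, 300, 680, 420))  # hypothetical camera box
print(depth)
```

Here the three pedestrian points fall inside the box and the background point does not, so the detection is assigned a depth of 10.0 m. A miscalibrated `R` or `t` would shift the projected points and pull in the wrong depths, which is why calibration appears among the training challenges above.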

Future Prospects of Sensor Fusion Technologies

As the technology advances, sensor fusion will remain critical for deploying autonomous vehicles (AVs) at scale. Research and development efforts are focused on refining algorithms to handle complex edge cases and improve real-time decision-making. Advances in hardware, such as more compact and affordable sensors, will further drive the adoption of sensor fusion in the industry. Additionally, the ongoing rollout of 5G networks will enable vehicles to communicate and share perception data, enhancing the overall safety of autonomous driving systems.

Conclusion

Sensor fusion is a critical enabler of enhanced safety in autonomous driving. By combining data from multiple sensors, autonomous vehicles can achieve a comprehensive understanding of their surroundings, improving perception and decision-making. Although training algorithms for fused data remains challenging, real-world applications demonstrate the tangible benefits of this technology. Looking ahead, continued research and development will further refine sensor fusion technologies, making autonomous driving safer and more reliable than ever before. As we move towards a future with autonomous vehicles, sensor fusion stands as a beacon of hope, steering us closer to a world of safer and smarter transportation.