Using Aerial Imagery as Training Data

By Aaron Bianchi

Aug 6, 2021

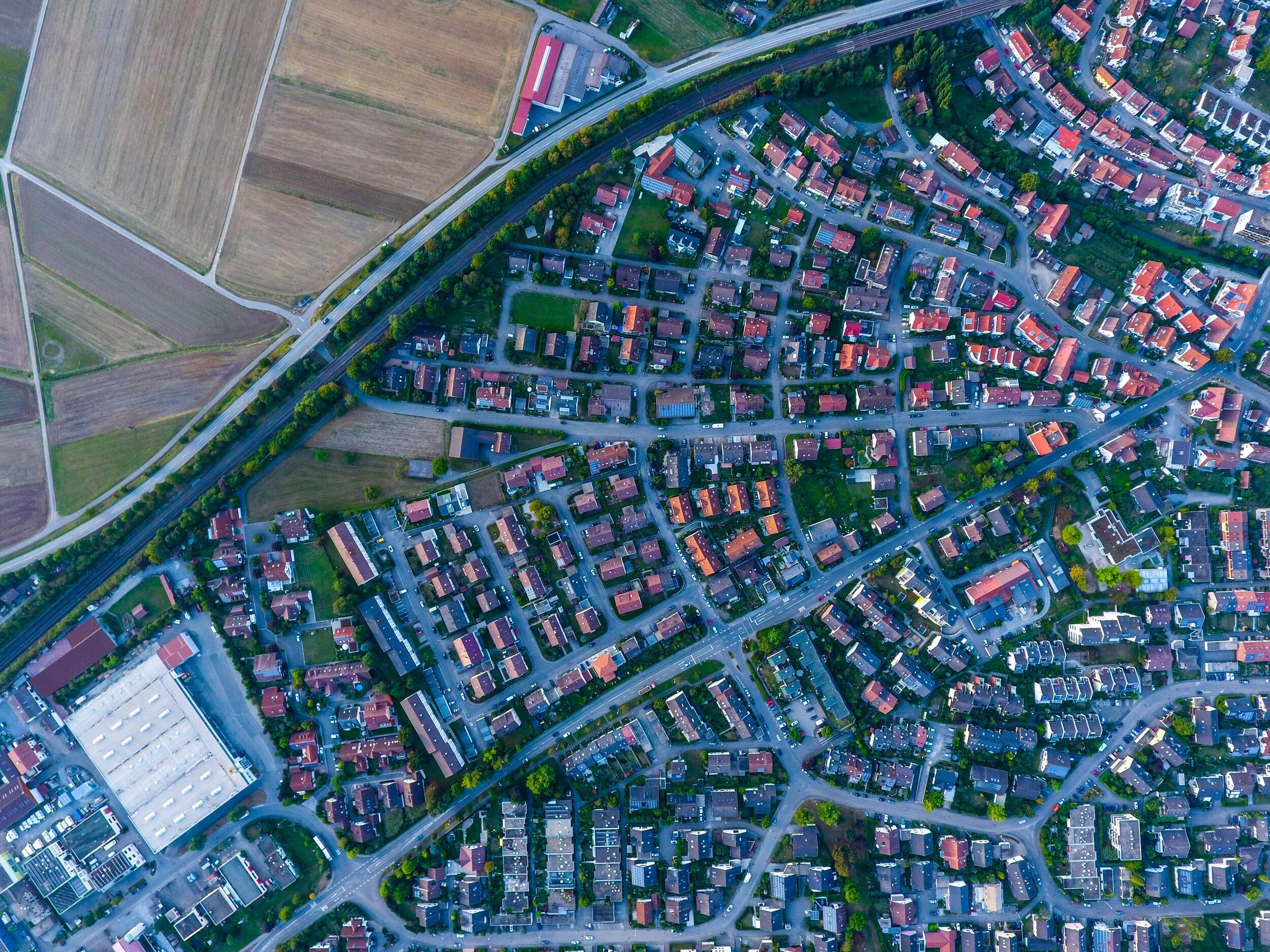

Numerous industries use satellite and aerial imagery to apply machine learning to business and social problem sets. This is a particular strength for DDD given our experience in geospatial and aerial use cases in insurance, transportation, meteorology, environmental protection, agriculture, law enforcement, national security, remote delivery, and traffic management.

This experience has taught us a great deal about the challenges and pitfalls associated with aerial image segmentation. We aired a webinar on this subject, and you can view the recording on-demand. Our goal is to deliver a hands-on guide to overcoming these challenges.

Price of failure. Consider the cost of inadequately or incorrectly training an algorithm to evaluate geospatial or aerial images. Say the project is agricultural. Can you imagine the impact of incorrectly identifying crop disease or inadequate irrigation? Or say the project is military. What are the potential costs of misidentifying an elementary school as an army barracks? You need to factor the expected cost of this kind of failure into your DIY vs outsource equation.

Workforce. Aerial and geospatial images are often very large and very detailed, meaning that a large workforce of labelers is required to generate sufficient volumes of training data in a timely fashion. In our experience, most data science teams don’t have access to an in-house workforce big enough to meet their training data demands. This lack of a workforce is one of the principal drivers of seeking a training data partner.

Data volumes. Keep in mind that you may be able to support in-house data preparation for an initial, simple use case, but in your quest for greater levels of model confidence, you will have to train your algorithm on additional use cases, and eventually edge cases. You may be able to generate enough data in-house to train an algorithm to land a delivery drone on a simple graphical marker, but what does it take to distinguish between a leaf on the marker and a three-year-old child? Each additional use case requires at least as much training data as the first one, and rarely occurring edge cases may require significantly more data. This dramatically compounds your workforce requirements, a discovery that many data science teams make late in their projects when budgets are dwindling and deadlines are imminent.

Process and tools. Extremely high-resolution images are far too large to assign to a single labeler. But breaking up images, assigning them to multiple labelers, and then reassembling everything coherently introduces issues around worker consistency and process management. Do you have the wherewithal to train consistency into your own workforce? Do you have the technology and process required to track changes to very high numbers of image fragments? Most data science teams don’t.
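To make the split-and-reassemble step concrete, here is a minimal sketch in Python. It is an illustration only, not a description of DDD's tooling: the `Tile` record, the `split_into_tiles` and `reassemble` functions, and the `-1` "unlabeled" fill value are all hypothetical names chosen for this example, and the image is modeled as a plain 2D list of integers rather than a real raster format.

```python
from dataclasses import dataclass
from typing import List

Grid = List[List[int]]  # 2D raster: one int per pixel (e.g. a class label)


@dataclass(frozen=True)
class Tile:
    """One image fragment plus the bookkeeping needed to put it back."""
    tile_id: str  # hypothetical ID used to track the fragment
    row: int      # top-left pixel row in the source image
    col: int      # top-left pixel column in the source image
    pixels: Grid


def split_into_tiles(image: Grid, tile_size: int) -> List[Tile]:
    """Cut an image into tile_size x tile_size fragments (edge tiles may be smaller)."""
    height = len(image)
    width = len(image[0]) if height else 0
    tiles = []
    for r in range(0, height, tile_size):
        for c in range(0, width, tile_size):
            block = [row[c:c + tile_size] for row in image[r:r + tile_size]]
            tiles.append(Tile(f"r{r}_c{c}", r, c, block))
    return tiles


def reassemble(tiles: List[Tile], height: int, width: int, fill: int = -1) -> Grid:
    """Paste tiles back at their recorded offsets; missing areas keep the fill value."""
    canvas = [[fill] * width for _ in range(height)]
    for t in tiles:
        for dr, row in enumerate(t.pixels):
            canvas[t.row + dr][t.col:t.col + len(row)] = row
    return canvas
```

Storing each fragment's offset with the fragment is what lets the pieces be labeled independently and still land in the right place afterwards. Any region still holding the fill value after reassembly points to a fragment that was never returned. A production pipeline would likely also overlap adjacent tiles so that objects straddling a boundary are labeled consistently, which this sketch does not attempt.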

Specialization. Are you confident that you can define the most efficient tasks required to label your training data? We had a client who wanted us to label every individual tree in enormous hi-res forest images. As it happened, they weren’t interested in tree density; rather, they were trying to detect illegal land clearing. Because we have been preparing training data for decades, we were able to show them a different approach to labeling their images that appropriately trained their algorithm, but at a fraction of the time and cost of their approach.

Focus. Preparing training data for aerial and geospatial systems involves the application of human judgment to nuanced, and sometimes hard-to-decipher, images. Our own data shows that the longer individuals spend on a particular kind of interpretation, the faster and more accurately they do the work. Data science teams that crowdsource their aerial segmentation work do not capture these workforce efficiencies. DDD assigns you a team that stays with you throughout the span of your project, meaning that you capture all the benefits of growing worker efficiency and your effective cost per transaction steadily declines.