Data Bias: AI’s Ticking Time Bomb

By Aaron Bianchi

Dec 8, 2021

We’ve all seen the headlines. It’s big news when an AI system fails or backfires, and it’s an awful black eye for the organization named in those headlines.

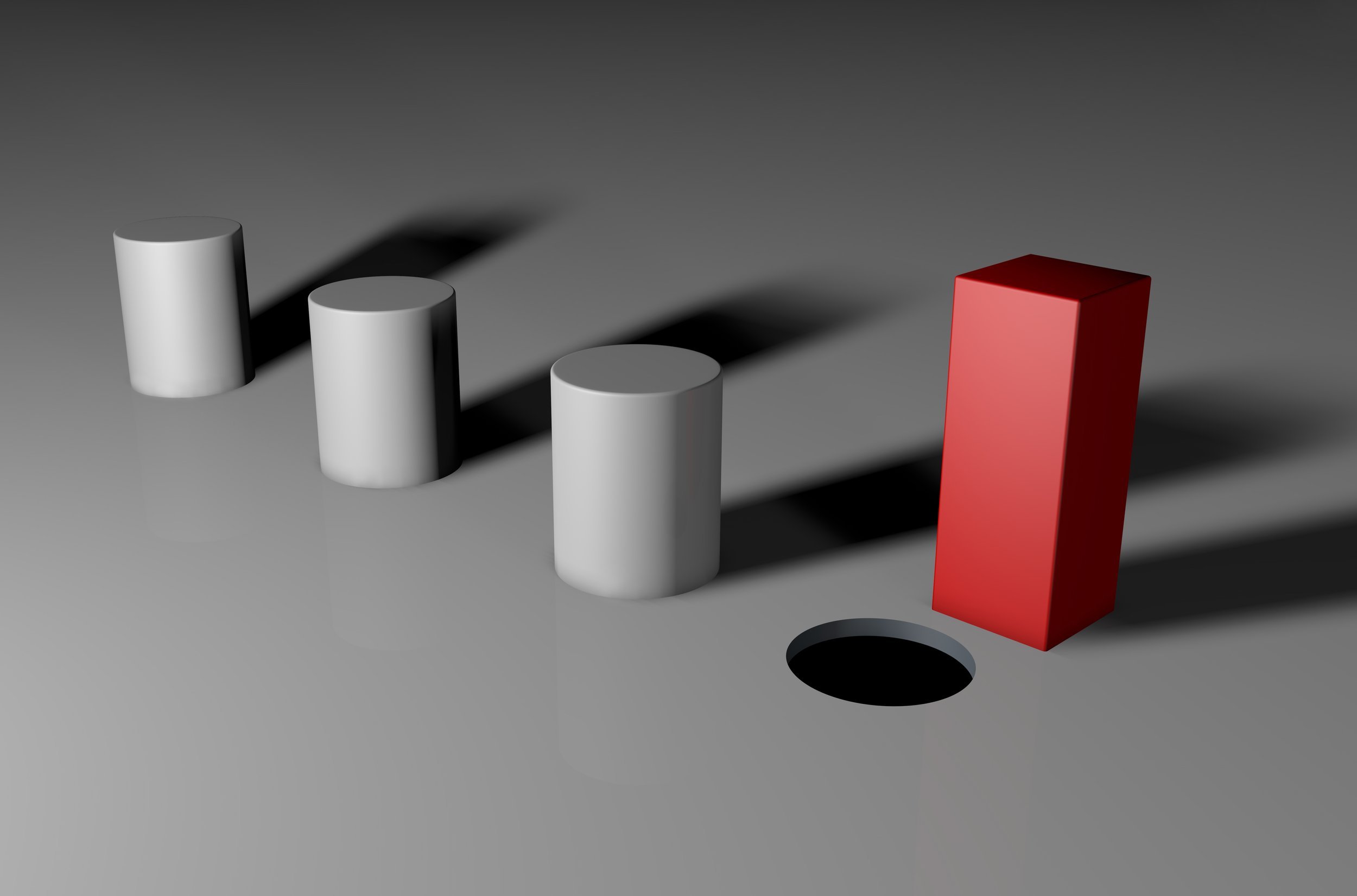

Most of the time, these headlines can be traced back to issues with the AI model’s training data. Bias in training data can take a variety of forms, but every form creates the potential for leaving the algorithm under- or mis-trained.

In our discussions with clients, we alert them to three data preparation mistakes or oversights that can produce bias:

Failing to ensure the data measuring instrument is accurate. Distortion of the entire data set can result from bad measurement or collection techniques. When this occurs, the bias tends to be consistent and in a particular direction. The danger here is that the production model is out of sync with the reality it is designed to react to.

Bad measurement can take many forms. Low-quality speech samples, with noise and missing frequencies, can affect a model’s ability to process speech in real time. A drone with an inaccurate GPS system or misaligned altimeter will provide image-based training data with systematically distorted image metadata. Poorly designed survey and interview instruments can consistently distort responses.
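
Because measurement bias tends to be consistent and directional, one way to look for it is to compare a sample of instrument readings against a trusted reference and check whether the average signed error is far from zero. The sketch below is only an illustration under that assumption: the function names, the paired-readings setup, and the threshold of three standard errors are hypothetical choices, not a prescribed method.

```python
from math import sqrt
from statistics import mean, stdev


def systematic_offset(measured, reference):
    """Return (mean signed error, standard error) for paired readings."""
    if len(measured) != len(reference):
        raise ValueError("measured and reference must have the same length")
    if len(measured) < 2:
        raise ValueError("need at least two paired readings")
    # Signed errors keep the direction of the distortion.
    errors = [m - r for m, r in zip(measured, reference)]
    offset = mean(errors)
    stderr = stdev(errors) / sqrt(len(errors))
    return offset, stderr


def looks_biased(measured, reference, z=3.0):
    """Flag the instrument when the offset exceeds z standard errors.

    The default z=3.0 is an arbitrary, illustrative threshold.
    """
    offset, stderr = systematic_offset(measured, reference)
    if stderr == 0:
        return offset != 0
    return abs(offset) > z * stderr


# Hypothetical altimeter readings (metres) against surveyed heights.
altimeter = [105.1, 204.9, 305.0, 405.2]
surveyed = [100.0, 200.0, 300.0, 400.0]
print(systematic_offset(altimeter, surveyed))
print(looks_biased(altimeter, surveyed))
```

Random noise averages out toward zero in a check like this, while a misaligned instrument shows up as an offset that stays on one side of the reference.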

Failing to accurately capture the universe in the data. Sample bias occurs when the training data set is not representative of the larger space the algorithm is intended to operate in. A non-representative training data set will teach the algorithm that the problem space is different from what it really is.

A classic example of sample bias involved an attempt to teach an algorithm to distinguish dogs from wolves. The training data’s wolf images were overwhelmingly in snowy settings, which led the algorithm to conclude that every picture with snow in it contained a wolf.

But as amusing as this is, sample bias can have serious consequences. Facial recognition algorithms that are trained on disproportionately Caucasian images are more likely to misidentify African Americans and Asians. Autonomous vehicles that crash into gray trailers on overcast days have likely had too little exposure to this scenario.
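
A simple first check for sample bias is to compare how often each group or scenario appears in the training set with how often you expect it to appear where the model will be used. The sketch below assumes you can tag each training example with a group (here, the background setting of a wolf image) and that you have an estimate of the target shares; the function names, the tags, the 50/50 target, and the 5-point tolerance are all hypothetical.

```python
from collections import Counter


def representation_gaps(train_groups, target_shares):
    """Return observed share minus target share for each target group."""
    counts = Counter(train_groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("train_groups is empty")
    return {
        group: counts.get(group, 0) / total - target
        for group, target in target_shares.items()
    }


def underrepresented(train_groups, target_shares, tolerance=0.05):
    """List groups whose observed share falls short by more than tolerance."""
    gaps = representation_gaps(train_groups, target_shares)
    return sorted(g for g, gap in gaps.items() if gap < -tolerance)


# Hypothetical background tags for the wolf images in a training set.
wolf_backgrounds = ["snow"] * 9 + ["forest"] * 1
expected = {"snow": 0.5, "forest": 0.5}  # assumed deployment mix
print(representation_gaps(wolf_backgrounds, expected))
print(underrepresented(wolf_backgrounds, expected))
```

A check like this only covers the attributes you thought to tag, so it complements, rather than replaces, careful sampling design.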

Failing to eliminate social or cultural influences from the data. Cultural bias happens when human prejudices or regional idiosyncrasies make their way into AI training data.

As an example, in the UK athletic shoes are often referred to as pumps. In the US, pumps are women’s heeled shoes. Use a UK-based team to label shoe images for an algorithm targeting US shoppers, and you end up with a model that may offer the wrong shoes because of cultural bias.
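
One way to guard against this kind of labeling mismatch is to record each annotator’s locale and map regional terms onto a shared, unambiguous category before training. The sketch below is a minimal illustration: the mapping table, the locale codes, the category names, and the `normalize_label` function are all hypothetical, and a real vocabulary would need review by people familiar with each market.

```python
# Hypothetical mapping from (annotator locale, raw label) to a canonical category.
CANONICAL = {
    ("en-GB", "pumps"): "athletic_shoe",
    ("en-GB", "trainers"): "athletic_shoe",
    ("en-US", "pumps"): "heeled_shoe",
    ("en-US", "sneakers"): "athletic_shoe",
}


def normalize_label(term, annotator_locale):
    """Map a raw label to a canonical category, or None if it is unmapped.

    A None result means the label should go to manual review
    rather than being guessed.
    """
    key = (annotator_locale, term.strip().lower())
    return CANONICAL.get(key)


print(normalize_label("Pumps", "en-GB"))
print(normalize_label("Pumps", "en-US"))
print(normalize_label("loafers", "en-US"))
```

Returning `None` for unknown terms is a deliberate choice here: it surfaces gaps in the vocabulary instead of silently passing an ambiguous label through to the model.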

Cultural bias can be more insidious, however. Randomly sample images of airline pilots and you will end up with a data set that is almost entirely male. However, it would be wrong for all kinds of reasons to have the algorithm you are training conclude that airline pilot and male are causally related.

There are well-understood approaches to data gathering and data sampling that avoid all these forms of bias. Unfortunately, these approaches are labor- and time-intensive, and data science teams often lack members with the skills or bandwidth to apply them.

Our clients generally want to offload the management of training data bias, and they are delighted to learn that mitigating this bias is a core DDD capability. We fully understand the forms that bias takes and the sources of those biases. We know how to create or collect data sets that are free of bias. And if you already have the data you need, we can take over the management of bias in your training data sets.