Author: Umang Dayal

Finance teams often operate across multiple ERPs, dozens of SaaS tools, regional accounting systems, and an endless stream of spreadsheets. Even in companies that have invested heavily in automation, the automation tends to focus on discrete tasks. A bot posts journal entries. An OCR tool extracts invoice data. A workflow tool routes approvals.

Traditional automation and isolated ERP upgrades solve tasks. They do not address systemic data challenges. They do not unify the flow of information from source to insight. They do not embed intelligence into the foundation.

Intelligent data pipelines are the foundation for scalable, AI-enabled, audit-ready finance operations. This guide explores how intelligent data pipelines help finance and accounting teams scale, discusses best practices, and outlines the design of a scalable pipeline.

What Are Intelligent Data Pipelines in Finance?

Traditional finance data pipelines are typically batch-based. Data moves on a schedule, not in response to events. These pipelines are rule-driven, with transformation logic hard-coded by developers who may no longer be on the team. A minor schema change in a source system can break downstream reports. Observability is limited. When numbers look wrong, someone manually traces them back through layers of SQL queries.

Reconciliation loops often sit outside the pipeline entirely. Spreadsheets are exported. Variances are investigated offline. Adjustments are manually entered. This architecture may function, but it does not scale gracefully.

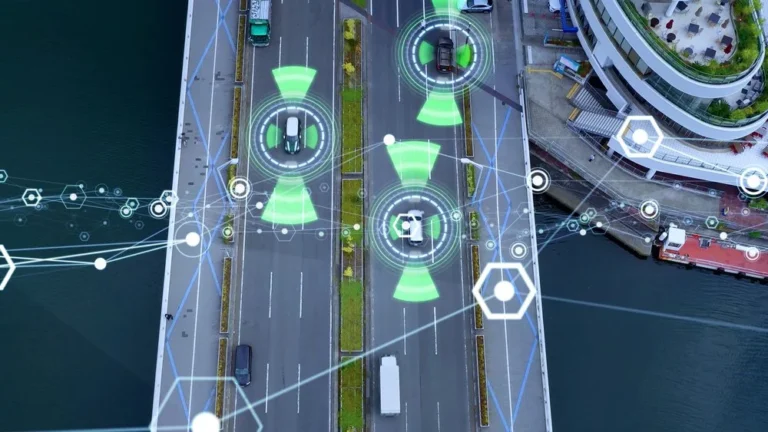

Intelligent pipelines operate differently. They are event-driven and capable of near real-time processing when needed. If a large transaction posts to a subledger, the pipeline can trigger validation logic immediately. AI-assisted validation and classification can flag anomalies before they accumulate. The system monitors itself, surfacing data quality issues proactively instead of waiting for someone to notice a discrepancy in a dashboard.
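To make the event-driven idea concrete, here is a minimal Python sketch in which a handler subscribes to a hypothetical `subledger.posted` event, so a large posting is validated the moment it is published rather than in a nightly batch. The `EventBus` class, the event name, and the 1,000,000 threshold are illustrative assumptions, not a specific product's API; a production system would typically use a message broker instead of an in-process bus.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable


@dataclass
class Event:
    name: str
    payload: dict


class EventBus:
    """Minimal in-process publish/subscribe bus (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[[Event], None]]] = defaultdict(list)

    def subscribe(self, name: str, handler: Callable[[Event], None]) -> None:
        self._handlers[name].append(handler)

    def publish(self, event: Event) -> None:
        # Handlers run as soon as the event is published, not on a schedule.
        for handler in self._handlers[event.name]:
            handler(event)


LARGE_TXN_THRESHOLD = Decimal("1000000")  # assumed materiality threshold
flagged: list[str] = []


def validate_large_posting(event: Event) -> None:
    """Flag postings at or above the threshold for immediate review."""
    amount = Decimal(event.payload["amount"])
    if abs(amount) >= LARGE_TXN_THRESHOLD:
        flagged.append(event.payload["doc_id"])


bus = EventBus()
bus.subscribe("subledger.posted", validate_large_posting)
bus.publish(Event("subledger.posted", {"doc_id": "AP-1001", "amount": "2500000.00"}))
bus.publish(Event("subledger.posted", {"doc_id": "AP-1002", "amount": "480.00"}))
print(flagged)  # ['AP-1001']
```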

Lineage and audit trails are built in, not bolted on. Every transformation is traceable. Every data version is preserved. When regulators or auditors ask how a number was derived, the answer is not buried in a chain of emails.
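One common way to make lineage tamper-evident is to log each transformation step with hashes of its inputs and outputs, the rule version applied, and a link to the previous log entry. The sketch below assumes JSON-serializable data and uses SHA-256 from Python's standard library; the record fields are illustrative, not a standard lineage schema.

```python
import hashlib
import json
from dataclasses import dataclass, field


def fingerprint(obj) -> str:
    """Deterministic SHA-256 of a JSON-serializable object."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class LineageLog:
    records: list = field(default_factory=list)

    def record(self, step: str, rule_version: str, inputs, outputs) -> None:
        prev_hash = self.records[-1]["record_hash"] if self.records else ""
        entry = {
            "step": step,
            "rule_version": rule_version,
            "input_hash": fingerprint(inputs),
            "output_hash": fingerprint(outputs),
            "prev_hash": prev_hash,
        }
        # Chaining each record to its predecessor makes silent edits detectable.
        entry["record_hash"] = fingerprint(entry)
        self.records.append(entry)

    def verify(self) -> bool:
        """Recompute every hash and check the chain is unbroken."""
        prev_hash = ""
        for entry in self.records:
            body = {k: v for k, v in entry.items() if k != "record_hash"}
            if entry["prev_hash"] != prev_hash or fingerprint(body) != entry["record_hash"]:
                return False
            prev_hash = entry["record_hash"]
        return True


log = LineageLog()
raw = [{"account": "4000", "amount": "100.00", "currency": "EUR"}]
converted = [{"account": "REV", "amount": "108.00", "currency": "USD"}]
log.record("coa_mapping_and_fx", "v3", raw, converted)
print(log.verify())  # True
log.records[0]["rule_version"] = "v4"  # simulate an undocumented change
print(log.verify())  # False
```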

These pipelines also adapt. As new data sources are introduced, whether a billing platform in the US or an e-invoicing portal in Europe, integration does not require a complete redesign. Regulatory changes can be encoded as logic updates rather than emergency workarounds.

Intelligence in this context is not a marketing term. It refers to systems that can detect patterns, surface outliers, and adjust workflows in response to evolving conditions.

Core Components of an Intelligent F&A Pipeline

Building this capability requires more than a data warehouse. It involves multiple layers working together.

Unified Data Ingestion

The starting point is ingestion. Financial data flows from ERP systems, sub-ledgers, banks, SaaS billing platforms, procurement tools, payroll systems, and, increasingly, e-invoicing portals mandated by governments. Each source has its own schema, frequency, and quirks.

An intelligent pipeline connects to these sources through API-first connectors where possible. It supports both structured and unstructured inputs. Bank statements, PDF invoices, XML tax filings, and system logs all enter the ecosystem in a controlled way. Instead of exporting CSV files manually, the flow becomes continuous.

Data Standardization and Enrichment

Raw data is rarely analysis-ready. Chart of accounts mappings must be harmonized across entities. Currencies require normalization with appropriate exchange rate logic. Tax rules need to be embedded according to jurisdiction. Metadata tagging helps identify transaction types, risk categories, or business units. Standardization is where many initiatives stall, because the work can feel tedious. Yet without consistent data models, higher-level intelligence has nothing stable to stand on.
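The standardization step can be sketched as a lookup from each entity's local account to a group chart of accounts, followed by currency conversion at the rate for the posting date. The account codes, the `GROUP_COA_MAP` table, and the exchange rates below are invented for illustration; a real pipeline would source rates from a treasury or market-data feed and apply its own rounding policy.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical mapping: (entity, local account) -> group account
GROUP_COA_MAP = {
    ("DE01", "8400"): "4000-REVENUE",
    ("US01", "41000"): "4000-REVENUE",
    ("DE01", "4930"): "6100-OFFICE",
}

# Hypothetical daily rates: (currency, date) -> USD per 1 unit of currency
FX_RATES = {
    ("EUR", date(2025, 3, 31)): Decimal("1.0800"),
    ("USD", date(2025, 3, 31)): Decimal("1"),
}


@dataclass
class StdEntry:
    entity: str
    group_account: str
    amount_usd: Decimal


def standardize(entity: str, local_account: str, amount: str,
                currency: str, posting_date: date) -> StdEntry:
    """Map to the group chart of accounts and convert to USD."""
    try:
        group_account = GROUP_COA_MAP[(entity, local_account)]
    except KeyError:
        # Unmapped accounts are surfaced explicitly instead of silently dropped.
        raise ValueError(f"No group mapping for {entity}/{local_account}") from None
    rate = FX_RATES[(currency, posting_date)]
    usd = (Decimal(amount) * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    return StdEntry(entity, group_account, usd)


print(standardize("DE01", "8400", "1250.00", "EUR", date(2025, 3, 31)))
# StdEntry(entity='DE01', group_account='4000-REVENUE', amount_usd=Decimal('1350.00'))
```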

Automated Validation and Controls

This is where the pipeline starts to show its value. Duplicate detection routines prevent double-posting. Outlier detection models surface transactions that fall outside expected ranges. Policy rules enforce segregation of duties and approval thresholds. When something fails validation, exception routing directs the issue to the appropriate owner. Instead of discovering errors at month-end, teams address them as they occur.
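A simplified version of these checks might look like the following: a duplicate check on a normalized supplier and invoice-number key, an approval-threshold rule, and a robust outlier test using the modified z-score (based on the median absolute deviation, with the commonly cited 3.5 cutoff). Each invoice is routed to exactly one queue. The field names, data, and queue names are assumptions for illustration; real outlier models are usually richer than a single per-supplier statistic.

```python
from dataclasses import dataclass
from decimal import Decimal
from statistics import median


@dataclass(frozen=True)
class Invoice:
    supplier: str
    invoice_no: str
    amount: Decimal
    approver_limit: Decimal  # signing limit of whoever approved the invoice


def modified_z(x: Decimal, history: list[Decimal]) -> float:
    """Modified z-score based on the median absolute deviation (MAD)."""
    med = median(history)
    mad = median([abs(h - med) for h in history])
    if mad == 0:
        return 0.0 if x == med else float("inf")
    return float(Decimal("0.6745") * (x - med) / mad)


def validate(invoices: list[Invoice], history: dict[str, list[Decimal]]) -> dict[str, list[str]]:
    """Run checks in order and route each invoice to exactly one queue."""
    routed = {"duplicates": [], "outliers": [], "approval_breach": [], "passed": []}
    seen = set()
    for inv in invoices:
        # Normalize the invoice number so "inv-1" and "INV-1" collide.
        key = (inv.supplier, inv.invoice_no.strip().upper())
        if key in seen:
            routed["duplicates"].append(inv.invoice_no)
            continue
        seen.add(key)
        if inv.amount > inv.approver_limit:
            routed["approval_breach"].append(inv.invoice_no)
        elif abs(modified_z(inv.amount, history.get(inv.supplier, [inv.amount]))) > 3.5:
            routed["outliers"].append(inv.invoice_no)
        else:
            routed["passed"].append(inv.invoice_no)
    return routed


history = {"ACME": [Decimal(a) for a in ("980", "1010", "995", "1005", "1020", "990")]}
batch = [
    Invoice("ACME", "INV-1", Decimal("1000"), Decimal("5000")),
    Invoice("ACME", "inv-1", Decimal("1000"), Decimal("5000")),  # same invoice, re-keyed
    Invoice("ACME", "INV-2", Decimal("9800"), Decimal("10000")),
    Invoice("ACME", "INV-3", Decimal("6000"), Decimal("5000")),
]
print(validate(batch, history))
# {'duplicates': ['inv-1'], 'outliers': ['INV-2'], 'approval_breach': ['INV-3'], 'passed': ['INV-1']}
```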

Reconciliation and Matching Intelligence

Reconciliation is often one of the most labor-intensive parts of finance operations. Intelligent pipelines can automate invoice-to-purchase-order matching, applying flexible logic rather than rigid thresholds. Intercompany elimination logic can be encoded systematically. Cash application can be auto-matched based on patterns in remittance data.
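Intercompany elimination logic can be encoded as a netting check: for each pair of entities, the receivable recorded by one side should offset the payable recorded by the other, and any residual above a tolerance becomes an exception. The entity codes, the sign convention (receivables positive, payables negative), and the tolerance are illustrative assumptions, and amounts are assumed to be already translated into a single group currency.

```python
from collections import defaultdict
from decimal import Decimal

# (reporting entity, counterparty entity, amount in group currency)
# Convention assumed here: receivables positive, payables negative.
ic_balances = [
    ("US01", "DE01", Decimal("150000.00")),   # US01 receivable from DE01
    ("DE01", "US01", Decimal("-150000.00")),  # DE01 payable to US01
    ("US01", "UK01", Decimal("42000.00")),
    ("UK01", "US01", Decimal("-41250.00")),   # UK01 booked less than US01
]


def intercompany_exceptions(balances, tolerance=Decimal("100.00")):
    """Return entity pairs whose intercompany balances do not net to zero."""
    net = defaultdict(Decimal)
    for entity, counterparty, amount in balances:
        pair = tuple(sorted((entity, counterparty)))  # same key for both sides
        net[pair] += amount
    return {pair: diff for pair, diff in net.items() if abs(diff) > tolerance}


print(intercompany_exceptions(ic_balances))
# {('UK01', 'US01'): Decimal('750.00')}
```

Pairs that net within tolerance can be eliminated automatically, while the remaining pairs are routed to the two entity owners to resolve.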

Accrual suggestion engines may propose entries based on historical behavior and current trends, subject to human review. The goal is not to remove accountants from the process, but to reduce repetitive work that adds little judgment.
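A deliberately simple accrual suggestion engine could propose an amount from recent history whenever a recurring vendor has not yet invoiced for the period, and mark every proposal as pending review rather than posting it. The median-of-last-three-months rule and the vendor data are assumptions; real engines may also weigh contracts, purchase orders, and seasonality.

```python
from dataclasses import dataclass
from decimal import Decimal
from statistics import median


@dataclass
class AccrualSuggestion:
    vendor: str
    period: str
    amount: Decimal
    basis: str
    status: str = "PENDING_REVIEW"  # never auto-posted in this sketch


def suggest_accruals(history, invoiced_this_period, period, lookback=3):
    """Propose accruals for recurring vendors with no invoice yet this period.

    history: vendor -> list of monthly expense amounts, oldest first.
    """
    suggestions = []
    for vendor, amounts in history.items():
        if vendor in invoiced_this_period or len(amounts) < lookback:
            continue  # already invoiced, or too little history to suggest anything
        recent = amounts[-lookback:]
        suggestions.append(AccrualSuggestion(
            vendor=vendor,
            period=period,
            amount=median(recent),
            basis=f"median of last {lookback} months: {[str(a) for a in recent]}",
        ))
    return suggestions


history = {
    "CloudHost": [Decimal("12100"), Decimal("12400"), Decimal("12900"), Decimal("13050")],
    "Facilities": [Decimal("8000"), Decimal("8000"), Decimal("8000")],
    "NewVendor": [Decimal("500")],
}
for s in suggest_accruals(history, invoiced_this_period={"Facilities"}, period="2025-03"):
    print(s.vendor, s.amount, s.status)
# CloudHost 12900 PENDING_REVIEW
```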

Observability and Governance Layer

Finance cannot compromise on control. Data lineage tracking shows how each figure was constructed. Version control ensures that changes in logic are documented. Access management restricts who can view or modify sensitive data. Continuous control monitoring provides visibility into compliance health. Without this layer, automation introduces risk. With it, automation can enhance control.

AI-Ready Data Outputs

Once data flows are clean, validated, and governed, advanced use cases become realistic. Forecast models draw from consistent historical and operational data. Risk scoring engines assess exposure based on transaction patterns. Scenario simulations evaluate the impact of pricing changes or currency shifts. Some organizations experiment with narrative generation for close commentary, where systems draft variance explanations for review. That may sound futuristic, but with reliable inputs, it becomes practical.

Why Finance and Accounting Cannot Scale Without Pipeline Modernization

Scaling finance is not simply about handling more transactions. It involves complexity across entities, products, regulations, and stakeholder expectations. Without pipeline modernization, each layer of complexity multiplies manual effort.

The Close Bottleneck

For many teams, the month-end close is the sharpest bottleneck: subledgers sync late, reconciliations pile up, and most of the effort lands in the final days of the period. Pipeline modernization eases this pressure. Real-time subledger synchronization moves transactions into the general ledger environment with minimal delay. Pre-close anomaly detection identifies unusual movements before they distort financial statements. Continuous reconciliation reduces the volume of open items at period end. Close orchestration tools integrated into the pipeline can track task completion, flag bottlenecks, and surface risk areas early. Instead of compressing all effort into the last few days of the month, work is distributed more evenly. This does not eliminate judgment or oversight. It redistributes effort toward analysis rather than firefighting.

Accounts Payable and Receivable Complexity

Accounts payable teams increasingly manage invoices in multiple formats. PDF attachments, EDI feeds, XML submissions, and portal-based invoices coexist. In Europe, e-invoicing mandates introduce standardized but still varied requirements across countries. Cross-border transactions require careful tax handling. Exception rates can be high, especially when purchase orders and invoices do not align cleanly.

Accounts receivable presents its own challenges. Remittance information may be incomplete. Customers pay multiple invoices in a single transfer. Currency differences create reconciliation headaches.

Pipeline-driven transformation begins with intelligent document ingestion. Optical character recognition, combined with classification models, extracts key fields. Coding suggestions align invoices with the appropriate accounts and cost centers. Automated two-way and three-way matching reduces manual review.
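Two-way matching compares an invoice with its purchase order; three-way matching also checks the goods receipt, so that suppliers are only paid for quantities actually received. A minimal sketch, assuming a single line per document and an illustrative 2% unit-price tolerance (both assumptions, not a standard):

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Optional


@dataclass
class Line:
    qty: Decimal
    unit_price: Decimal


def match(po: Line, invoice: Line, receipt: Optional[Line] = None,
          price_tolerance: Decimal = Decimal("0.02")) -> list[str]:
    """Return match failures; an empty list means the invoice matches.

    Two-way when receipt is None; three-way when a goods receipt is supplied.
    Only the receipt's quantity is checked.
    """
    issues = []
    if invoice.qty > po.qty:
        issues.append("invoiced quantity exceeds PO quantity")
    if abs(invoice.unit_price - po.unit_price) > po.unit_price * price_tolerance:
        issues.append("unit price outside tolerance")
    if receipt is not None and invoice.qty > receipt.qty:
        issues.append("invoiced quantity exceeds received quantity")
    return issues


po = Line(qty=Decimal("100"), unit_price=Decimal("10.00"))
invoice = Line(qty=Decimal("100"), unit_price=Decimal("10.15"))
receipt = Line(qty=Decimal("80"), unit_price=Decimal("10.00"))

print(match(po, invoice))           # []  (two-way: price within 2%)
print(match(po, invoice, receipt))  # ['invoiced quantity exceeds received quantity']
```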

Predictive exception management goes further. By analyzing historical mismatches, the system may anticipate likely issues and flag them proactively. If a particular supplier frequently submits invoices with missing tax identifiers, the pipeline can route those invoices to a specialized queue immediately. On the receivables side, pattern-based cash application improves matching accuracy. Instead of relying solely on exact invoice numbers, the system considers payment behavior patterns.
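When a customer pays several invoices in one transfer without usable references, one fallback for cash application is to search that customer's open invoices for a combination that sums exactly to the payment. The sketch below uses a brute-force search over small combinations and accepts a match only when it is unique; the `max_items` limit and the data are assumptions, and ambiguous or missing matches would still go to a person.

```python
from decimal import Decimal
from itertools import combinations
from typing import Optional


def match_payment(payment: Decimal, open_invoices: dict[str, Decimal],
                  max_items: int = 4) -> Optional[tuple[str, ...]]:
    """Return the unique set of open invoices whose total equals the payment.

    Returns None when nothing matches or when several combinations match,
    so that ambiguous remittances are left for human review.
    """
    ids = sorted(open_invoices)
    matches = []
    for size in range(1, min(max_items, len(ids)) + 1):
        for combo in combinations(ids, size):
            if sum(open_invoices[i] for i in combo) == payment:
                matches.append(combo)
    return matches[0] if len(matches) == 1 else None


open_invoices = {
    "INV-101": Decimal("1200.00"),
    "INV-102": Decimal("350.50"),
    "INV-103": Decimal("799.50"),
    "INV-104": Decimal("2000.00"),
}
print(match_payment(Decimal("2350.50"), open_invoices))  # ('INV-102', 'INV-104')
print(match_payment(Decimal("999.99"), open_invoices))   # None
```

The search grows combinatorially with the number of open items, so `max_items` should stay small; learned payment-behavior patterns can narrow the candidate set before this step.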

Multi-Entity and Global Compliance Pressure

Organizations operating across the US and Europe must navigate differences between IFRS and US GAAP. Regional VAT regimes vary significantly. Audit traceability requirements are stringent. Data privacy obligations affect how financial information is stored and processed. Managing this complexity manually is unsustainable at scale.

Intelligent pipelines enable structured compliance logic. Jurisdiction-aware validation rules apply based on entity or transaction attributes. VAT calculations can be embedded with country-specific requirements. Reporting formats adapt to regulatory expectations. Complete audit trails reduce the risk of undocumented adjustments. Controlled AI usage, with clear logging and oversight, supports explainability. It would be naive to suggest that pipelines eliminate regulatory risk. Regulations evolve, and interpretations shift. Yet a flexible, governed data architecture makes adaptation more manageable.
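Jurisdiction-aware validation can be expressed as rules keyed by country and applied according to a transaction's attributes. The sketch below checks an invoice's VAT amount against a standard-rate table and flags a missing supplier VAT ID. The rates shown were the standard rates for these countries at the time of writing and should be verified; the table, field names, and single-rate logic are simplifying assumptions that ignore reduced rates, reverse charge, exemptions, and rate changes over time.

```python
from dataclasses import dataclass
from decimal import Decimal, ROUND_HALF_UP
from typing import Optional

# Standard VAT rates at the time of writing; verify before use and version them.
STANDARD_VAT_RATES = {"DE": Decimal("0.19"), "FR": Decimal("0.20"), "NL": Decimal("0.21")}


@dataclass
class Invoice:
    country: str
    net: Decimal
    vat: Decimal
    supplier_vat_id: Optional[str]


def vat_issues(inv: Invoice) -> list[str]:
    """Apply the rules configured for the invoice's country."""
    rate = STANDARD_VAT_RATES.get(inv.country)
    if rate is None:
        return [f"no VAT rule configured for {inv.country}"]
    issues = []
    expected = (inv.net * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    if inv.vat != expected:
        issues.append(f"VAT {inv.vat} differs from expected {expected}")
    if not inv.supplier_vat_id:
        issues.append("missing supplier VAT ID")
    return issues


print(vat_issues(Invoice("DE", Decimal("1000.00"), Decimal("190.00"), "DE123456789")))  # []
print(vat_issues(Invoice("FR", Decimal("1000.00"), Decimal("190.00"), None)))
# ['VAT 190.00 differs from expected 200.00', 'missing supplier VAT ID']
```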

Moving from Periodic to Continuous Finance

From Month-End Event to Always-On Process

In a continuous model, the close is no longer a single month-end event. Reconciliations run throughout the period, so balances stay aligned. Embedded accrual logic captures expected expenses in near real time. Real-time variance detection flags deviations early. Automated narrative summaries may draft initial commentary on significant movements, providing a starting point for review. Instead of writing explanations from scratch under a deadline, finance professionals refine system-generated insights.
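Real-time variance detection often combines an absolute and a relative threshold, so that neither large percentage swings on tiny balances nor small percentage moves on very large balances flood the review queue. In this minimal sketch an account is flagged only when it exceeds both thresholds (or has no expected value at all); the thresholds and account data are invented for illustration.

```python
from decimal import Decimal


def flag_variances(actuals, expected, abs_threshold=Decimal("50000"),
                   pct_threshold=Decimal("0.10")):
    """Flag accounts whose actual deviates from expected by more than both thresholds."""
    flagged = {}
    for account, actual in actuals.items():
        exp = expected.get(account, Decimal("0"))
        diff = actual - exp
        pct = abs(diff) / abs(exp) if exp else None  # None: no baseline to compare
        if abs(diff) > abs_threshold and (pct is None or pct > pct_threshold):
            flagged[account] = diff
    return flagged


actuals = {"6100-OFFICE": Decimal("9000"), "6200-TRAVEL": Decimal("310000"),
           "5000-COGS": Decimal("4120000")}
expected = {"6100-OFFICE": Decimal("4000"), "6200-TRAVEL": Decimal("240000"),
            "5000-COGS": Decimal("4050000")}
print(flag_variances(actuals, expected))
# {'6200-TRAVEL': Decimal('70000')}
```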

AI in the Close Cycle

AI applications in the close are expanding cautiously. Variance explanation generation can analyze historical trends and operational drivers to propose plausible reasons for changes. Journal entry recommendations based on recurring patterns can save time. Control breach detection models identify unusual combinations of approvals or postings. Risk scoring for high-impact accounts helps prioritize review. Not every balance sheet account requires the same level of scrutiny each period.

Still, AI is only as strong as the pipeline feeding it. If source data is inconsistent or incomplete, outputs will reflect those weaknesses. Blind trust in algorithmic suggestions is dangerous. Human oversight remains essential.

Designing a Scalable Finance Intelligent Data Pipeline

Ambition without architecture leads to frustration. Designing a scalable pipeline requires a clear blueprint.

Source Layer

The source layer includes ERP systems, CRM platforms, billing engines, banking APIs, procurement tools, payroll systems, and any other financial data origin. Each source should be cataloged with defined ownership and data contracts.
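A data contract can be as simple as a declared set of fields and parsers that the source owner agrees to honor, checked on every batch so that a schema change fails loudly at ingestion instead of silently breaking downstream reports. Rejected rows carry an explicit reason rather than disappearing. The `DataContract` format, owner address, and field names below are hypothetical; in practice teams often express contracts with JSON Schema or similar tooling.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal, InvalidOperation


@dataclass(frozen=True)
class DataContract:
    source: str
    owner: str
    fields: dict  # field name -> parser that raises on invalid input


BILLING_CONTRACT = DataContract(
    source="billing_platform",
    owner="revenue-ops@example.com",
    fields={"invoice_id": str, "amount": Decimal, "currency": str,
            "issued_on": date.fromisoformat},
)


def apply_contract(contract: DataContract, rows: list[dict]):
    """Split rows into accepted (parsed) and rejected (with explicit reasons)."""
    accepted, rejected = [], []
    for row in rows:
        missing = [f for f in contract.fields if f not in row]
        extra = [f for f in row if f not in contract.fields]
        if missing or extra:
            rejected.append((row, f"missing={missing} unexpected={extra}"))
            continue
        try:
            accepted.append({f: parse(row[f]) for f, parse in contract.fields.items()})
        except (ValueError, InvalidOperation) as exc:
            rejected.append((row, f"parse error: {exc!r}"))
    return accepted, rejected


rows = [
    {"invoice_id": "B-1", "amount": "120.00", "currency": "USD", "issued_on": "2025-03-03"},
    {"invoice_id": "B-2", "amount": "n/a", "currency": "USD", "issued_on": "2025-03-03"},
    # Upstream renamed a column without telling anyone:
    {"invoice_id": "B-3", "amount": "75.00", "currency": "USD", "issue_date": "2025-03-04"},
]
accepted, rejected = apply_contract(BILLING_CONTRACT, rows)
print(len(accepted), [r["invoice_id"] for r, _ in rejected])  # 1 ['B-2', 'B-3']
```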

Ingestion Layer

Ingestion relies on API-first connectors where available. Event streaming may be appropriate for high-volume or time-sensitive transactions. The pipeline must accommodate both structured and unstructured ingestion. Error handling mechanisms should be explicit, not implicit.

Processing and Intelligence Layer

Here, data transformation logic standardizes schemas and applies business rules. Machine learning models handle classification and anomaly detection. A policy engine enforces approval thresholds, segregation of duties, and compliance logic. Versioning of transformations is critical. When a rule changes, historical data should remain traceable.
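One way to keep history traceable when a rule changes is to version rules by effective date, evaluate each transaction with the version in force on its posting date, and store that version alongside the result. The rule below (a fixed-asset capitalization threshold) and its dates and amounts are hypothetical examples.

```python
from bisect import bisect_right
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass(frozen=True)
class RuleVersion:
    version: str
    effective_from: date
    capitalization_threshold: Decimal


# Hypothetical history of one business rule, sorted by effective date.
CAPEX_RULE_HISTORY = [
    RuleVersion("v1", date(2023, 1, 1), Decimal("2500")),
    RuleVersion("v2", date(2025, 1, 1), Decimal("5000")),
]


def rule_for(posting_date: date) -> RuleVersion:
    """Return the rule version in force on the posting date."""
    starts = [r.effective_from for r in CAPEX_RULE_HISTORY]
    idx = bisect_right(starts, posting_date) - 1
    if idx < 0:
        raise LookupError(f"No rule in force on {posting_date}")
    return CAPEX_RULE_HISTORY[idx]


def classify(amount: Decimal, posting_date: date) -> tuple[str, str]:
    rule = rule_for(posting_date)
    treatment = "capitalize" if amount >= rule.capitalization_threshold else "expense"
    return treatment, rule.version  # the version is stored alongside the result


print(classify(Decimal("3000"), date(2024, 6, 30)))  # ('capitalize', 'v1')
print(classify(Decimal("3000"), date(2025, 6, 30)))  # ('expense', 'v2')
```

Because older versions are never overwritten, reprocessing a 2024 transaction reproduces the 2024 result, which is what auditors typically need to see.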

Control and Governance Layer

Role-based access restricts sensitive data. Audit logs capture every significant action. Model monitoring tracks performance and drift. Data quality dashboards provide visibility into completeness, accuracy, and timeliness. Governance is not glamorous work, but without it, scaling introduces risk.
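The completeness and timeliness figures behind such a dashboard can be computed directly from each batch. This sketch assumes every record carries an event timestamp and an arrival timestamp, treats a record as late if it arrives more than a configurable lag after its event, and uses invented field names and a four-hour lag for illustration.

```python
from datetime import datetime, timedelta


def quality_metrics(records, required_fields, max_lag=timedelta(hours=4)):
    """Compute simple completeness and timeliness ratios for a batch."""
    total = len(records)
    if total == 0:
        return {"completeness": None, "timeliness": None}
    complete = sum(all(r.get(f) not in (None, "") for f in required_fields) for r in records)
    on_time = sum((r["received_at"] - r["event_at"]) <= max_lag for r in records)
    return {"completeness": round(complete / total, 3), "timeliness": round(on_time / total, 3)}


t0 = datetime(2025, 3, 31, 9, 0)
batch = [
    {"doc_id": "J-1", "cost_center": "CC10", "event_at": t0, "received_at": t0 + timedelta(minutes=5)},
    {"doc_id": "J-2", "cost_center": "", "event_at": t0, "received_at": t0 + timedelta(hours=1)},
    {"doc_id": "J-3", "cost_center": "CC20", "event_at": t0, "received_at": t0 + timedelta(hours=9)},
    {"doc_id": "J-4", "cost_center": "CC10", "event_at": t0, "received_at": t0 + timedelta(hours=2)},
]
print(quality_metrics(batch, required_fields=["doc_id", "cost_center"]))
# {'completeness': 0.75, 'timeliness': 0.75}
```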

Consumption Layer

Finally, data flows into BI tools, forecasting systems, regulatory reporting modules, and executive dashboards. Ideally, these outputs draw from a single governed source of truth rather than parallel extracts. When each layer is clearly defined, teams can iterate without destabilizing the entire system.

Why Choose DDD?

Digital Divide Data combines technical precision with operational discipline. Intelligent finance pipelines depend on clean, structured, and consistently validated data, yet many organizations underestimate how much effort that actually requires. DDD focuses on the groundwork that determines whether automation succeeds or stalls. From large-scale document digitization and structured data extraction to annotation workflows that train classification and anomaly detection models, DDD approaches data as a long-term asset rather than a one-time input. The teams are trained to follow defined quality frameworks, apply rigorous validation standards, and maintain traceability across datasets, which is critical in finance environments where errors are not just inconvenient but consequential.

DDD supports this evolution with flexible delivery models and experienced talent who understand structured financial data, compliance sensitivity, and process documentation. Instead of treating data preparation as an afterthought, DDD embeds governance, audit readiness, and continuous quality monitoring into the workflow. The result is not just faster data processing, but greater confidence in the systems that depend on that data.

Conclusion

Finance transformation often starts with tools. A new ERP module, a dashboard upgrade, a workflow platform. Those investments matter, but they only go so far if the underlying data continues to move through disconnected paths, manual reconciliations, and fragile integrations. Scaling finance is less about adding more technology and more about rethinking how financial data flows from source to decision.

Intelligent data pipelines shift the focus to that foundation. They connect systems in a structured way, embed validation and controls directly into the flow of transactions, and create traceable, audit-ready outputs by design. Over time, this reduces operational friction. Close cycles become more predictable. Exception handling becomes more targeted. Forecasting improves because the inputs are consistent and timely.

Scaling finance and accounting is not about working harder at month-end. It is about building an infrastructure where data flows cleanly, controls are embedded, intelligence is continuous, compliance is systematic, and insights are available when they are needed. Intelligent data pipelines make that possible.

Partner with Digital Divide Data to build the structured, high-quality data foundation your intelligent finance pipelines depend on.


FAQs

1. How long does it typically take to implement an intelligent finance data pipeline?

Timelines vary widely based on system complexity and data quality. A focused pilot in one function, such as accounts payable, may take three to six months. A full enterprise rollout across multiple entities can extend over a year. The condition of existing data and clarity of governance structures often determine speed more than technology selection.

2. Do intelligent data pipelines require replacing existing ERP systems?

Not necessarily. Many organizations layer intelligent pipelines on top of existing ERPs through API integrations. The goal is to enhance data flow and control without disrupting core transaction systems. ERP replacement may be considered separately if systems are outdated, but it is not a prerequisite.

3. How do intelligent pipelines handle data privacy in cross-border environments?

Privacy requirements can be encoded into access controls, data masking rules, and jurisdiction-specific storage policies within the governance layer. Role-based permissions and audit logs help ensure that sensitive financial data is accessed appropriately and in compliance with regional regulations.

4. What skills are required within the finance team to manage intelligent pipelines?

Finance teams benefit from professionals who understand both accounting principles and data concepts. This does not mean every accountant must become a data engineer. However, literacy in data flows, controls, and basic analytics becomes increasingly valuable. Collaboration between finance, IT, and data teams is essential.

5. Can smaller organizations benefit from intelligent pipelines, or is this only for large enterprises?

While complexity increases with size, smaller organizations also face fragmented tools and growing compliance expectations. Scaled-down versions of intelligent pipelines can still reduce manual effort and improve control. The architecture may be simpler, but the principles remain relevant.